NIST SPECIAL PUBLICATION 1800-10C

Protecting Information and System Integrity in Industrial Control System Environments:

Cybersecurity for the Manufacturing Sector

Volume C:

How-to Guides

Michael Powell

National Cybersecurity Center of Excellence

National Institute of Standards and Technology

Michael Pease

Keith Stouffer

CheeYee Tang

Timothy Zimmerman

Engineering Laboratory

National Institute of Standards and Technology

Joseph Brule

Chelsea Deane

John Hoyt

Mary Raguso

Aslam Sherule

Kangmin Zheng

The MITRE Corporation

McLean, Virginia

Matthew Zopf

Strativia

Largo, Maryland

March 2022

FINAL

This publication is available free of charge from https://doi.org/10.6028/NIST.SP.1800-10

The first draft of this publication is available free of charge from https://www.nccoe.nist.gov/publications/practice-guide/protecting-information-and-system-integrity-industrial-control-system-draft

DISCLAIMER

Certain commercial entities, equipment, products, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply special status or relationship with NIST or recommendation or endorsement by NIST or NCCoE; neither is it intended to imply that entities, equipment, products, or materials are necessarily the best available for the purpose.

While NIST and the NCCoE address goals of improving management of cybersecurity and privacy risk through outreach and application of standards and best practices, it is the stakeholder’s responsibility to fully perform a risk assessment to include the current threat, vulnerabilities, likelihood of a compromise, and the impact should the threat be realized before adopting cybersecurity measures such as this recommendation.

Domain names and IP addresses shown in this guide represent an example domain and network environment to demonstrate the NCCoE project use case scenarios and the security capabilities.

National Institute of Standards and Technology Special Publication 1800-10C, Natl. Inst. Stand. Technol. Spec. Publ. 1800-10C, 128 pages, March 2022, CODEN: NSPUE2.

FEEDBACK

As a private-public partnership, we are always seeking feedback on our practice guides. We are particularly interested in seeing how businesses apply NCCoE reference designs in the real world. If you have implemented the reference design, or have questions about applying it in your environment, please email us at manufacturing_nccoe@nist.gov.

All comments are subject to release under the Freedom of Information Act (FOIA).

NATIONAL CYBERSECURITY CENTER OF EXCELLENCE

The National Cybersecurity Center of Excellence (NCCoE), a part of the National Institute of Standards and Technology (NIST), is a collaborative hub where industry organizations, government agencies, and academic institutions work together to address businesses’ most pressing cybersecurity issues. This public-private partnership enables the creation of practical cybersecurity solutions for specific industries, as well as for broad, cross-sector technology challenges. Through consortia under Cooperative Research and Development Agreements (CRADAs), including technology partners—from Fortune 50 market leaders to smaller companies specializing in information technology security—the NCCoE applies standards and best practices to develop modular, easily adaptable example cybersecurity solutions using commercially available technology. The NCCoE documents these example solutions in the NIST Special Publication 1800 series, which maps capabilities to the NIST Cybersecurity Framework and details the steps needed for another entity to re-create the example solution. The NCCoE was established in 2012 by NIST in partnership with the State of Maryland and Montgomery County, Maryland.

To learn more about the NCCoE, visit https://www.nccoe.nist.gov/. To learn more about NIST, visit https://www.nist.gov

NIST CYBERSECURITY PRACTICE GUIDES

NIST Cybersecurity Practice Guides (Special Publication 1800 series) target specific cybersecurity challenges in the public and private sectors. They are practical, user-friendly guides that facilitate the adoption of standards-based approaches to cybersecurity. They show members of the information security community how to implement example solutions that help them align more easily with relevant standards and best practices, and provide users with the materials lists, configuration files, and other information they need to implement a similar approach.

The documents in this series describe example implementations of cybersecurity practices that businesses and other organizations may voluntarily adopt. These documents do not describe regulations or mandatory practices, nor do they carry statutory authority.

ABSTRACT

Today’s manufacturing organizations rely on industrial control systems (ICS) to conduct their operations. ICS face increasingly frequent and sophisticated cyber attacks, making manufacturing the second-most targeted industry (C. Singleton et al., X-Force Threat Intelligence Index 2021, IBM, February 2021, https://www.ibm.com/security/data-breach/threat-intelligence). Cyber attacks against ICS threaten operations and worker safety and can result in financial loss and harm to the organization’s reputation.

The architecture and solutions presented in this guide are built upon standards-based, commercially available products, and represent some of the possible solutions. The solutions implement standard cybersecurity capabilities, such as behavioral anomaly detection, application allowlisting, file integrity-checking, change control management, and user authentication and authorization. The solution was tested in two distinct lab settings: a discrete manufacturing work cell, which represents assembly-line production, and a continuous process control system (PCS), which represents chemical manufacturing.

Organizations that are interested in protecting the integrity of the manufacturing system and information from destructive malware, insider threats, and unauthorized software should first conduct a risk assessment and determine the appropriate security capabilities required to mitigate those risks. Once the security capabilities are identified, the sample architecture and solution presented in this document may be used.

The security capabilities of the example solution are mapped to NIST’s Cybersecurity Framework, the National Initiative for Cybersecurity Education Framework, and NIST Special Publication 800-53.

KEYWORDS

Application allowlisting; behavioral anomaly detection; file integrity checking; firmware modification; industrial control systems; manufacturing; remote access; software modification; user authentication; user authorization.

ACKNOWLEDGEMENTS

We are grateful to the following individuals for their generous contributions of expertise and time.

| Name | Organization |
|---|---|
| Dan Frechette | Microsoft |
| Ian Schmertzler | Dispel |
| Ben Burke | Dispel |
| Chris Jensen | Tenable |
| Bethany Brower | VMware |
| Dennis Hui | OSIsoft (now part of AVEVA) |
| John Matranga | OSIsoft (now part of AVEVA) |
| Michael A. Piccalo | Forescout |
| Tim Jones | Forescout |
| Yejin Jang | Forescout |
| Samantha Pelletier | TDI Technologies |
| Rusty Hale | TDI Technologies |
| Steve Petruzzo | GreenTec-USA |
| Josh Carlson | Dragos |
| Alex Baretta | Dragos |

The Technology Partners/Collaborators who participated in this build submitted their capabilities in response to a notice in the Federal Register. Respondents with relevant capabilities or product components were invited to sign a Cooperative Research and Development Agreement (CRADA) with NIST, allowing them to participate in a consortium to build this example solution. We worked with:

| Technology Partner/Collaborator | Product |
|---|---|
| VMware | Carbon Black App Control |
| Microsoft | Azure Defender for the Internet of Things (IoT) (incorporating technology from the acquisition of CyberX) |
| Dispel | Dispel Wicket ESI, Dispel Enclave, Dispel VDI (Virtual Desktop Interface) |
| Dragos | Dragos Platform |
| Forescout | eyeInspect (formerly SilentDefense), ICS Patrol, eyeSight |
| GreenTec-USA | WORMdisk and ForceField |
| OSIsoft (now part of AVEVA) | PI System (which comprises products such as PI Server, PI Vision, and others) |
| TDI Technologies | ConsoleWorks |
| Tenable | Tenable.ot |

DOCUMENT CONVENTIONS

The terms “shall” and “shall not” indicate requirements to be followed strictly to conform to the publication and from which no deviation is permitted. The terms “should” and “should not” indicate that among several possibilities, one is recommended as particularly suitable without mentioning or excluding others, or that a certain course of action is preferred but not necessarily required, or that (in the negative form) a certain possibility or course of action is discouraged but not prohibited. The terms “may” and “need not” indicate a course of action permissible within the limits of the publication. The terms “can” and “cannot” indicate a possibility and capability, whether material, physical, or causal.

PATENT DISCLOSURE NOTICE

NOTICE: The Information Technology Laboratory (ITL) has requested that holders of patent claims whose use may be required for compliance with the guidance or requirements of this publication disclose such patent claims to ITL. However, holders of patents are not obligated to respond to ITL calls for patents and ITL has not undertaken a patent search in order to identify which, if any, patents may apply to this publication.

As of the date of publication and following call(s) for the identification of patent claims whose use may be required for compliance with the guidance or requirements of this publication, no such patent claims have been identified to ITL.

No representation is made or implied by ITL that licenses are not required to avoid patent infringement in the use of this publication.

List of Figures

Figure 1‑1: CSMS Network Architecture

Figure 2‑1 Dispel High-level Implementation, from Remote Access for ICS

Figure 2‑2 Mapping a Network Drive

Figure 2‑3 Authentication to File Server

Figure 2‑4 Dragos OSIsoft PI Server Integration

Figure 2‑5 Dragos PI Web API Configuration

Figure 2‑6 OSIsoft PI Server to Dragos Asset and Data Pairing

Figure 2‑7 OSIsoft PI Server and Dragos Paired Data Elements

Figure 2‑8 Dragos Zone Administration Page

Figure 2‑9 Dragos Create Zone Pop-up

Figure 2‑10 Forescout High-Level Components and Dataflows

Figure 2‑11 Forescout SecureConnector Distribution Tool

Figure 2‑12 Forescout Agent Download

Figure 2‑13 eyeInspect Sensor Admin/Overview Page – Add Sensor

Figure 2‑14 Adding a New SilentDefense Sensor Dialog

Figure 2‑15 eyeInspect ICMP Protocol/Port Scan Attempt Settings

Figure 2‑16 eyeInspect Sensor Configuration Options

Figure 2‑17 eyeInspect Portscan Detection Settings

Figure 2‑18 Add ICS Patrol Sensor Dialog

Figure 2‑19 ICS Patrol Sensor Admin Page

Figure 2‑20 Add an ICS Patrol Scan Policy

Figure 2‑21 eyeSight Add Dialog – General Information

Figure 2‑22 eyeSight Add – Command Center Credentials

Figure 2‑23 eyeSight OT Settings

Figure 2‑24 eyeSight Test Connection Successful Message

Figure 2‑25 Azure Defender for IoT SSH Session for Network Configuration

Figure 2‑26 Azure Defender for IoT Create New Data Mining Report for AMS Protocol Information

Figure 2‑27 Azure Defender for IoT Custom Alert for Firmware Major Version Number Change

Figure 2‑28 Azure Defender for IoT Custom Alert for Firmware Minor Version Number Change

Figure 2‑29 Azure Defender for IoT Custom Alert for Firmware Build Version Number Change

Figure 2‑30 Screenshot of the PI Interface Configuration Utility before the Interface is configured.

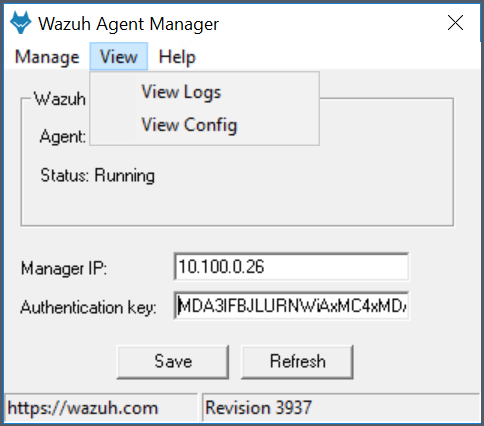

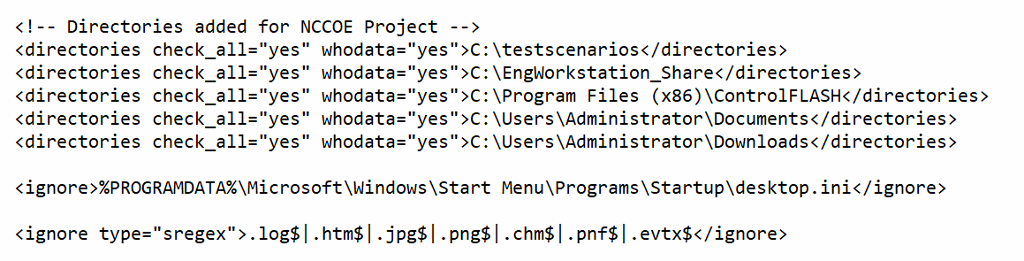

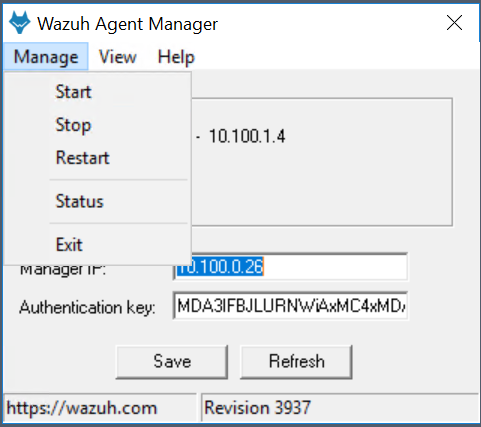

Figure 2‑39 Wazuh Agent Manager

Figure 2‑41 Wazuh Agent Manager User Interface

Figure 2‑42 Log Received After a File Change Was Detected

Figure 2‑43 ConsoleWorks Registration Screen

Figure 2‑44 ConsoleWorks Offline Registration Process

Figure 2‑45 ConsoleWorks System Backups

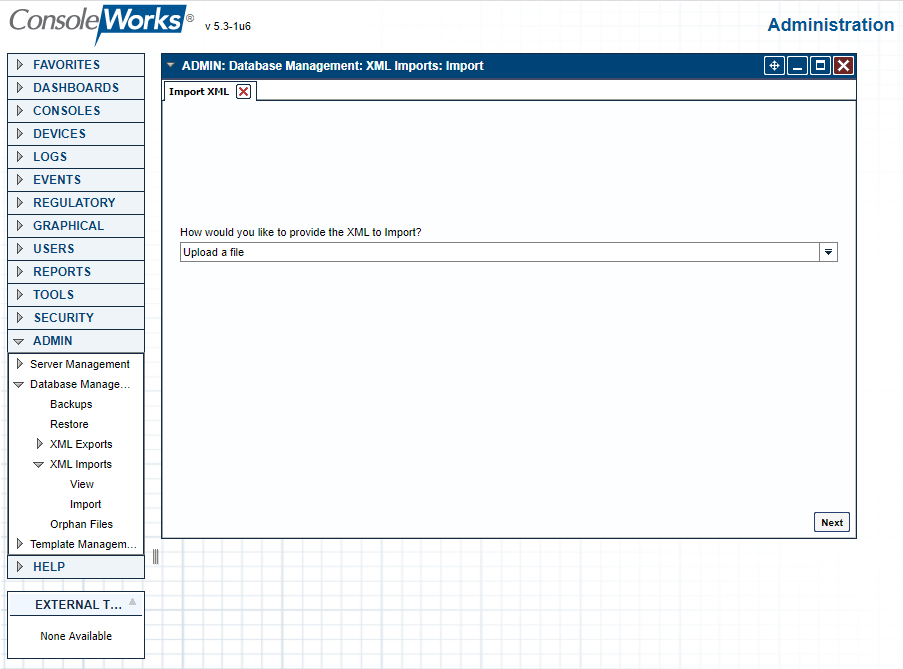

Figure 2‑46 ConsoleWorks Importing System Configurations and Components

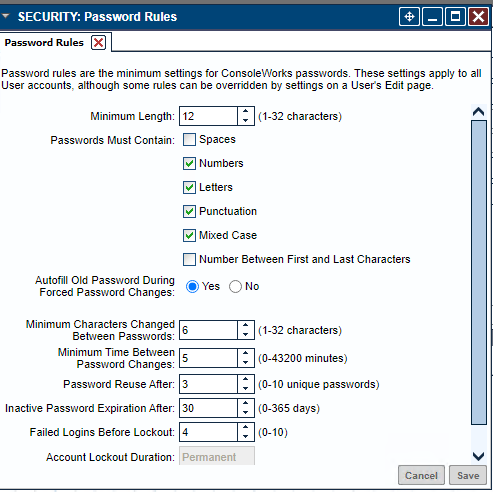

Figure 2‑47 ConsoleWorks Password Settings

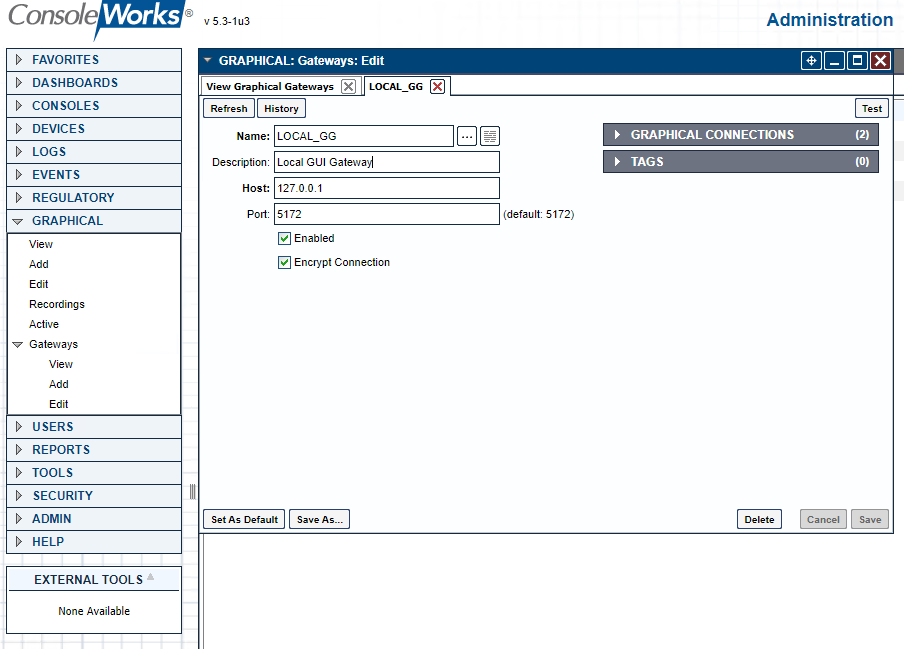

Figure 2‑48 ConsoleWorks Add the Local Graphical Gateway for RDP Access

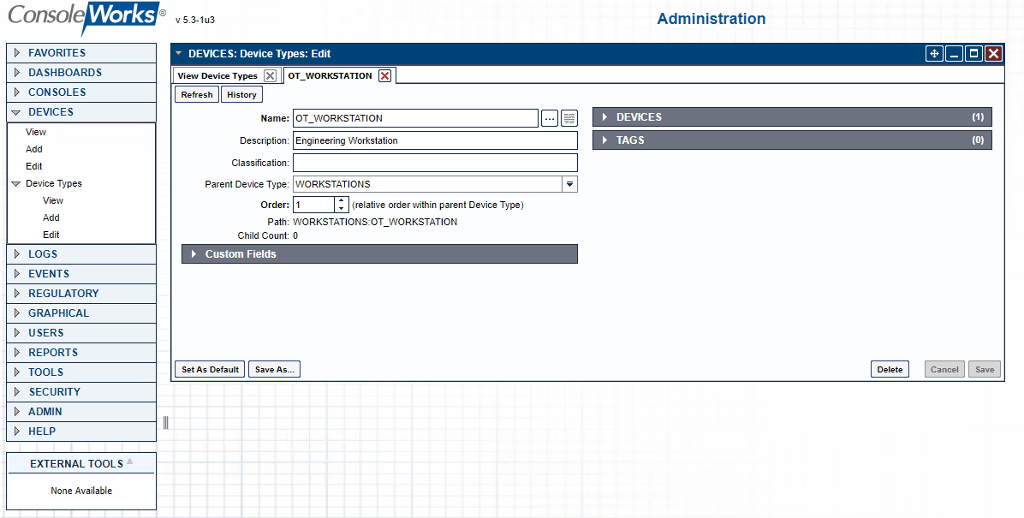

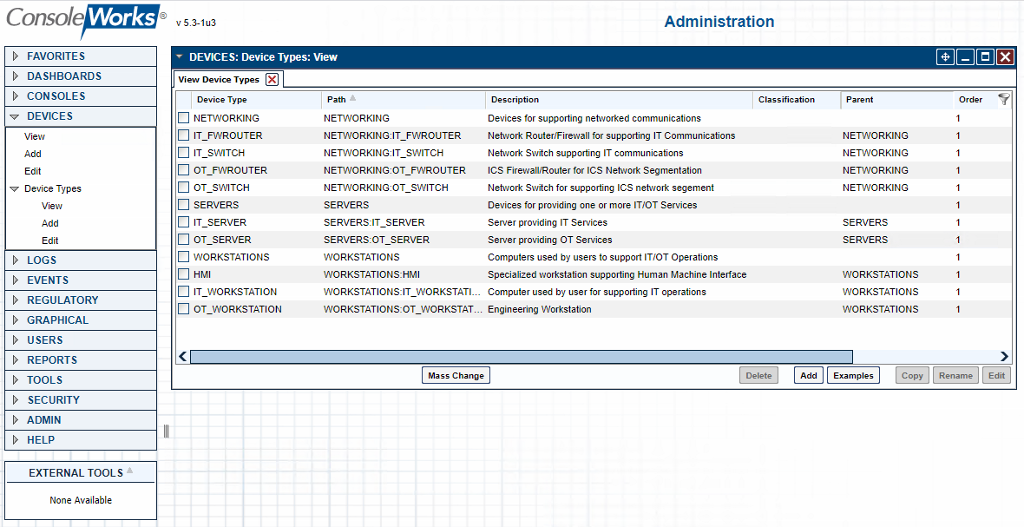

Figure 2‑49 ConsoleWorks Example Device Type Definition

Figure 2‑50 ConsoleWorks List of Device Types

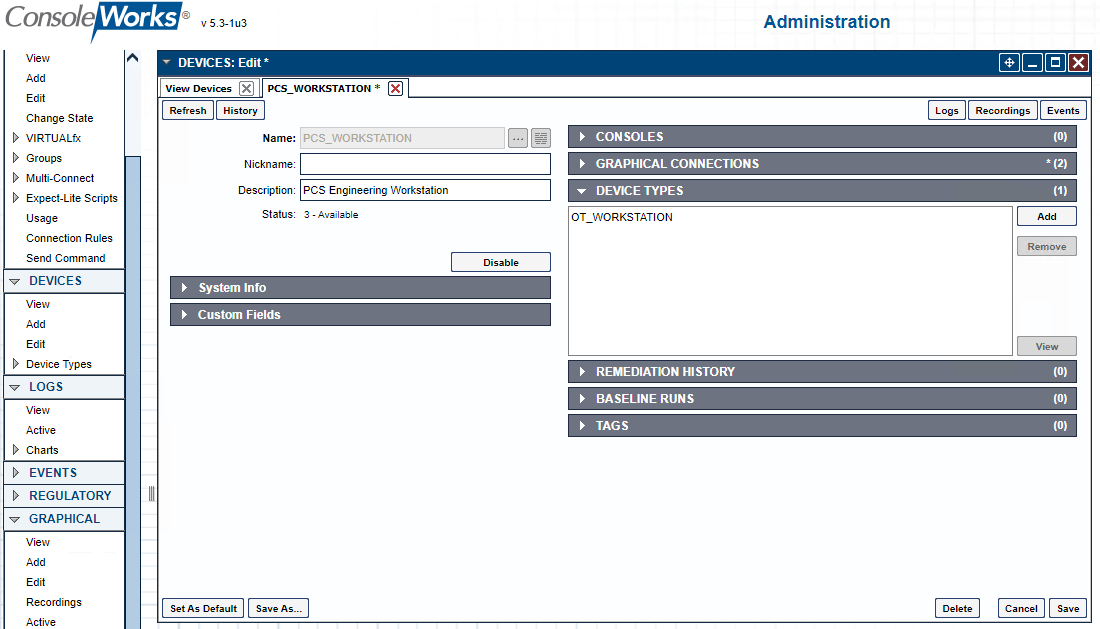

Figure 2‑51 ConsoleWorks Example Device Definition

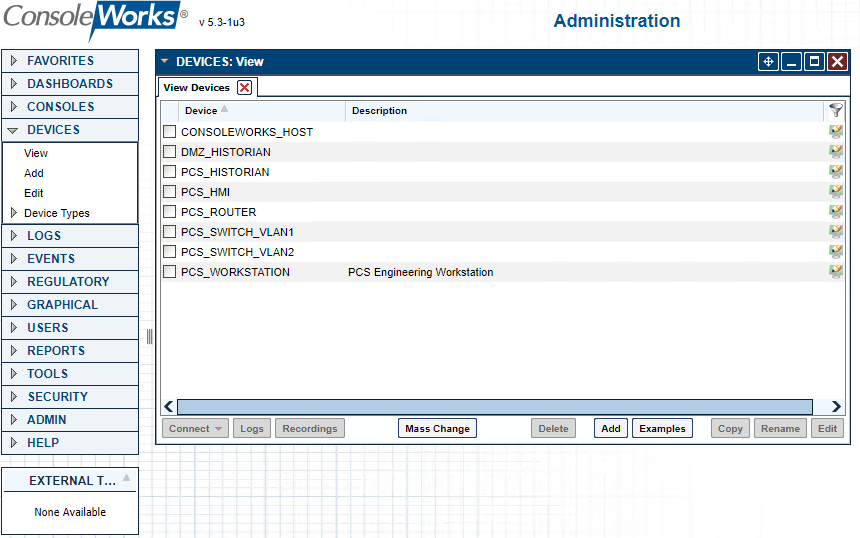

Figure 2‑52 ConsoleWorks List of PCS (Build 1) Devices

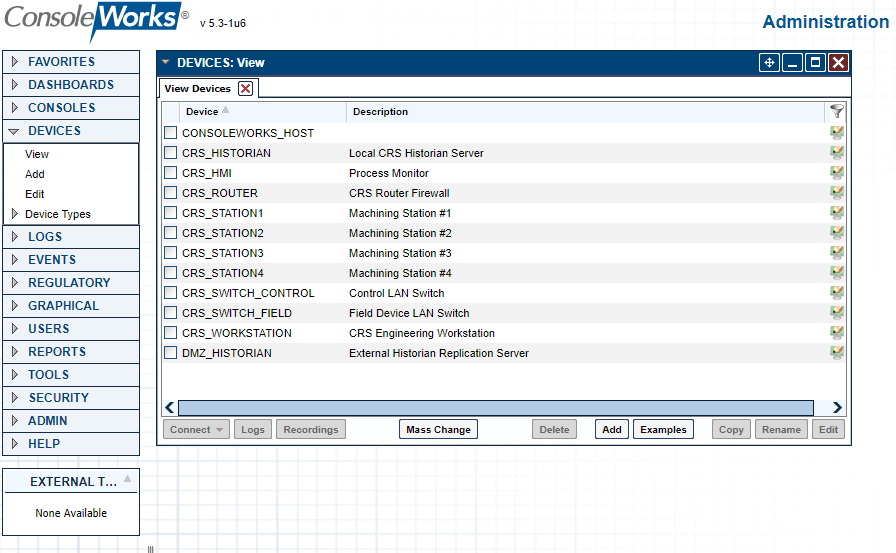

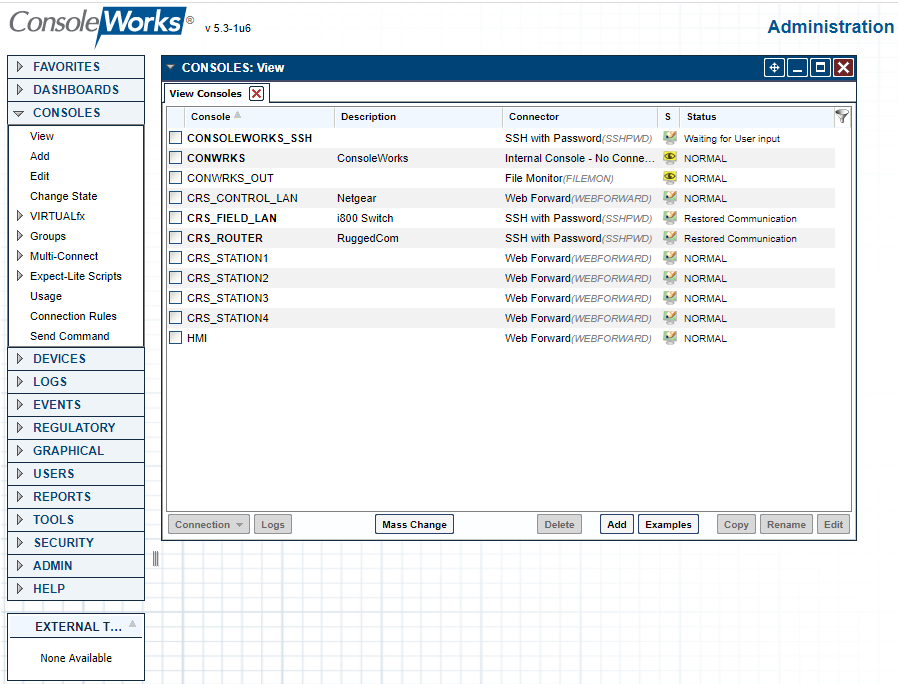

Figure 2‑53 ConsoleWorks List of CRS (Build 3) Devices

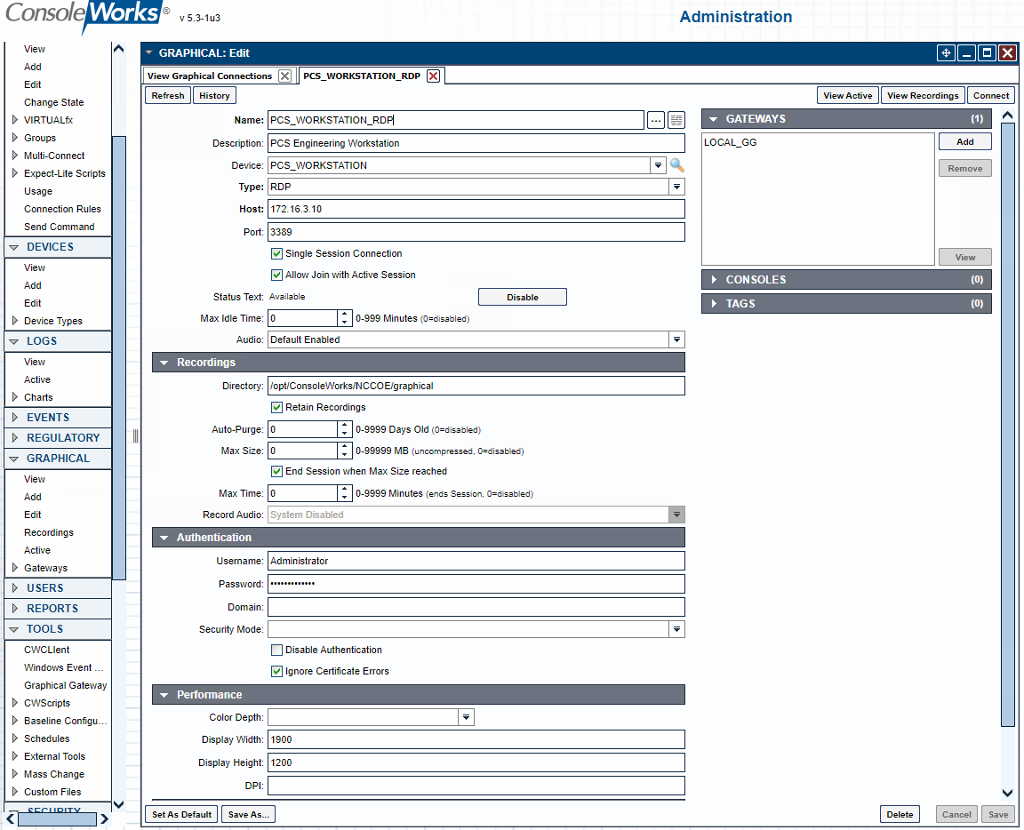

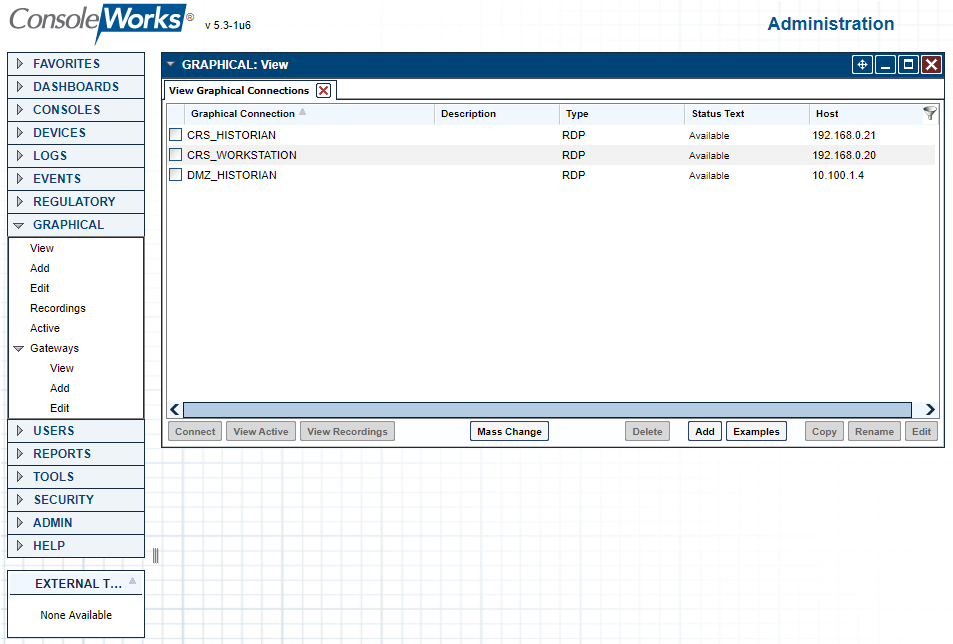

Figure 2‑54 ConsoleWorks Example RDP Configuration

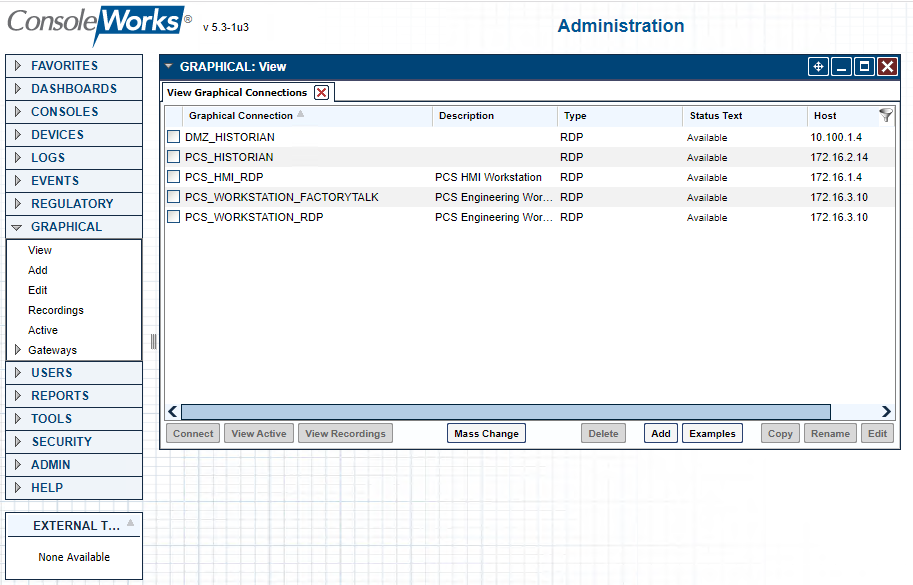

Figure 2‑55 ConsoleWorks List of PCS (Build 1) RDP Connections

Figure 2‑56 ConsoleWorks List of CRS (Build 3) RDP Connections

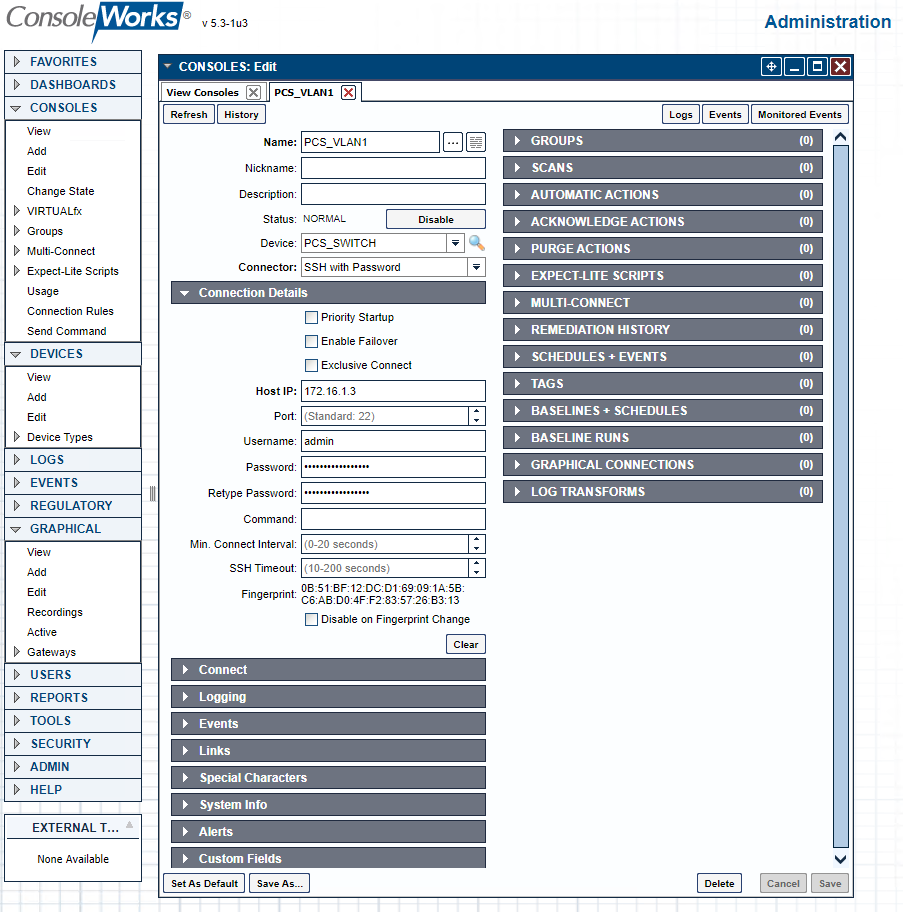

Figure 2‑57 ConsoleWorks Example Console (SSH) Connection

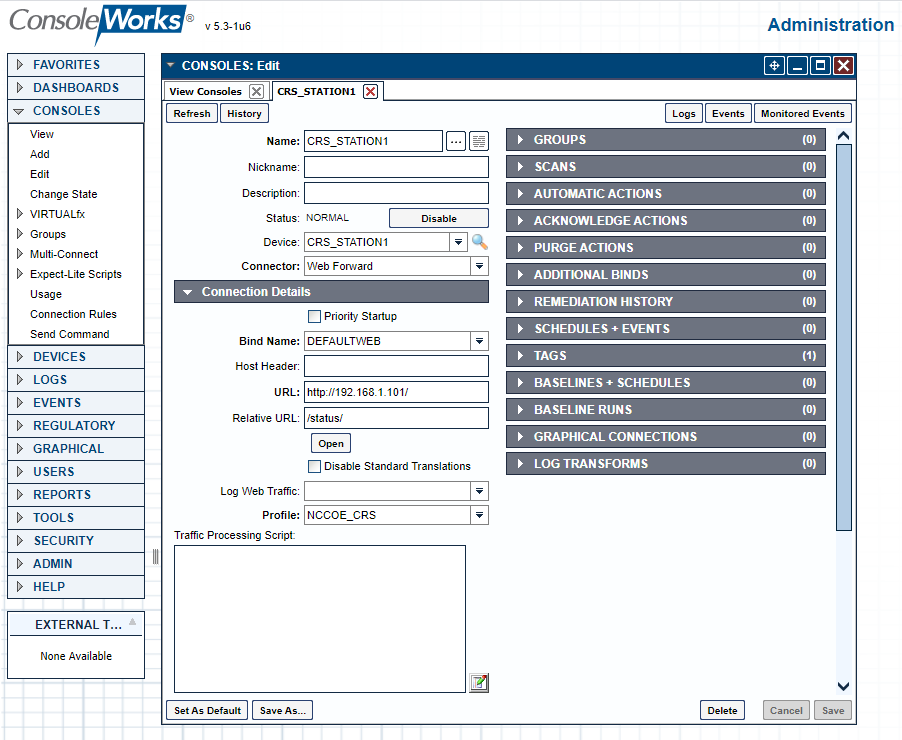

Figure 2‑58 ConsoleWorks Example Console (Web Forward) Connection

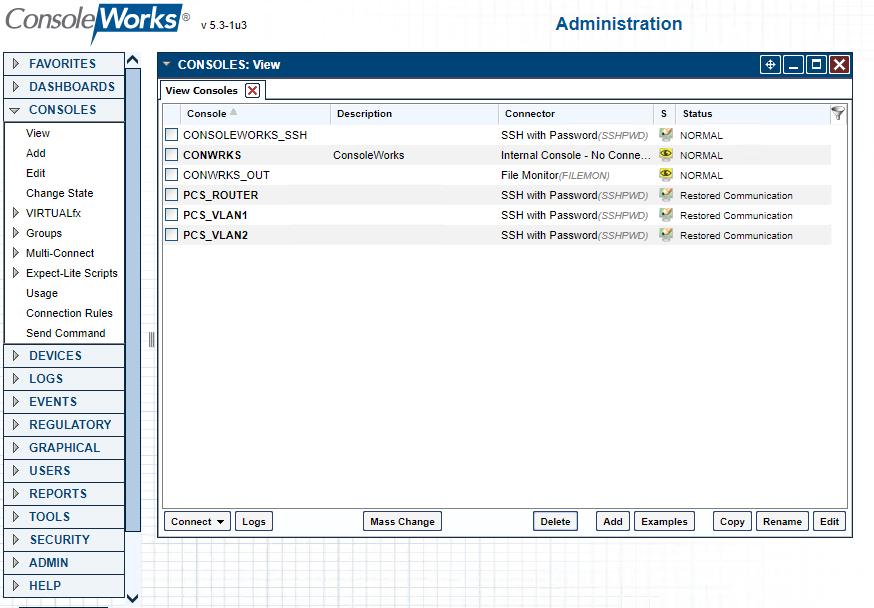

Figure 2‑59 ConsoleWorks List of PCS (Build 1) Console Connections

Figure 2‑60 ConsoleWorks List of CRS (Build 3) Console Connections

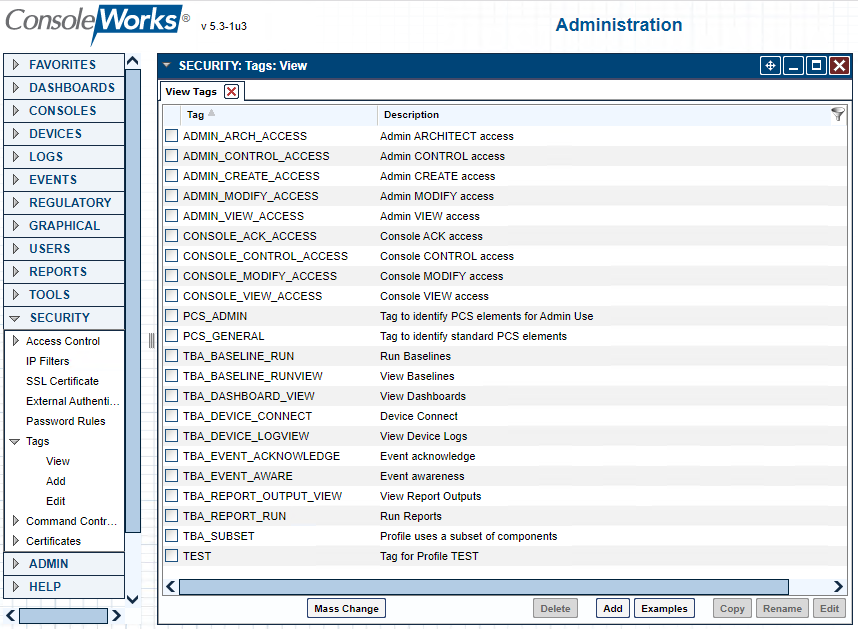

Figure 2‑61 ConsoleWorks List of Tags for PCS (Build 1)

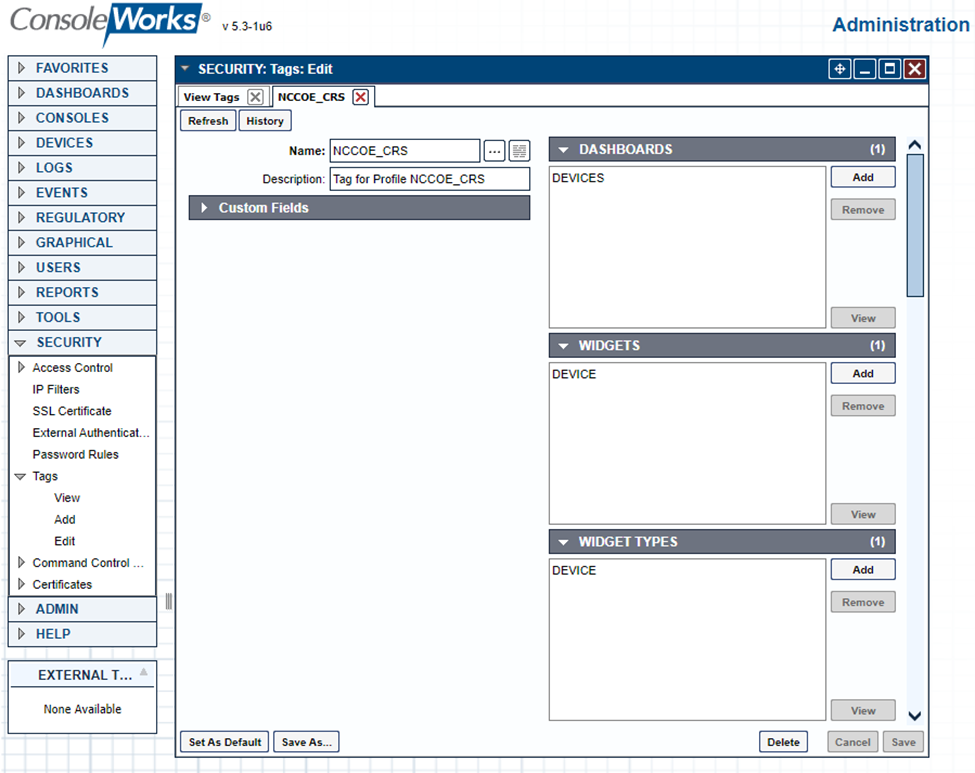

Figure 2‑62 ConsoleWorks Example Tag Definition Screen

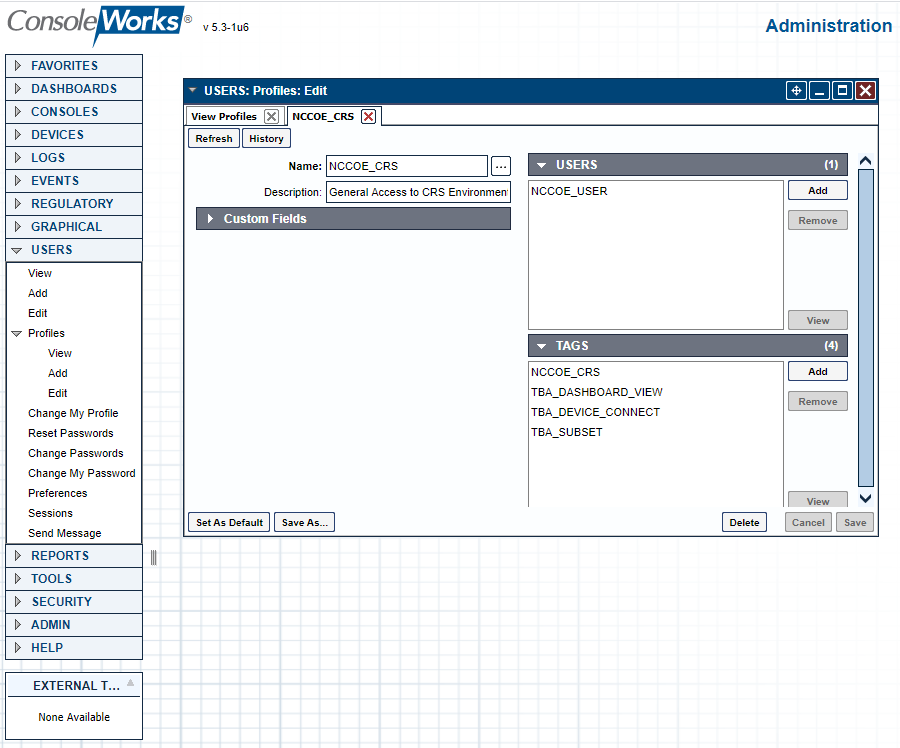

Figure 2‑63 ConsoleWorks Example Profile

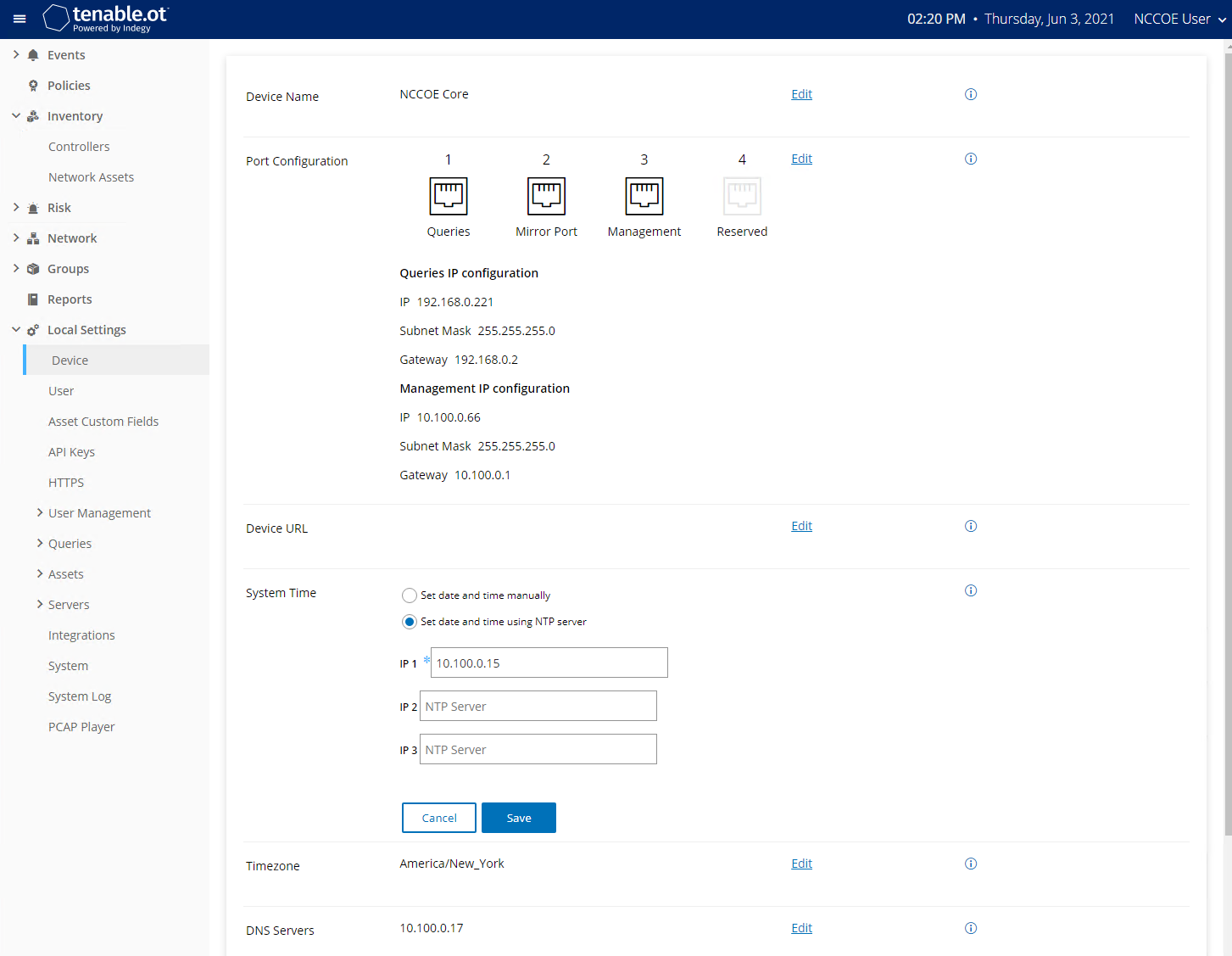

Figure 2‑64 Tenable.OT Local Device Setting for NTP Service

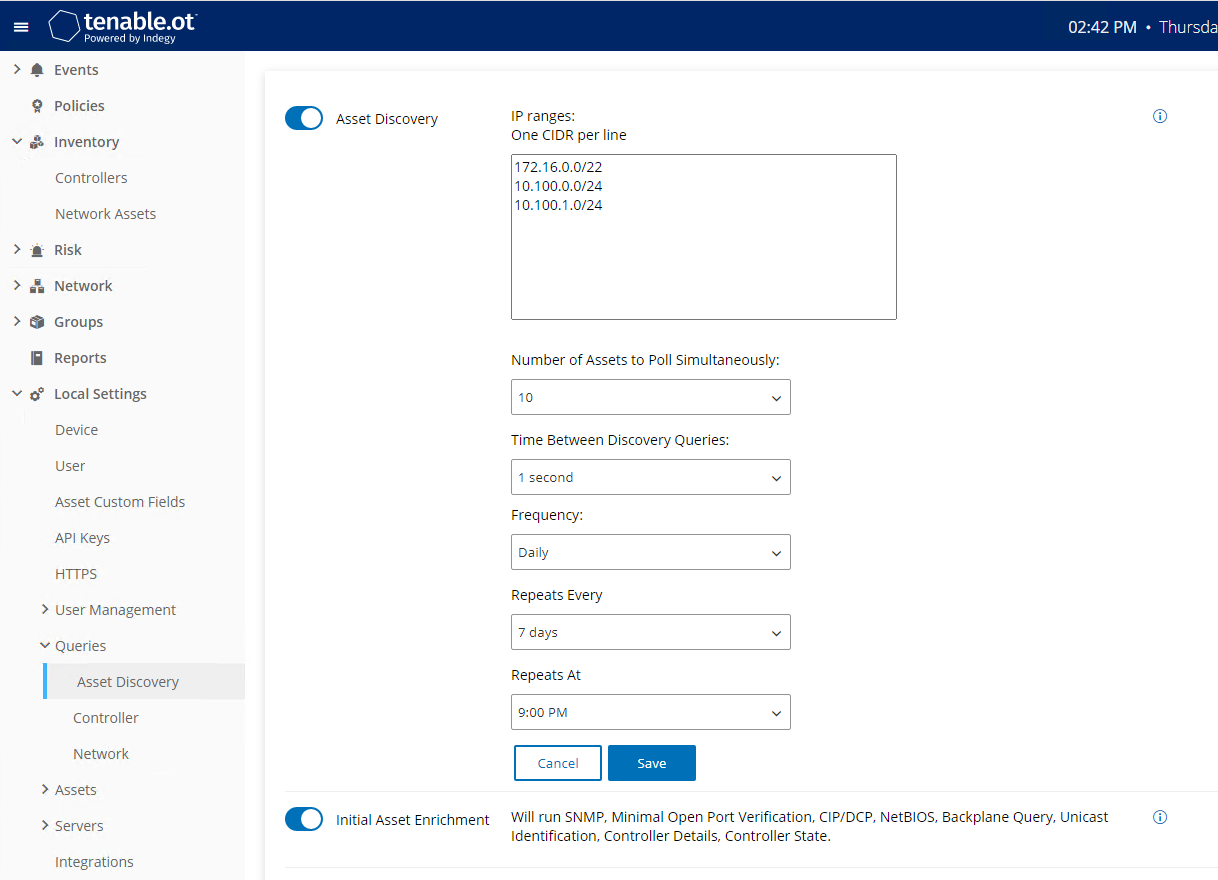

Figure 2‑65 Tenable.OT Asset Discovery Settings

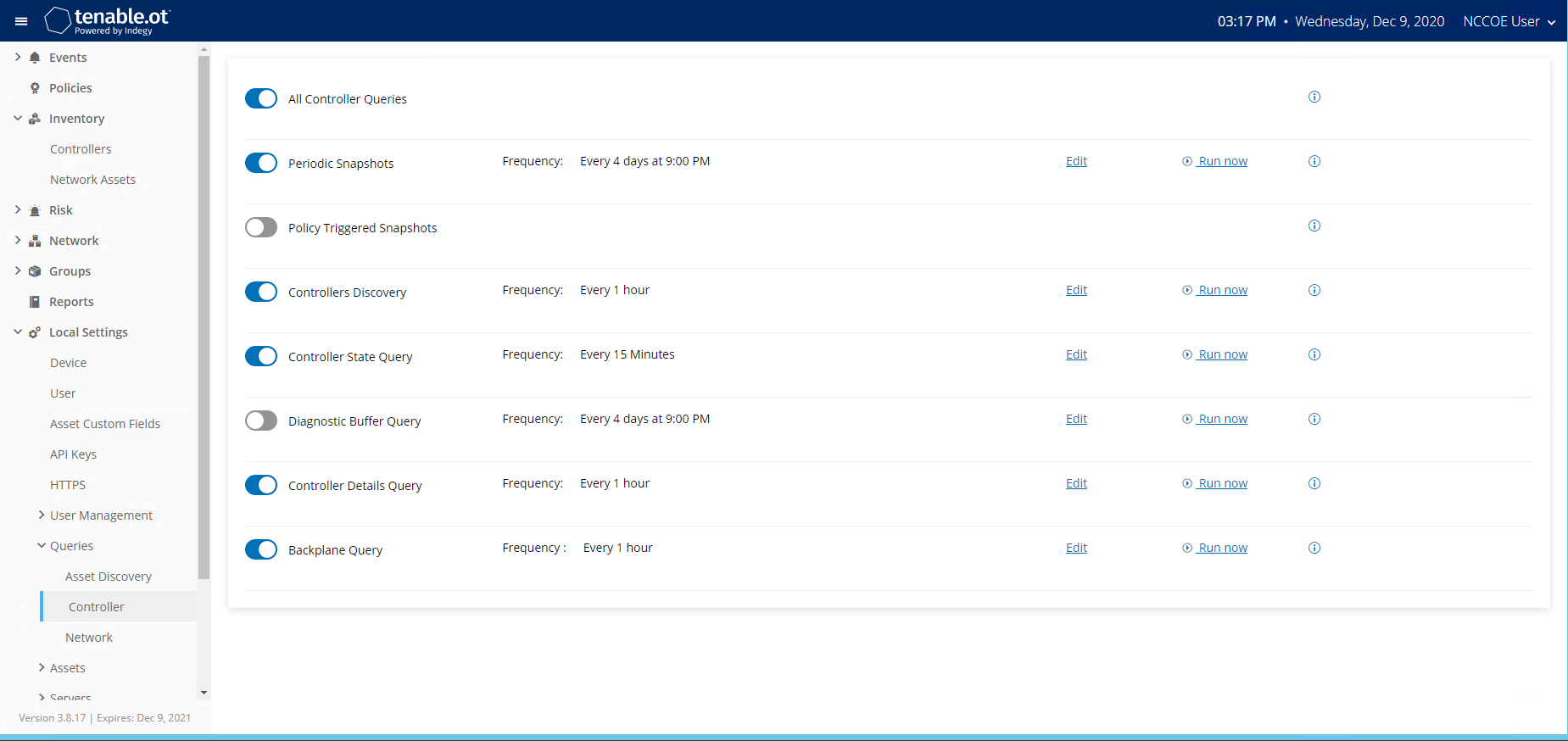

Figure 2‑66 Tenable.OT Controller Scans

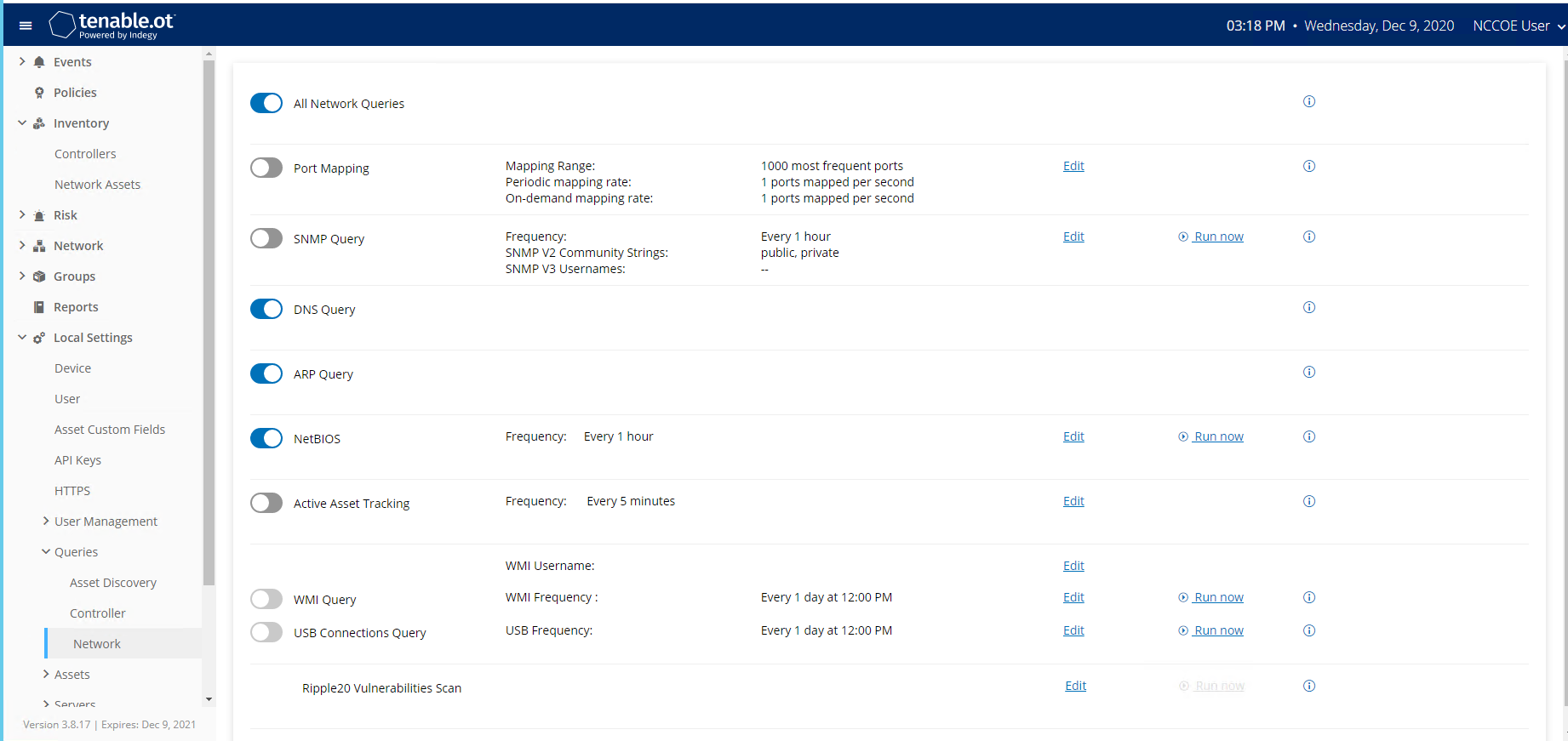

Figure 2‑67 Tenable.OT Network Scan Settings

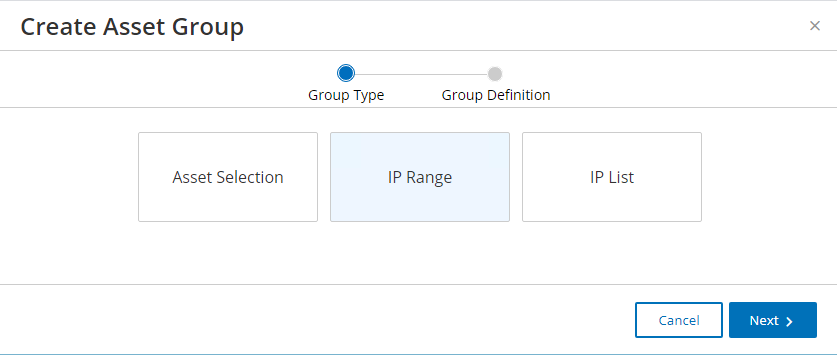

Figure 2‑68 Tenable.OT Create Asset Group Type

Figure 2‑69 Tenable.OT Create Asset Group Definition

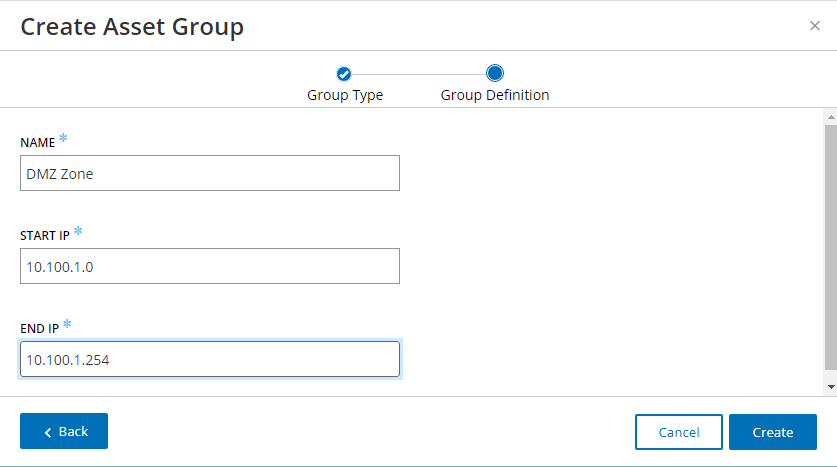

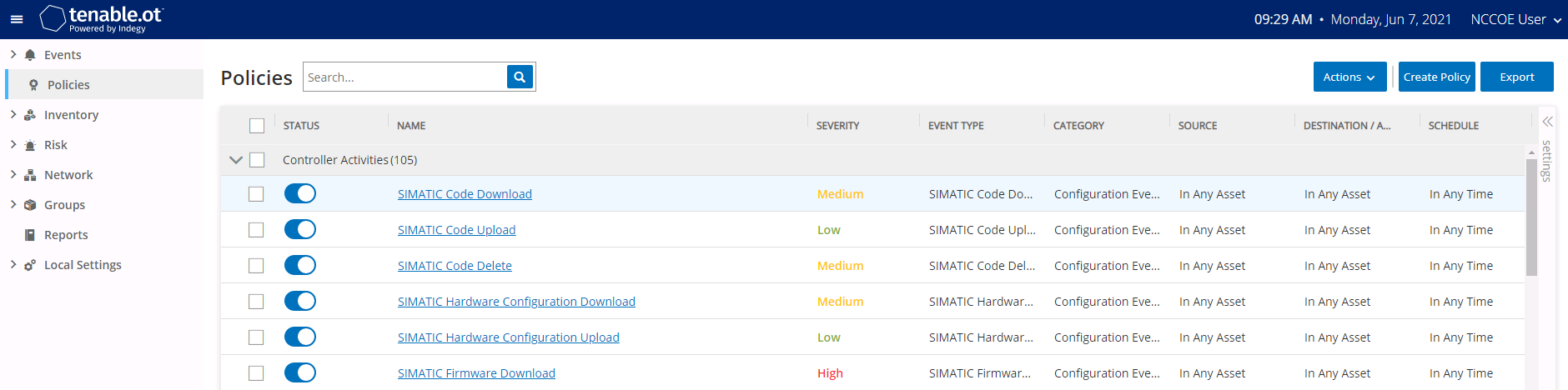

Figure 2‑70 Tenable.OT Policy Settings

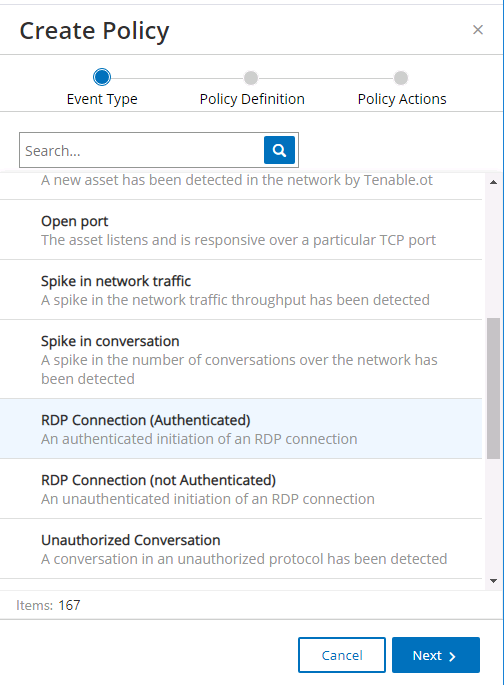

Figure 2‑71 Tenable.OT Create Policy – Event Type Options

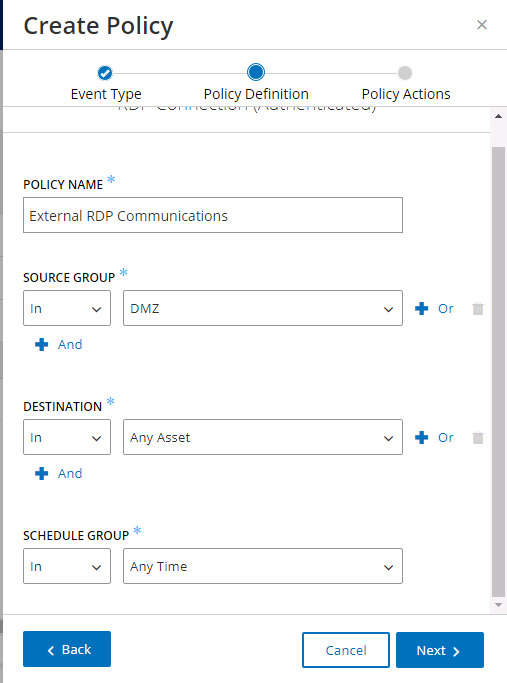

Figure 2‑72 Tenable.OT Create Policy - Definition

Figure 2‑73 Tenable.OT Create Policy - Actions

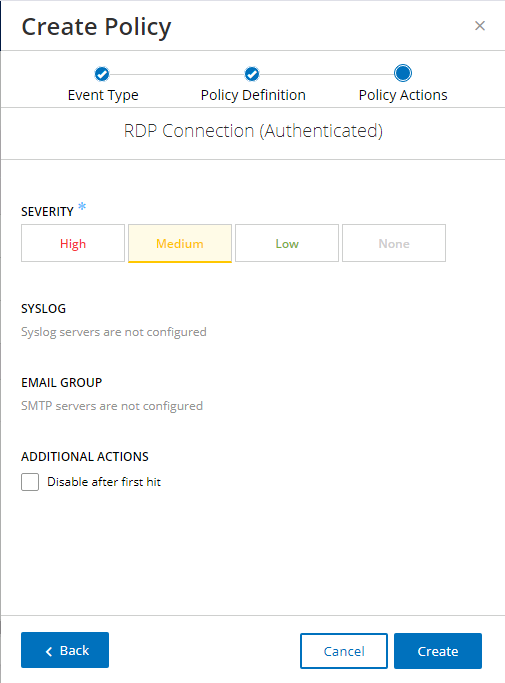

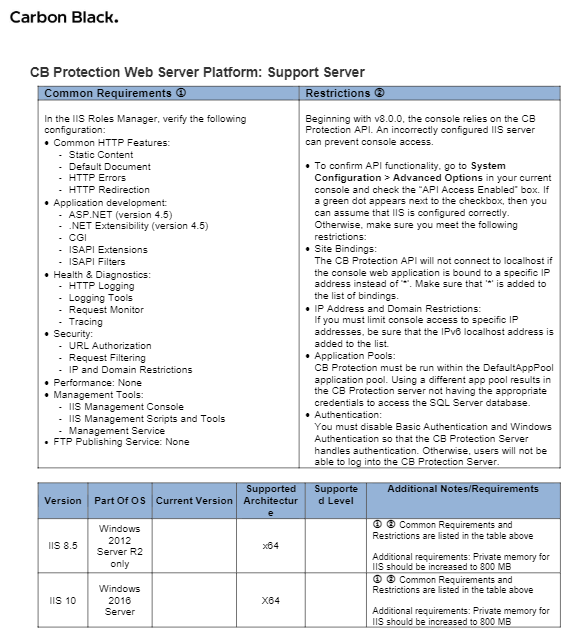

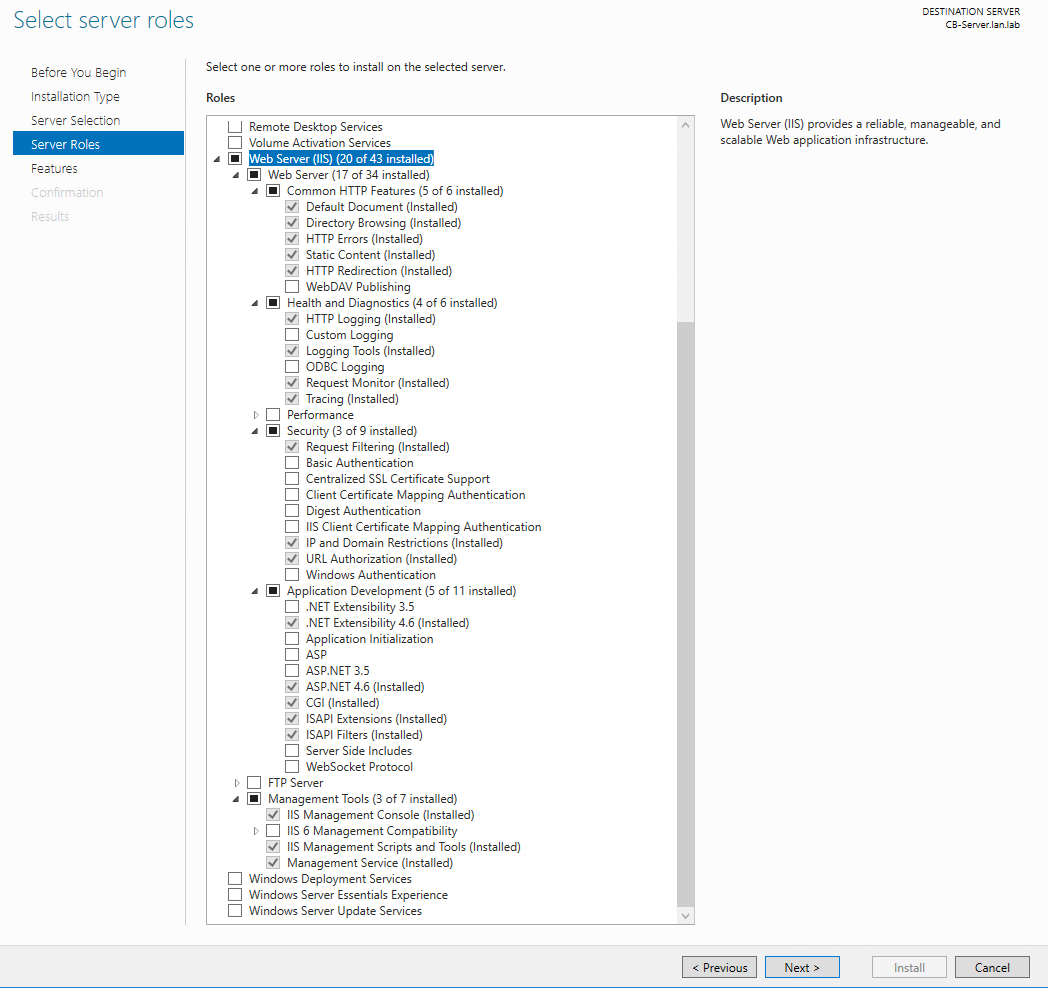

Figure 2‑74 Excerpt from Carbon Black Documentation on Support Server Requirements

Figure 2‑75 IIS Configuration for Carbon Black, Server Roles

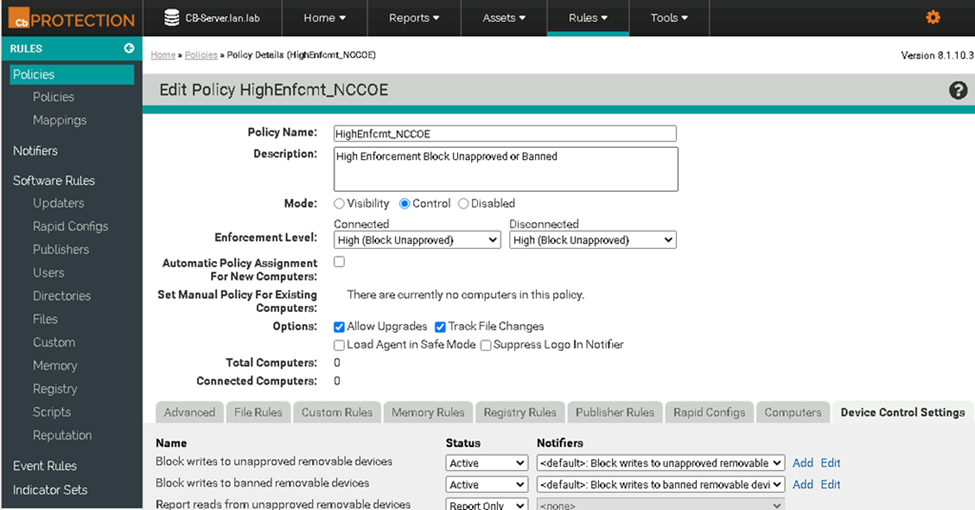

Figure 2‑76 Carbon Black Policy Edit

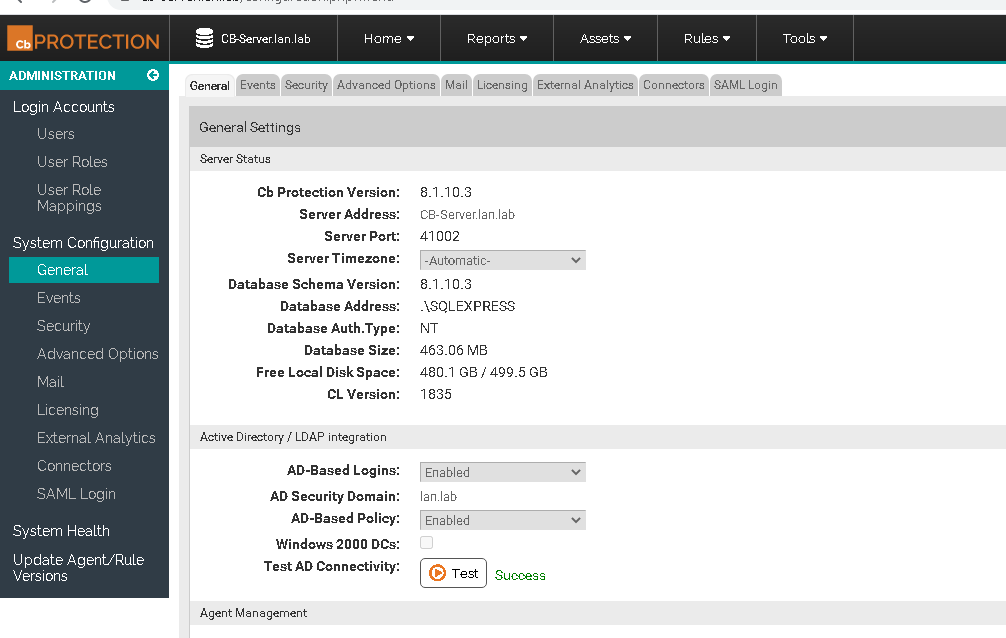

Figure 2‑77 Carbon Black App Control System Configuration

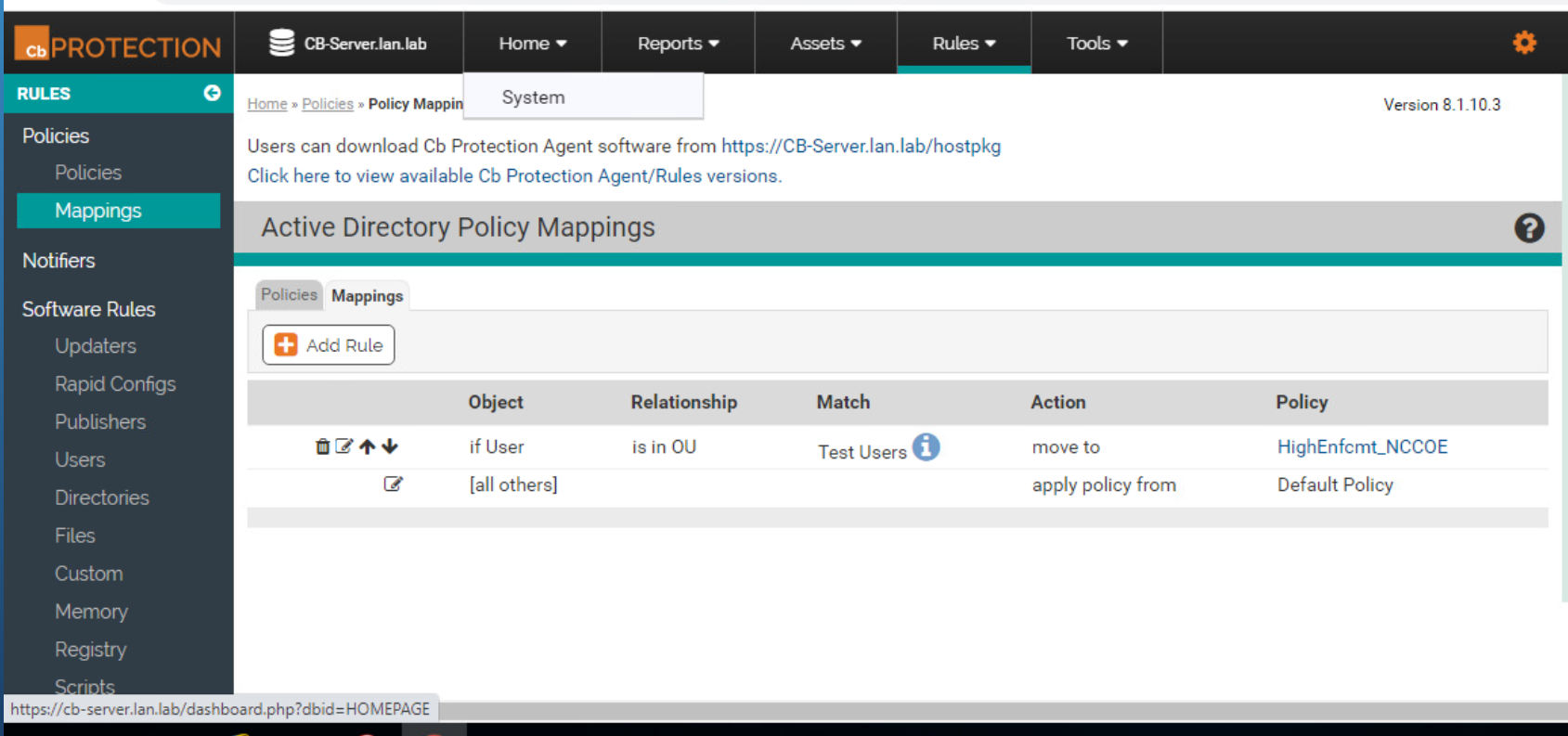

Figure 2‑78 Carbon Black App Control AD Policy Mappings

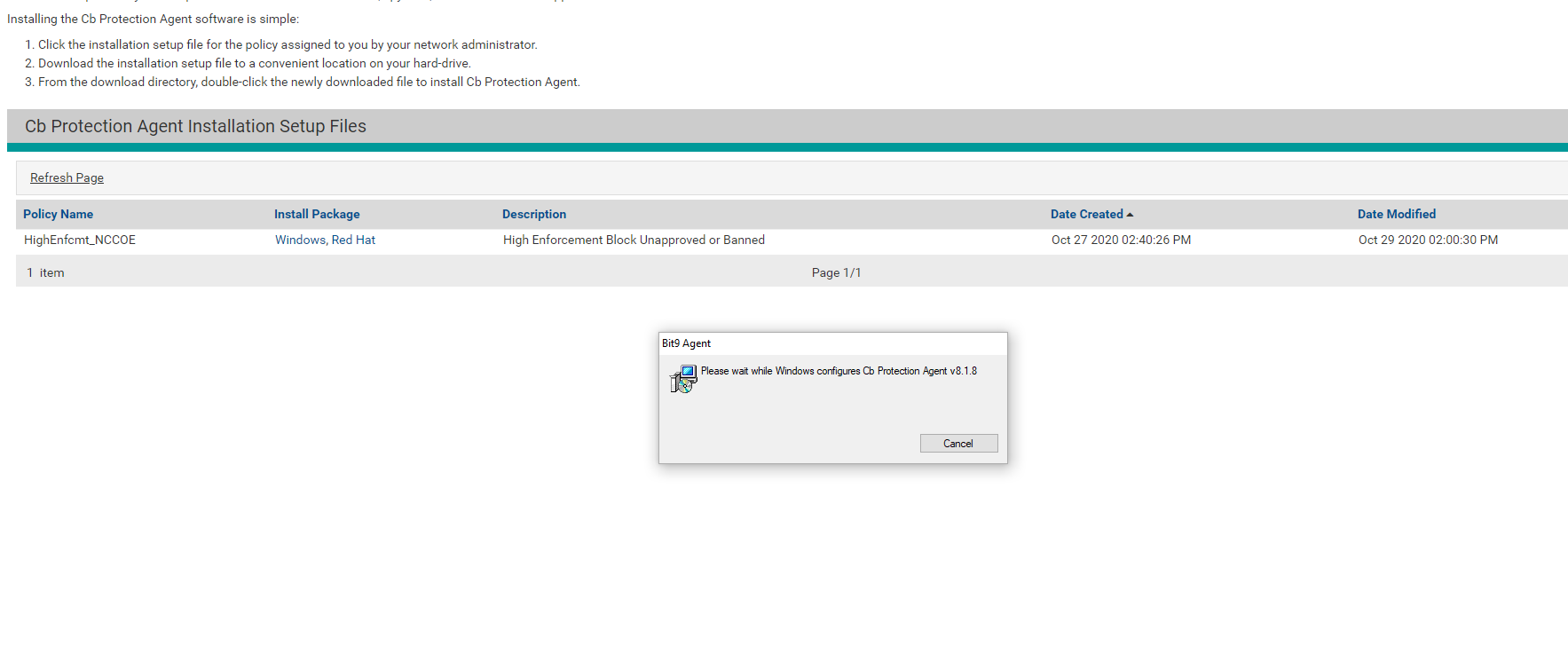

Figure 2‑79 Carbon Black Agent Download

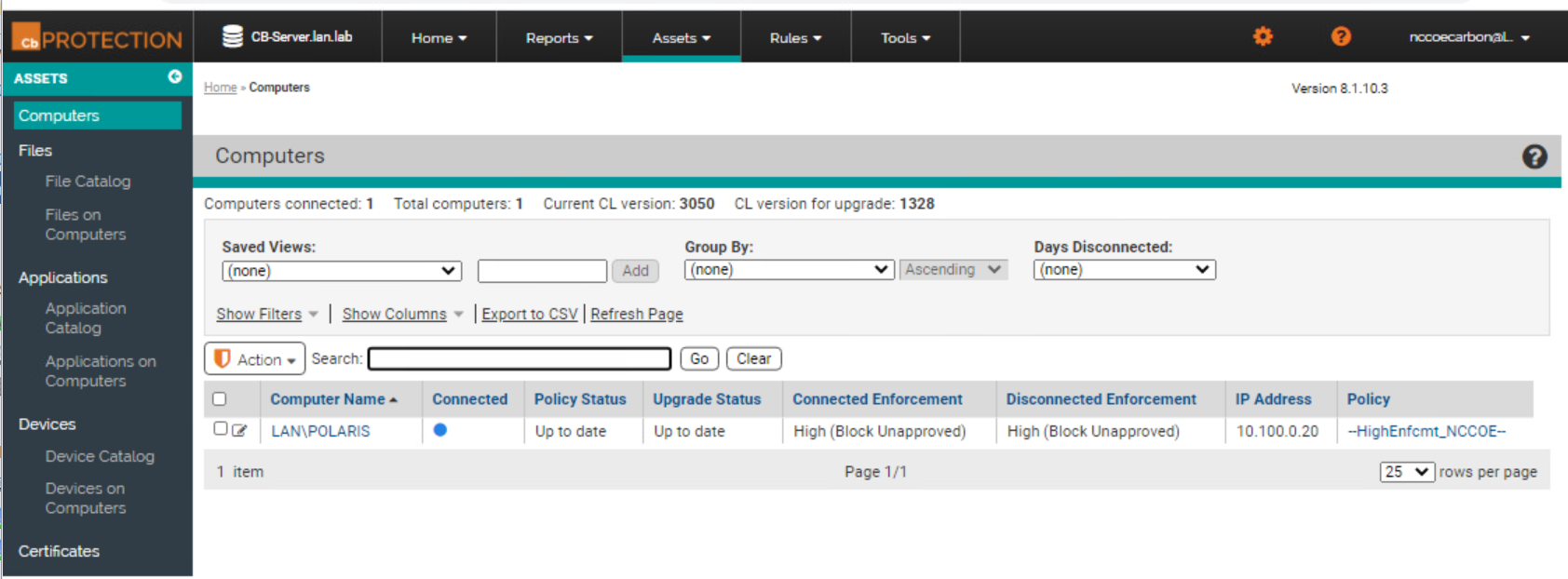

Figure 2‑80 Carbon Black App Control Computers

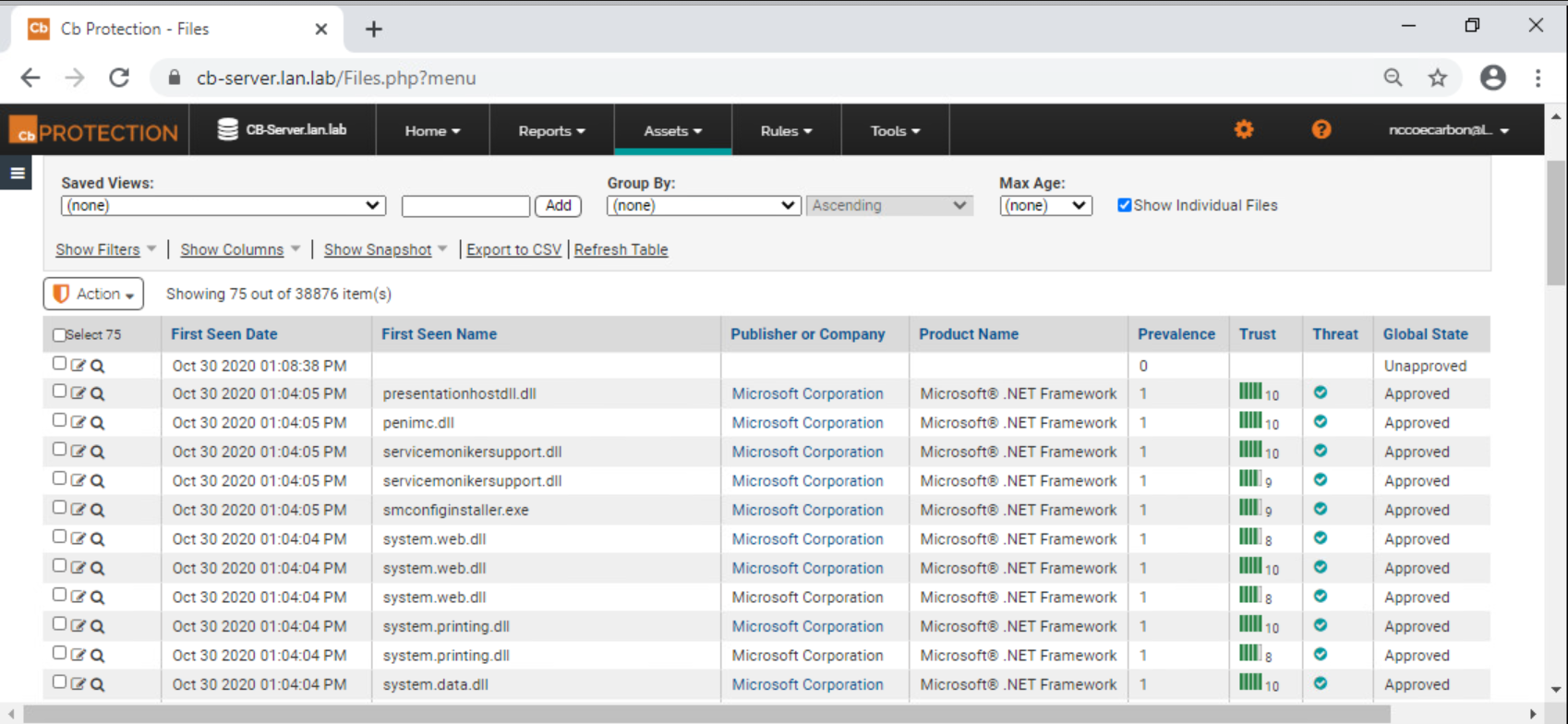

Figure 2‑81 Carbon Black App Control File Catalog

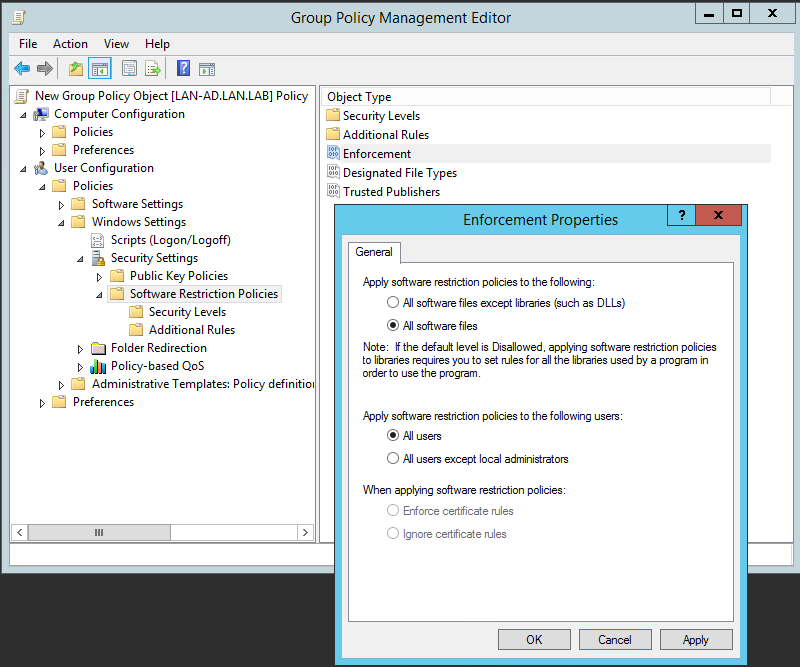

Figure 2‑82 Setting Enforcement Properties

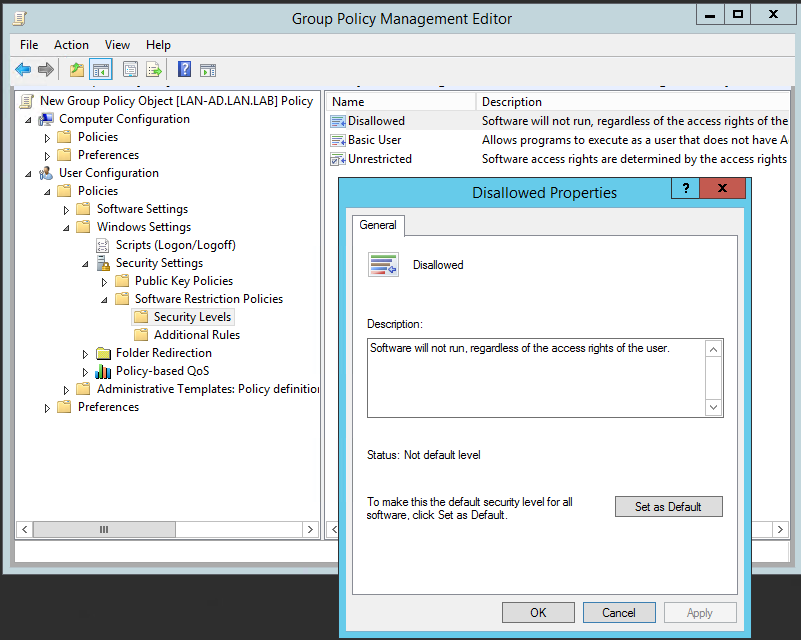

Figure 2‑83 Setting Security Level Default

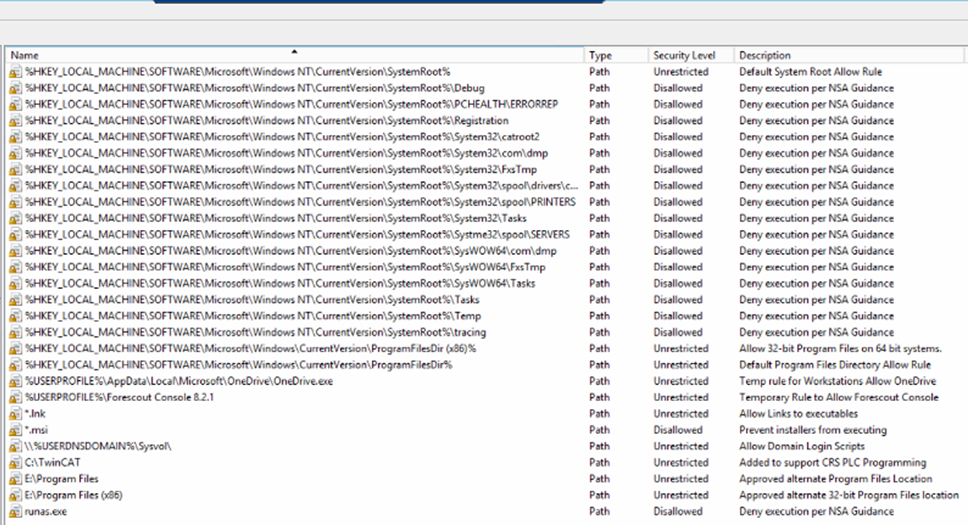

Figure 2‑84 Additional Rules Defined for Lab Environment

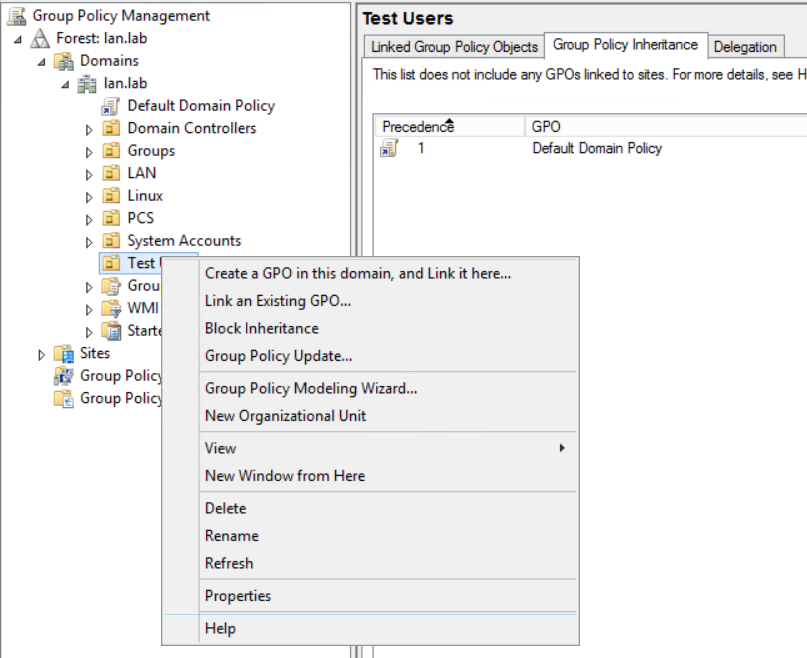

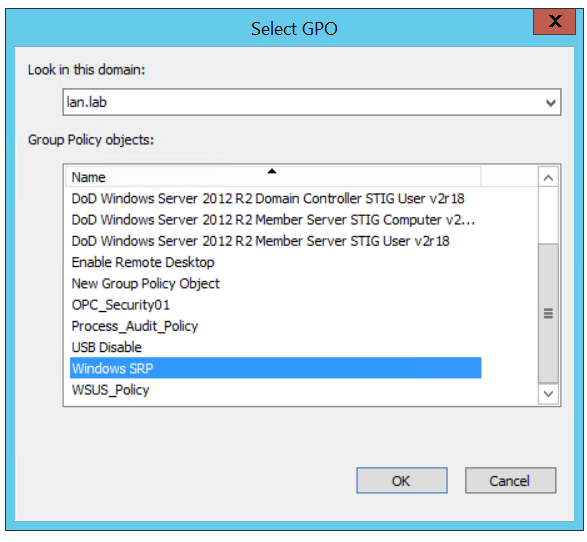

Figure 2‑85 Menu Options for Accessing the Link an Existing GPO Option

Figure 2‑86 Dialog Box for Selecting GPO to Link

Figure B-1 Build 1 Architecture Diagram

Figure B-2 Build 2 Architecture Diagram

Figure B-3 Build 3 Architecture Diagram

Figure B-4 Build 4 Architecture Diagram

List of Tables

Table 2‑2 Firewall Rules for Dispel

Table 2‑5 Forescout Deployment

Table 2‑6 eyeSight Agent Deployment

Table 2‑7 Firewall Rules for Forescout

Table 2‑8 GreenTec-USA WORMdrive and ForceField Deployment

Table 2‑9 Microsoft Azure Defender IoT Deployment

Table 2‑10 OSIsoft PI Domain Hosts Deployment

Table 2‑11 OSIsoft PI CRS Hosts Deployment

Table 2‑12 OSIsoft PI PCS Hosts Deployment

Table 2‑13 Security Onion Domain Hosts Deployment

Table 2‑14 Security Onion PCS Hosts Deployment

Table 2‑15 Security Onion CRS Hosts Deployment

Table 2‑16 ConsoleWorks Build 1 Deployment

Table 2‑17 ConsoleWorks Build 3 Deployment

Table 2‑18 ConsoleWorks Device Type List

Table 2‑19 ConsoleWorks PCS (Build 1) Devices

Table 2‑20 ConsoleWorks CRS (Build 3) Devices

Table 2‑21 ConsoleWorks PCS (Build 1) Graphical Connections

Table 2‑22 ConsoleWorks CRS (Build 3) Graphical Connections

Table 2‑23 ConsoleWorks PCS (Build 1) Console Connections

Table 2‑24 ConsoleWorks CRS (Build 3) Console Connections

Table 2‑25 Tenable.OT Appliance Details.

Table 2‑26 Firewall Rules for Tenable.OT

Table 2‑27 Carbon Black App Control Domain Hosts Deployment

Table 2‑28 Carbon Black App Control PCS Hosts Deployment

Table 2‑29 Carbon Black App Control CRS Hosts Deployment

Table 2‑30 Windows SRP Domain Servers

Table 2‑31 Windows SRP Build 2 Deployment

Table 2‑32 Windows SRP Build 3 Deployment

1 Introduction

This volume of the guide shows information technology (IT) professionals and security engineers how we implemented this example solution. We cover all the products employed in this reference design. We do not re-create the product manufacturers’ documentation, which is presumed to be widely available. Rather, this volume shows how we integrated the products in our environment.

Note: These are not comprehensive tutorials. There are many possible service and security configurations for these products that are out of scope for this reference design.

1.1 How to Use this Guide

This NIST Cybersecurity Practice Guide demonstrates a modular design and provides users with the information they need to replicate the described manufacturing industrial control system (ICS) security solutions, with a specific focus on information and system integrity. The reference design can be deployed in whole or in part.

This guide contains three volumes:

NIST SP 1800-10A: Executive Summary

NIST SP 1800-10B: Approach, Architecture, and Security Characteristics – what we built and why

NIST SP 1800-10C: How-To Guides – instructions for building the example solution (this document)

Depending on your role in your organization, you might use this guide in different ways:

Senior information technology (IT) executives, including chief information security and technology officers, will be interested in the Executive Summary, NIST SP 1800-10A, which describes the following topics:

challenges that enterprises face in ICS environments in the manufacturing sector

example solution built at the NCCoE

benefits of adopting the example solution

Technology or security program managers might share the Executive Summary, NIST SP 1800-10A, with their leadership to help them understand the importance of adopting a standards-based solution. Adopting such a solution can strengthen information and system integrity practices by leveraging capabilities that may already exist within the operating environment or by implementing new capabilities.

Technology or security program managers who are concerned with how to identify, understand, assess, and mitigate risk will be interested in NIST SP 1800-10B, which describes what we did and why. The following section will be of particular interest:

Section 3.4.4, Security Control Map, maps the security characteristics of this example solution to cybersecurity standards and best practices.

IT professionals who want to implement an approach like this will find this whole practice guide useful. You can use this How-To portion of the guide, NIST SP 1800-10C, to replicate all or parts of the build created in our lab. This How-To portion of the guide provides specific product installation, configuration, and integration instructions for implementing the example solution. We do not recreate the product manufacturers’ documentation, which is generally widely available. Rather, we show how we incorporated the products together in our environment to create an example solution.

This guide assumes that IT professionals have experience implementing security products within the enterprise. While we have used a suite of commercial products to address this challenge, this guide does not endorse any products. Your organization can adopt this solution or one that adheres to these guidelines in whole, or you can use this guide as a starting point for tailoring and implementing parts of this manufacturing ICS solution. Your organization’s security experts should identify the products that will best integrate with your existing tools and IT system infrastructure. We hope that you will seek products that are congruent with applicable standards and best practices. Section 3.5, Technologies, in NIST SP 1800-10B, lists the products that we used and maps them to the cybersecurity controls provided by this reference solution.

A NIST Cybersecurity Practice Guide does not describe “the” solution, but a possible solution. We seek feedback on its contents and welcome your input. Comments, suggestions, and success stories will improve subsequent versions of this guide. Please contribute your thoughts to manufacturing_nccoe@nist.gov.

1.2 Build Overview

The NCCoE partnered with NIST’s Engineering Laboratory (EL) to provide real-world scenarios that could occur in ICS in the manufacturing sector. This collaboration produced four unique builds: two within the Collaborative Robotics System (CRS) environment and two within the Process Control System (PCS) environment. For each build, the NCCoE and the EL performed eleven scenarios. This document provides step-by-step instructions for how each product was installed and configured in this lab environment. For more information on the two environments, refer to Section 4.5 in NIST SP 1800-10B. Additionally, Appendix B of this volume contains the four build architecture diagrams for reference.

1.3 Typographic Conventions

The following table presents typographic conventions used in this volume.

| Typeface/Symbol | Meaning | Example |
|---|---|---|
| Italics | file names and path names; references to documents that are not hyperlinks; new terms; and placeholders | For language use and style guidance, see the NCCoE Style Guide. |
| Bold | names of menus, options, command buttons, and fields | Choose File > Edit. |
| Monospace | command-line input, onscreen computer output, sample code examples, and status codes | |
| Monospace (block) | multi-line input, onscreen computer output, sample code examples, and status codes | % mkdir -v nccoe_projects<br>mkdir: created directory 'nccoe_projects' |
| blue text | link to other parts of the document, a web URL, or an email address | All publications from NIST’s NCCoE are available at https://www.nccoe.nist.gov. |

1.4 Logical Architecture Summary

The security mechanisms and technologies were integrated into the existing NIST Cybersecurity for Smart Manufacturing Systems (CSMS) lab environment. This cybersecurity performance testbed for ICS comprises the PCS and CRS environments along with additional networking capabilities to emulate common manufacturing environments. For more information, see An Industrial Control System Cybersecurity Performance Testbed, NISTIR 8089, http://nvlpubs.nist.gov/nistpubs/ir/2015/NIST.IR.8089.pdf.

Typically, manufacturing organizations have unique cyber-ecosystems and specific needs for their operations. To demonstrate the modularity and interoperability of the provided solutions, this project used available Cooperative Research and Development Agreement (CRADA) partner technologies to assemble four “builds” deployed across both the PCS and CRS. Additionally, to increase the diversity of technologies between builds, two of the builds also used open source solutions (Security Onion and Wazuh), native operating system features (Windows Software Restriction Policies [SRP]), and a Cisco Adaptive Security Appliance (ASA) device configured with the AnyConnect virtual private network (VPN) client.

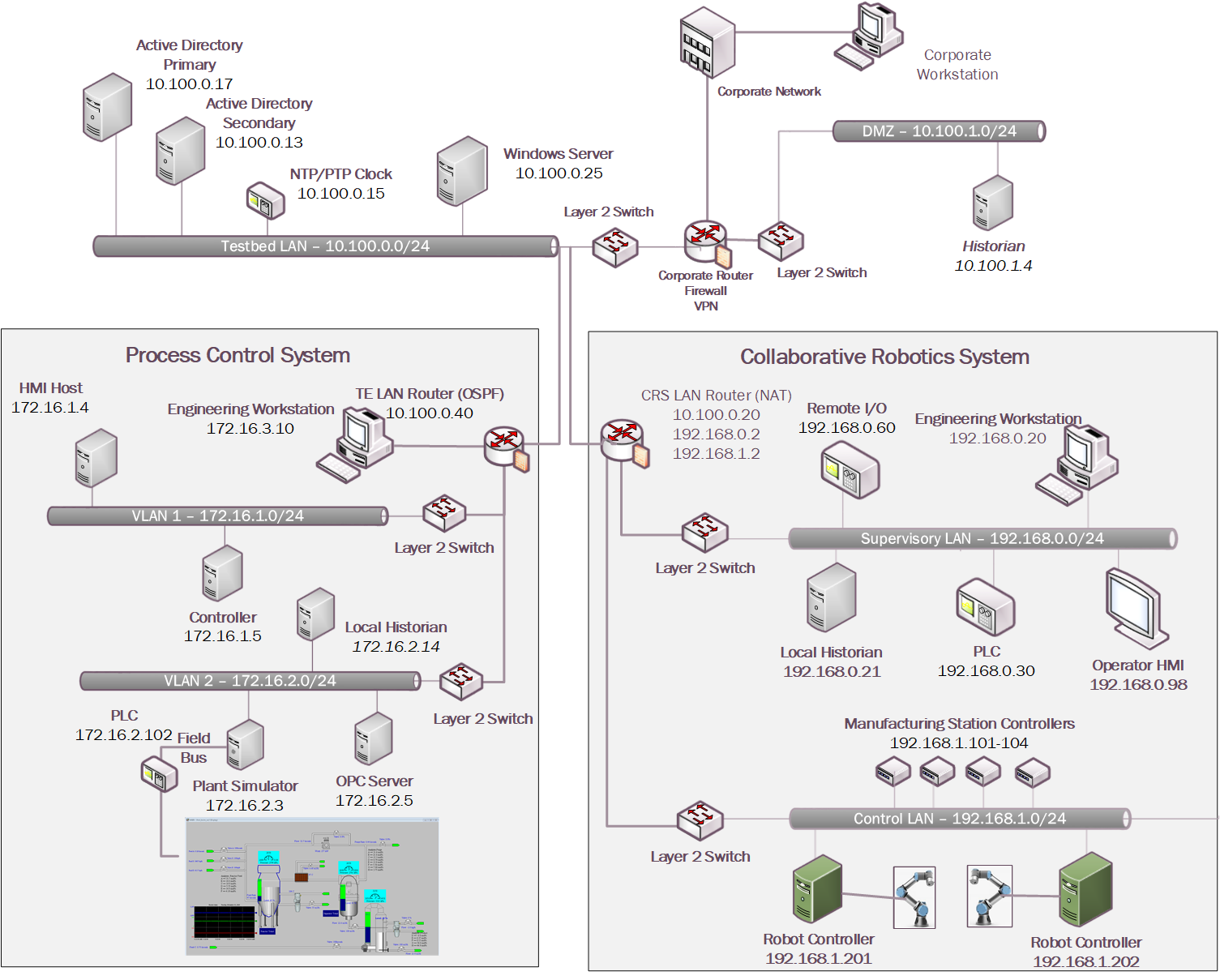

Figure 1‑1 depicts a high-level architecture for the demonstration environment consisting of a Testbed Local Area Network (LAN), a demilitarized zone (DMZ), the PCS, and the CRS. The environment utilizes a combination of physical and virtual systems and maintains a local network time protocol (NTP) server for time synchronization. Additionally, the environment utilizes virtualized Active Directory (AD) servers for domain services. The tools used to support information and system integrity are deployed and integrated in the DMZ, Testbed LAN, PCS, and CRS per vendor recommendations and standard practices as described in the detailed sections for each build.

Figure 1‑1: CSMS Network Architecture

In summary, there are six networks within the CSMS architecture:

Testbed LAN: This network is where most of the collaborators’ products are installed. This LAN has access to the PCS and CRS environments. Other systems, such as AD, an NTP server, and a Windows server, are also located on this LAN. The Testbed LAN has three gateways to other network segments: 10.100.0.1 to reach the DMZ and the corporate network, 10.100.0.20 as a network address translation (NAT) interface to the CRS environment, and 10.100.0.40 as the gateway to the PCS environment.

DMZ: A demilitarized zone that separates the corporate network from the operational technology (OT) network. Many of the collaborators’ products are also installed in the DMZ. The DMZ is used across the PCS and CRS environments.

PCS Virtual Local Area Network (VLAN) 1: This is the operations LAN within the PCS environment. This LAN simulates a central control room environment. The gateway interface for this network segment is 172.16.1.1.

PCS VLAN 2: This is the supervisory LAN within the PCS environment. This LAN simulates the process operation/manufacturing environment, which consists of the operating plant, programmable logic controllers (PLCs), an object linking and embedding for process control (OPC) server, and a data historian. The gateway interface for this network segment is 172.16.2.1.

CRS Supervisory LAN: This LAN is within the CRS environment. The historian, PLCs, operating human machine interface (HMI), engineering workstation, and remote input/output devices are connected to this network. The gateway interface for this network segment is 192.168.0.2.

CRS Control LAN: This LAN is within the CRS environment. The robot controllers and manufacturing station controllers are connected to this network. The gateway interface for this network segment is 192.168.1.2.

The testbed networks used static IPv4 addresses exclusively, and the subnet masks were set to 255.255.255.0. No IPv6 addresses were used. This setup is consistent with industry practice. Specific Internet Protocol (IP) addresses are listed for each component in the following sections.
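The addressing plan described above can be captured in a few lines of Python using the standard `ipaddress` module, which is one way to sanity-check a replicated testbed before deploying tools into it. This is a sketch under stated assumptions: each subnet is inferred from the listed gateway and the 255.255.255.0 mask, and the DMZ prefix (10.100.1.0/24) and its gateway (10.100.1.1) are taken from the Wicket ESI configuration in Section 2.1.2 rather than from the network list. The names `NETWORKS`, `validate_plan`, and `network_for` are illustrative only.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Six CSMS networks: name -> (subnet, gateway addresses on that subnet).
# Assumption: subnets are inferred from the gateways and the /24 mask; the DMZ
# values come from the Wicket ESI interface and netcutter.cfg settings.
NETWORKS: Dict[str, Tuple[str, List[str]]] = {
    "Testbed LAN": ("10.100.0.0/24", ["10.100.0.1", "10.100.0.20", "10.100.0.40"]),
    "DMZ": ("10.100.1.0/24", ["10.100.1.1"]),
    "PCS VLAN 1": ("172.16.1.0/24", ["172.16.1.1"]),
    "PCS VLAN 2": ("172.16.2.0/24", ["172.16.2.1"]),
    "CRS Supervisory LAN": ("192.168.0.0/24", ["192.168.0.2"]),
    "CRS Control LAN": ("192.168.1.0/24", ["192.168.1.2"]),
}


def validate_plan() -> None:
    """Raise ValueError if a mask is not /24, a gateway lies outside its subnet, or subnets overlap."""
    nets = {name: ipaddress.IPv4Network(prefix) for name, (prefix, _) in NETWORKS.items()}
    for name, net in nets.items():
        if net.prefixlen != 24:
            raise ValueError(f"{name}: expected a 255.255.255.0 mask, got {net.netmask}")
        for gateway in NETWORKS[name][1]:
            if ipaddress.IPv4Address(gateway) not in net:
                raise ValueError(f"{name}: gateway {gateway} is outside {net}")
    names = list(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                raise ValueError(f"{first} overlaps {second}")


def network_for(address: str) -> Optional[str]:
    """Return the name of the CSMS network containing an IPv4 address, or None."""
    ip = ipaddress.IPv4Address(address)
    for name, (prefix, _) in NETWORKS.items():
        if ip in ipaddress.IPv4Network(prefix):
            return name
    return None
```

For example, `network_for("10.100.1.61")` returns `"DMZ"`, matching the Wicket ESI LAN interface listed in Table 2‑1, while an address outside the six subnets returns `None`.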

For an in-depth view of the PCS and CRS build architectures, see Volume B of this practice guide, Section 4.3, Process Control System, and Section 4.4, Collaborative Robotics System.

2 Product Installation Guides

This section of the practice guide contains detailed instructions for installing and configuring all the products used to build the example solutions.

2.1 Dispel Remote Access

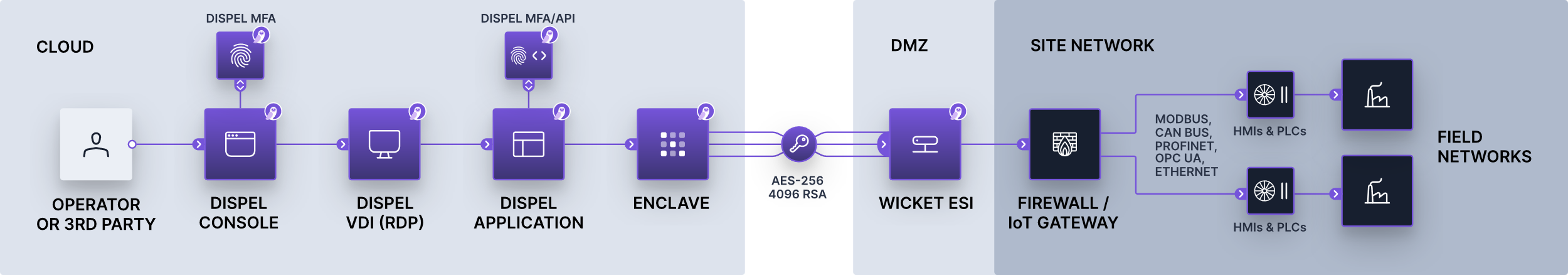

Dispel is a remote access tool that provides secure remote access to industrial networks in OT environments. Implemented in Build 2 and Build 4, Dispel uses cloud-based virtual desktop interfaces (VDIs) that traverse a cloud-based Enclave to reach a Wicket ESI device deployed within the local OT network. In these builds, Dispel provides both user authentication and authorization and remote access.

Virtual Desktop Interfaces (VDIs)

VDIs are Virtual Machines (VMs) that reside in the cloud and allow users to connect using Remote Desktop Protocol (RDP). The VDIs establish a secure connection to the Wicket ESI located in the OT network to provide network access to the OT devices.

Enclave

Enclaves are single-tenanted, colorless core, moving target defense (MTD) networks. Enclaves are composed of VMs that act as traffic nodes. To create a shifting target profile, these VMs are steadily replaced by new VMs launched on different hypervisors, in different geographic regions, and/or on altogether different public or private clouds. In the case of Builds 2 and 4, the Enclaves were launched exclusively on public clouds. To provide a static set of IP addresses throughout the builds, the MTD characteristic was disabled.
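The moving-target behavior, and why it had to be disabled to obtain static addressing, can be illustrated with a toy model in which one traffic node at a time is retired and replaced by a new VM, with a new address, in a different region. Everything in this sketch is hypothetical (the cloud and region names, the one-node-per-step schedule, and addresses drawn from the 198.51.100.0/24 documentation range); it illustrates the general moving target defense idea and is not a description of how Dispel schedules or places Enclave nodes.

```python
import ipaddress
import itertools
import random
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical placement options; real Enclaves may span hypervisors, regions, and clouds.
CLOUDS = ["cloud-a", "cloud-b"]
REGIONS = ["region-1", "region-2", "region-3"]


@dataclass(frozen=True)
class Node:
    ip: str
    cloud: str
    region: str


class ToyEnclave:
    """Toy pool of traffic-node VMs; not Dispel's implementation."""

    def __init__(self, size: int, mtd_enabled: bool, seed: int = 0) -> None:
        self._rng = random.Random(seed)
        # Every newly launched VM receives a fresh, never-reused address.
        self._addresses = (
            str(ipaddress.IPv4Address("198.51.100.0") + i) for i in itertools.count(1)
        )
        self.mtd_enabled = mtd_enabled
        self.nodes: List[Node] = [self._launch() for _ in range(size)]

    def _launch(self, avoid_region: Optional[str] = None) -> Node:
        regions = [r for r in REGIONS if r != avoid_region]
        return Node(next(self._addresses), self._rng.choice(CLOUDS), self._rng.choice(regions))

    def tick(self) -> None:
        """Replace one node with a new VM in a different region, if MTD is enabled."""
        if not self.mtd_enabled:
            return  # static pool: node addresses never change
        index = self._rng.randrange(len(self.nodes))
        retired = self.nodes[index]
        self.nodes[index] = self._launch(avoid_region=retired.region)

    def addresses(self) -> Set[str]:
        return {node.ip for node in self.nodes}
```

With `mtd_enabled=False` the pool never changes, which mirrors the lab choice of a static set of IP addresses at the cost of giving up the shifting target profile.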

Wicket ESI

Wicket ESIs are on-premises components, shown in Figure 2‑1, that allow users to connect to the OT network remotely. These devices establish encrypted connections from the local OT network up to an Enclave, which in turn is connected to the VDI, allowing a remote user to access the OT devices.

Additional information is available in Remote Access for Industrial Control Systems from Dispel.io at: https://s3.amazonaws.com/downloads.dispel.io/resources/One+Pager/dispel-ics-brochure_20190529.pdf

Figure 2‑1 Dispel High-level Implementation, from Remote Access for ICS

2.1.1 Host and Network Configuration

The Wicket ESI is connected to two ports within the DMZ: one supporting outbound communications to the Dispel Enclave (labeled “WAN”) and one supporting communication through the local firewall to the ICS environment (labeled “LAN”). Table 2‑1 lists the device and network settings for the Wicket ESI hardware, along with the cloud-hosted Enclave and VDI components, used to support Build 2 (Figure B-2) and Build 4 (Figure B-4).

Table 2‑1 Dispel Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| Dispel Wicket ESI | ONLOGIC, ML340G-51 | Ubuntu 16.04 | Intel i5-6300U | 16GB | 120GB | DMZ: Wicket WAN interface 10.100.1.60; Wicket LAN interface 10.100.1.61 |
| Dispel Enclave | Cloud Virtual Machines | Ubuntu 16.04 | Variable | Variable | Variable | N/A |
| Dispel VDI | Cloud Virtual Machine | Windows Server 2016 | Intel Xeon Platinum 8171M | 8GB | 120GB | N/A |

2.1.2 Installation

Installation involves establishing an account on the Dispel cloud infrastructure and deploying the preconfigured Wicket ESI device within the OT environment. Detailed installation information, customized to the end user’s deployment, is provided by Dispel.

After the WAN and LAN network cables were connected, the Wicket ESI was configured locally by attaching a monitor, keyboard, and mouse to the unit’s VGA and USB ports. After logging in to the unit with the credentials provided by Dispel, we configured the network connections using the following procedure (note: these procedures were executed with root privileges; they can also be performed using sudo).

Update the network interfaces with the IP configuration information:

#> vi /etc/network/interfaces

    source-directory /etc/network/interfaces.d

    # LAN
    auto enp4s0
    allow-hotplug enp4s0
    iface enp4s0 inet static
        address 10.100.1.61
        netmask 255.255.255.0
        #gateway
        up route add -net 10.100.0.0 netmask 255.255.255.0 gw 10.100.1.1 dev enp4s0
        up route add -net 172.16.0.0 netmask 255.255.252.0 gw 10.100.1.1 dev enp4s0

    # WAN
    auto enp0s31f6
    allow-hotplug enp0s31f6
    iface enp0s31f6 inet static
        address 10.100.1.60
        netmask 255.255.255.0
        gateway 10.100.1.1
        dns-nameservers <ip address>
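The PCS route uses the netmask 255.255.252.0, a /22 that spans 172.16.0.0 through 172.16.3.255 and therefore covers both PCS VLAN 1 (172.16.1.0/24) and PCS VLAN 2 (172.16.2.0/24) with a single entry. A short Python sketch using the standard `ipaddress` module, which accepts dotted netmasks directly, makes that conversion explicit. The regular expression assumes the exact `up route add -net NET netmask MASK gw GW dev DEV` form used in the file above; it is an illustration, not a general parser for the interfaces(5) format, and `parse_static_routes` is a hypothetical helper.

```python
import ipaddress
import re
from typing import List, Tuple

# The two "up route add" lines from the LAN (enp4s0) stanza.
INTERFACES_EXCERPT = """
up route add -net 10.100.0.0 netmask 255.255.255.0 gw 10.100.1.1 dev enp4s0
up route add -net 172.16.0.0 netmask 255.255.252.0 gw 10.100.1.1 dev enp4s0
"""

ROUTE_RE = re.compile(
    r"up route add -net (?P<net>\S+) netmask (?P<mask>\S+) gw (?P<gw>\S+) dev (?P<dev>\S+)"
)


def parse_static_routes(text: str) -> List[Tuple[ipaddress.IPv4Network, str, str]]:
    """Return (destination network, gateway, device) for each 'up route add' line."""
    routes = []
    for match in ROUTE_RE.finditer(text):
        # IPv4Network accepts "address/dotted-netmask" and normalizes it to a prefix length.
        network = ipaddress.IPv4Network(f"{match['net']}/{match['mask']}")
        routes.append((network, match["gw"], match["dev"]))
    return routes
```

For `INTERFACES_EXCERPT`, the function returns 10.100.0.0/24 and 172.16.0.0/22, both via 10.100.1.1 on enp4s0, and `ipaddress.IPv4Network("172.16.2.0/24").subnet_of(...)` against the second route is `True`.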

Update the Wicket ESI netcutter.cfg file to include the local subnet information (toward the bottom of the file):

#> vi /home/ubuntu/wicket/netcutter.cfg

    ...
    subnets = (
        {
            name = "Default";
            value = "10.100.0.0/24";
            advertise = "false";
        },
        {
            name = "PCS";
            value = "172.16.0.0/22";
            advertise = "false";
        },
        {
            name = "DMZ";
            value = "10.100.1.0/24";
            advertise = "false";
        }
    );
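The two files have to agree: every subnet listed in netcutter.cfg should be reachable from the Wicket ESI, either on a directly connected interface or through one of the static routes in /etc/network/interfaces. A minimal sanity check is sketched below; it hardcodes the values from both files, and the check itself reflects our assumption about what is worth verifying before restarting the service, not a tool or requirement from Dispel. `uncovered_subnets` is a hypothetical helper name.

```python
import ipaddress
from typing import Dict, List

# Directly connected networks, from the interface addresses and netmasks.
CONNECTED = [
    ipaddress.IPv4Interface("10.100.1.61/255.255.255.0").network,  # enp4s0 (LAN)
    ipaddress.IPv4Interface("10.100.1.60/255.255.255.0").network,  # enp0s31f6 (WAN)
]
# Destinations of the two "up route add" entries (gateway 10.100.1.1).
STATIC_ROUTES = [
    ipaddress.IPv4Network("10.100.0.0/255.255.255.0"),
    ipaddress.IPv4Network("172.16.0.0/255.255.252.0"),
]
# Subnets listed in netcutter.cfg.
NETCUTTER_SUBNETS: Dict[str, str] = {
    "Default": "10.100.0.0/24",
    "PCS": "172.16.0.0/22",
    "DMZ": "10.100.1.0/24",
}


def uncovered_subnets(subnets: Dict[str, str]) -> List[str]:
    """Return names of subnets that are neither directly connected nor inside a static route."""
    reachable = CONNECTED + STATIC_ROUTES
    return [
        name
        for name, prefix in subnets.items()
        if not any(ipaddress.IPv4Network(prefix).subnet_of(net) for net in reachable)
    ]
```

For the lab values, `uncovered_subnets(NETCUTTER_SUBNETS)` returns an empty list; a subnet added to netcutter.cfg without a matching route would be reported by name.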

Restart the Wicket services with the following command:

#> service wicket restart

Check the log for errors and test connectivity to the Dispel environment (note: IP address will be account specific):

#> tail -f /home/ubuntu/wicket/wicket.log
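The Wicket log remains the authoritative source. A quick TCP reachability test from the appliance can still help separate firewall problems from service problems. The following is a minimal Python sketch built on the standard `socket.create_connection` call. The host and port to test are account specific: the Dispel addresses in Table 2‑2 apply to this lab only. A TCP test also says nothing about the UDP side of port 1194.

```python
import socket


def tcp_reachable(host, port, timeout=3.0):
    """Return True if a TCP handshake to host:port completes within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


# Usage (hypothetical placeholder; substitute the account-specific address):
# tcp_reachable("<Entry Node IP>", 443)
```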

2.1.3 Configuration¶

With the Wicket ESI connected to the lab environment, the solution may be configured by establishing an account and configuring the cloud infrastructure, configuring the corporate router/firewall to allow authorized connections to and from the Wicket ESI, and configuring the VDI environment to support the remote access to the ICS environments.

For full documentation and configuration instructions, see the Dispel documentation at https://intercom.help/dispel/en/.

Dispel created an organization named “NCCOE” with an Enclave named “NCCoE-Manufacturing” in its pre-production staging environment. A single “user” account, nccoe-m-user@dispel.io, was created for accessing the cloud infrastructure environment. Organizations will need to plan for multiple accounts to support the “owner” and “admin” roles in addition to the “user” role. The “owner” and “admin” roles are for monitoring and managing the cloud infrastructure and are separate from the user accounts used to log in to the VDI environment.

The staging environment was configured without the Dispel multifactor authentication (MFA) settings because personal identity verification (PIV) cards were not available as a supported mechanism, and the lab environment did not support authenticator applications or security keys. However, MFA is an important control and is strongly encouraged when planning an implementation. To reduce the risk of operating without MFA in this effort, NCCoE worked with Dispel to limit access to the cloud infrastructure and the VDI instances to approved source IP addresses only. Restricting access to the cloud infrastructure and VDI instances in this way is also encouraged to reduce the risks associated with the internet-accessible web and RDP services.

Configure Firewall Settings:

The Wicket ESI needs access to the internet and to the internal OT environment. Table 2‑2 below describes the firewall rules implemented on the corporate router/firewall for communications on the internet-facing firewall and internal network zone firewall.

Table 2‑2 Firewall Rules for Dispel

| Rule Type | Source | Destination | Protocol:Port(s) | Purpose |
|---|---|---|---|---|
| Allow | 10.100.1.60 | IdAM: 159.65.111.193; Entry Node: 52.162.177.202 | TCP/UDP:1194; HTTPS | Outbound Secure Web to Dispel Environment on the Internet |
| Allow | 10.100.1.61 | 10.100.1.0/24 | ICMP; TCP/UDP: RDP, SSH, HTTP/HTTPS, SMB, NTP | PLC Controller Scans |
| Allow | 10.100.1.61 | Security Onion 10.100.0.26 | TCP:1515; UDP:1514 | Build 2: Communication between Wazuh Agent and the server |
| Allow | 10.100.1.61 | 172.16.0.0/22 | TCP: RDP, HTTP/HTTPS | Build 2: Authorized Inbound Communications to PCS Environment |
| Allow | 10.100.1.61 | Carbon Black 10.100.0.52 | TCP:41002 | Build 4: Communication port used between Carbon Black Agent and the server |
| Allow | 10.100.1.61 | CRS NAT 10.100.0.20 | TCP:48898; UDP:48899 | Build 4: Inbound Automation Device Specification (ADS) Protocol for Communication with PLC Device |

Notes:

Dispel’s recommended rule for allowing secure shell (SSH) for installation and remote support from the Dispel environment was not enabled for this effort.

The rules implemented restrict these outbound ports to Enclave-specific IP addresses.

The Enclave’s moving target defense (MTD) characteristics were disabled to keep the Enclave’s IP addresses static for the duration of the project.
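Table 2‑2 is effectively an allow-list, so it can be encoded as data and queried when reviewing a proposed flow. The following Python sketch does that. Several choices in it are assumptions, not a description of the corporate firewall's rule syntax: named services map to their well-known ports (RDP 3389, SSH 22, HTTP 80, HTTPS 443, SMB 445, NTP 123), HTTPS is treated as TCP only, anything unmatched is denied by default, and ICMP is omitted.

```python
import ipaddress

# Well-known ports assumed for the service names used in Table 2-2.
RDP, SSH, HTTP, HTTPS, SMB, NTP = 3389, 22, 80, 443, 445, 123


def svc(protocols, *ports):
    """Expand svc("tcp/udp", 1194) into {("tcp", 1194), ("udp", 1194)}."""
    return {(proto, port) for proto in protocols.split("/") for port in ports}


# (source, destination, allowed (protocol, port) pairs, purpose); ICMP omitted.
RULES = [
    ("10.100.1.60", "159.65.111.193", svc("tcp/udp", 1194) | svc("tcp", HTTPS), "Dispel IdAM"),
    ("10.100.1.60", "52.162.177.202", svc("tcp/udp", 1194) | svc("tcp", HTTPS), "Dispel Entry Node"),
    ("10.100.1.61", "10.100.1.0/24",
     svc("tcp/udp", RDP, SSH, HTTP, HTTPS, SMB, NTP), "PLC Controller Scans"),
    ("10.100.1.61", "10.100.0.26", svc("tcp", 1515) | svc("udp", 1514), "Build 2: Wazuh"),
    ("10.100.1.61", "172.16.0.0/22", svc("tcp", RDP, HTTP, HTTPS), "Build 2: PCS"),
    ("10.100.1.61", "10.100.0.52", svc("tcp", 41002), "Build 4: Carbon Black"),
    ("10.100.1.61", "10.100.0.20", svc("tcp", 48898) | svc("udp", 48899), "Build 4: ADS"),
]


def permitted_by(src, dst, proto, port, rules=RULES):
    """Return the purpose of the first rule allowing the flow, or None (default deny)."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule_src, rule_dst, services, purpose in rules:
        if (s in ipaddress.ip_network(rule_src)
                and d in ipaddress.ip_network(rule_dst)
                and (proto, port) in services):
            return purpose
    return None


print(permitted_by("10.100.1.61", "172.16.2.61", "tcp", RDP))  # Build 2: PCS
print(permitted_by("10.100.1.61", "172.16.2.61", "tcp", SMB))  # None
```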

Configure Virtual Desktop Infrastructure (VDI):

The VDI instance is a fully functional workstation/server within the cloud environment. From the VDI instance, authorized users establish a VPN tunnel to the Wicket ESI within the OT environment and then have access to the environment as permitted by the device and firewall configurations. In this effort, NCCoE implemented the VDI configuration to support Build 2 and Build 4. The configuration supports the OT environment’s jump server configuration (allowing RDP and SSH access to systems within the PCS and CRS environments) and a remote engineering workstation (configuring the VDI with the tools needed to support the ICS environment). The configuration for each build is detailed in the following sections:

Build 2: PCS Configuration

For the PCS setup, the Dispel VDI was used in a jump server configuration. No additional software was installed. The firewall and Wicket ESI configuration allowed RDP and SSH connections to the PCS ICS environment. Additionally, RDP, SSH, and HTTP/HTTPS access to the Cybersecurity LAN environment was authorized for the remote sessions as defined in the previously described firewall settings, Table 2‑2.

Build 4: CRS Configuration

For the CRS setup, the Dispel VDI was configured as a remote engineering workstation. To support the Beckhoff PLC, the TwinCAT 3 XAE software was installed on the VDI, and the network drive containing the PLC code (provided by the GreenTec-USA solution and hosted in the DMZ environment) was mapped to the VDI. Additionally, RDP, SSH, and HTTP/HTTPS access to the Cybersecurity LAN environment was authorized for the remote sessions as defined in the previously described firewall settings, Table 2‑2.

For the interaction with the Beckhoff PLC, the TwinCAT 3 XAE software (TC31-FULL-Setup.3.1.4024.10.exe) was installed on the VDI.

The Dispel VPN connection does not allow split tunneling, so once the VPN connection is established from the VDI to the Wicket ESI, the VDI is disconnected from the internet. Therefore, software was downloaded and installed before connecting to the Wicket ESI.

Due to the NAT configuration of the RUGGEDCOM RX1510 router between the Cybersecurity LAN and the CRS environment, port forwarding rules were configured to allow external traffic to reach the Beckhoff CX9020 PLC.

The following rules (Table 2‑3) were created in the RX1510 firewall to enable destination network address translation (DNAT) from the firewall WAN interface (10.100.0.20) to the CRS PLC (192.168.0.30).

Table 2‑3 Firewall Rules

| Rule Type | Source | Destination | Destination Port(s) | Purpose |
|---|---|---|---|---|
| DNAT | 10.100.1.61 | 192.168.0.30 | UDP:48899 | DNAT (10.100.0.20) - Beckhoff ADS discovery protocol used by the TwinCAT 3 software to discover ADS devices. |
| DNAT | 10.100.1.61 | 192.168.0.30 | TCP:48898 | DNAT (10.100.0.20) - Beckhoff ADS protocol used by the TwinCAT 3 software to communicate with the PLC. |
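DNAT rewrites the destination of matching packets that arrive on the RX1510 WAN address before they are routed into the CRS network. The Python sketch below models the two rules in Table 2‑3 as a lookup on (source, protocol, port). It only illustrates the address translation: it is not RUGGEDCOM configuration syntax, and `translate` is a hypothetical helper.

```python
from typing import NamedTuple, Optional


class Flow(NamedTuple):
    src: str
    dst: str
    proto: str
    port: int


WAN_ADDRESS = "10.100.0.20"   # RX1510 WAN interface
PLC_ADDRESS = "192.168.0.30"  # Beckhoff CX9020 in the CRS network

# (source, protocol, port) combinations matched by the Table 2-3 rules.
DNAT_RULES = {
    ("10.100.1.61", "udp", 48899),  # ADS discovery
    ("10.100.1.61", "tcp", 48898),  # ADS communication
}


def translate(flow: Flow) -> Optional[Flow]:
    """Return the post-DNAT flow, or None if no rule matches (not forwarded to the PLC)."""
    if flow.dst == WAN_ADDRESS and (flow.src, flow.proto, flow.port) in DNAT_RULES:
        return flow._replace(dst=PLC_ADDRESS)
    return None


print(translate(Flow("10.100.1.61", "10.100.0.20", "tcp", 48898)))
# Flow(src='10.100.1.61', dst='192.168.0.30', proto='tcp', port=48898)
```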

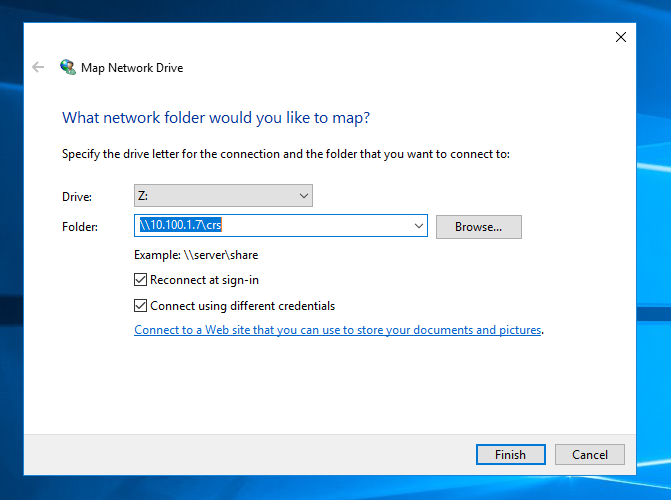

As described above for the Build 4 CRS configuration, the GreenTec WORMdisk share (\\10.100.1.7\crs) was mapped to the VDI to access the PLC code. The configuration for mapping the network drive in Windows is shown in Figure 2‑2 below:

Figure 2‑2 Mapping a Network Drive

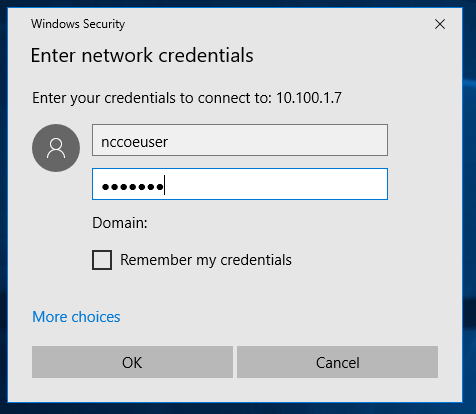

After clicking Finish, the user is prompted for credentials, as shown in Figure 2‑3. An account authorized to access the network drive must be used. This is separate from the Dispel VDI credentials.

Figure 2‑3 Authentication to File Server

2.2 Dragos¶

The Dragos platform implementation in Build 3 consists of two physical servers hosting the Dragos SiteStore and the Dragos sensor to meet the behavioral anomaly detection (BAD), hardware modification, firmware modification, and software modification capabilities. Dragos utilizes a combination of a passive sensor and integration with the OSIsoft PI Server to monitor critical networks for anomalies. OSIsoft PI performs active querying to retrieve information about endpoints in the CRS environment, which is shared with Dragos.

2.2.1 Host and Network Configuration¶

Dragos is installed and configured to support the CRS Environment in Build 3. The overall build architecture is shown in Figure B-3, and the Dragos specific components are listed in Table 2‑4.

Table 2‑4 Dragos Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| VMware Server | Dell OEMR R740 | VMware 6.7.0 Update 3 | 2x Intel 6130 CPU | 384 GB | 2x 1.5 TB mirror; 6x 8 TB RAID 10 | Testbed LAN 10.100.0.62/24 |
| Dragos Server | VMware | CentOS 7 | 48x vCPU | 192 GB | 215 GB; 10 GB; 1.5 TB; 1.5 TB | Testbed LAN 10.100.0.63/24 |
| Dragos Sensor | Dell OEM | CentOS 7 | 64x vCPU | 128 GB | 240 GB; 1 TB | Testbed LAN 10.100.0.64/24 |

2.2.2 Installation¶

The Dragos platform, which includes the SiteStore server and the Dragos sensor, was delivered by Dragos as preconfigured hardware appliances with the required IP addresses already assigned. The only installation steps were connecting the server and sensor management ports to the Testbed LAN and connecting the switch port analyzer (SPAN) port to the sensor.

The Dragos Platform Administrator Guide and Dragos Platform User Guide for Release 1.7 were used to guide the installation. Customers can obtain these guides from Dragos.

2.2.3 Configuration¶

In addition to the standard configuration preset by Dragos, the Dragos Platform was configured to work with OSIsoft PI for alerting on certain conditions.

Configure the Dragos SiteStore Server:

Configure the data connection between Dragos SiteStore and OSIsoft PI Server:

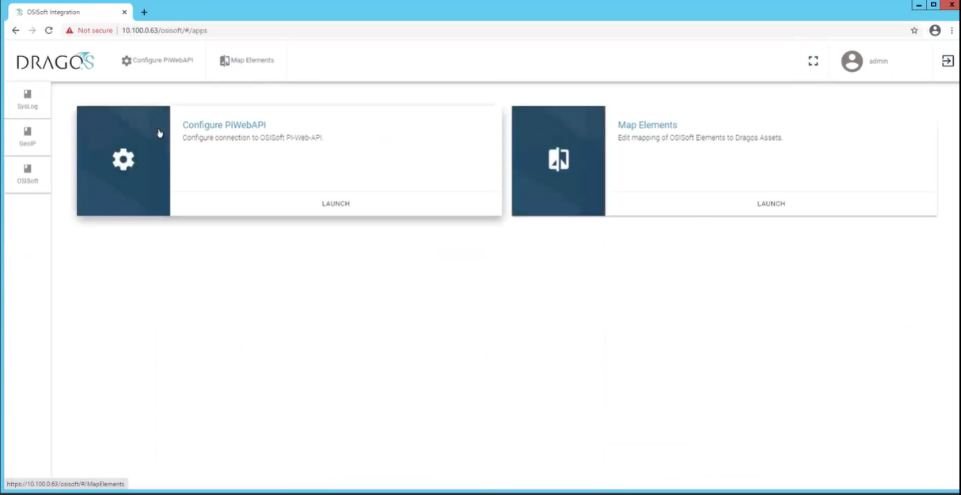

Once installation is successful, open a browser to access the configuration screen by using the URL https://<SiteStore ip address>/osisoft/#/apps. (Figure 2‑4)

Figure 2‑4 Dragos OSIsoft PI Server Integration

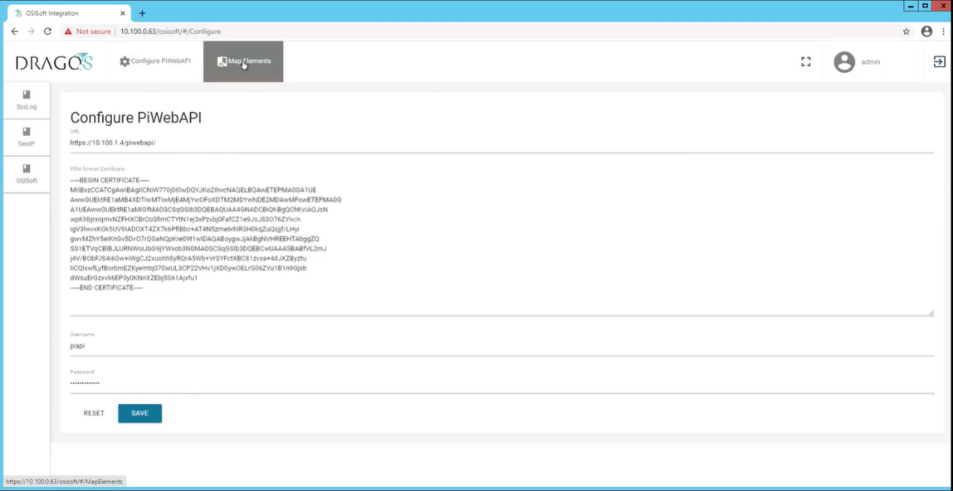

Click Configuration PI Web API to open a screen for entering the required information, including a privacy-enhanced mail (PEM) format certificate and a password for secure authentication (Figure 2‑5).

Upload the server public key for the HTTPS certificate.

Specify the user credentials for the OSIsoft PI Web API interface.

Click Save.

Figure 2‑5 Dragos PI Web API Configuration

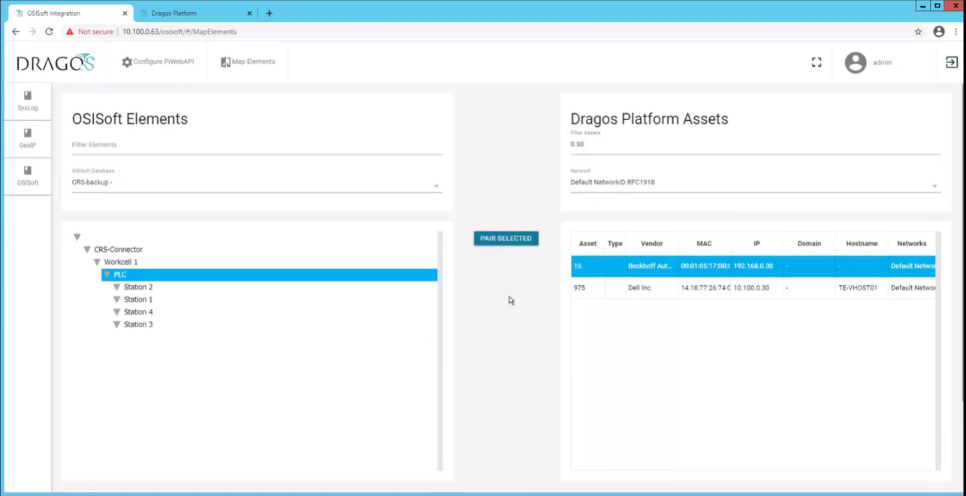

Click Map Elements to access the interface for pairing elements between the OSIsoft PI Server and Dragos Platform assets. Here, the PLC in the OSIsoft Elements panel is paired with the Beckhoff asset in the Dragos Platform (Figure 2‑6).

Select the OSIsoft Database CRS-backup on the left side to access the devices list from the Historian Database.

Select the Default NetworkID RFC 1918 and use the Filter options to find specific assets.

For each asset in the OSIsoft Database, select the corresponding asset in the Dragos asset repository and click Pair Selected.

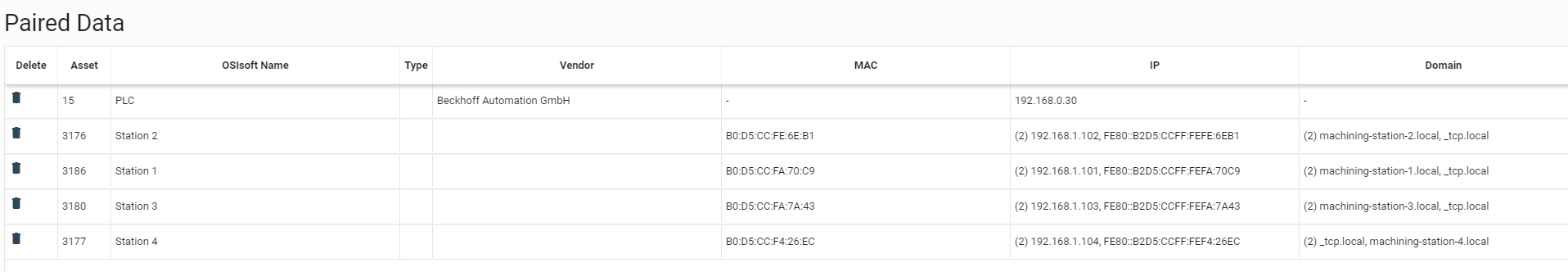

Repeat this process for each asset until all paired assets are listed in the Paired Data table (Figure 2‑7).

1) PLC paired to 192.168.0.30
2) Station 1 paired to 192.168.1.101
3) Station 2 paired to 192.168.1.102
4) Station 3 paired to 192.168.1.103
5) Station 4 paired to 192.168.1.104

Figure 2‑6 OSIsoft PI Server to Dragos Asset and Data Pairing

Figure 2‑7 OSIsoft PI Server and Dragos Paired Data Elements

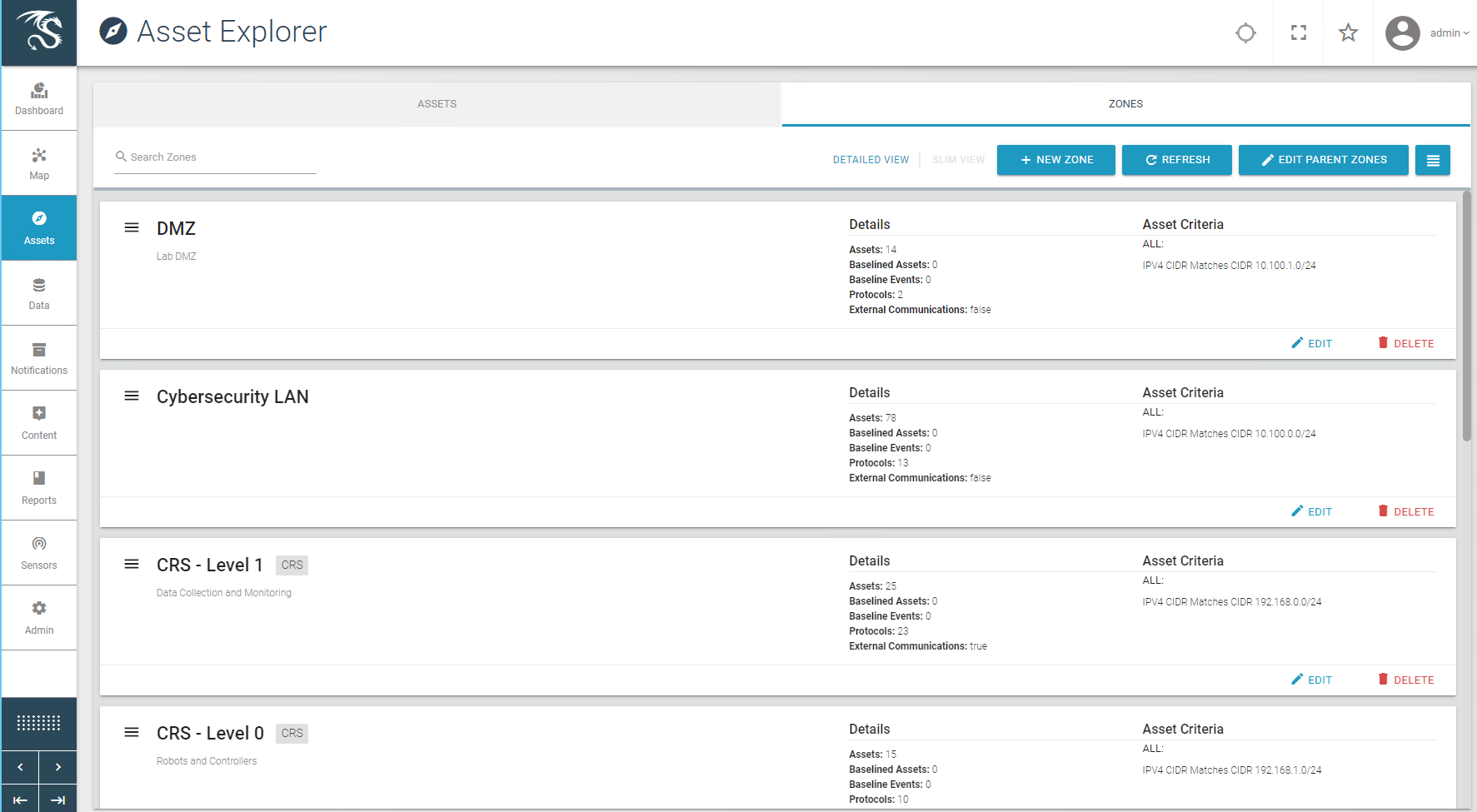

Configure Zones

NOTE: Zones are ordered in a similar manner to firewall rules; zones higher in the list take priority over zones lower in the list.

Click Assets and select the Zones tab (Figure 2‑8).

Figure 2‑8 Dragos Zone Administration Page

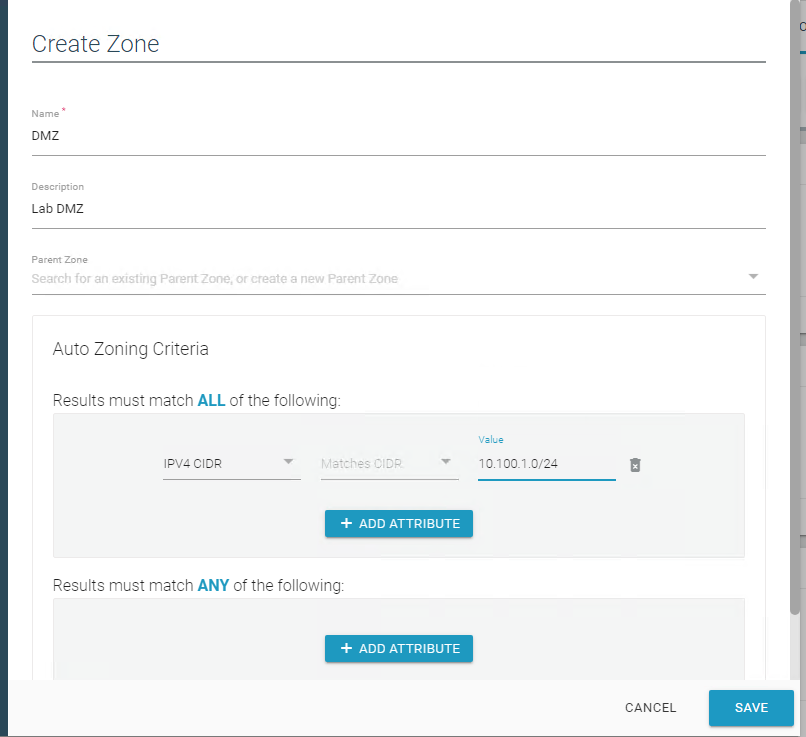

Click + New Zone (Figure 2‑9) and define the following zones:

Name: DMZ:
1) Description: Lab DMZ
2) Zone Criteria (Match ALL):
a) IPV4 CIDR Matches CIDR 10.100.1.0/24

Name: Testbed LAN:
1) Description: Lab Testbed LAN
2) Auto Zone Criteria (Match ALL):
a) IPV4 CIDR Matches CIDR 10.100.0.0/24

Name: CRS:
1) Description: Parent CRS
2) No Criteria

Name: CRS – Level 0:
1) Description: Robots and Controllers
2) Parent Zone: CRS
3) Auto Zone Criteria (Match ALL):
a) IPV4 CIDR Matches CIDR 192.168.1.0/24

Name: CRS – Level 1:
1) Description: Lab DMZ
2) Parent Zone: CRS
3) Auto Zone Criteria (Match ALL):
a) IPV4 CIDR Matches CIDR 192.168.0.0/24

Figure 2‑9 Dragos Create Zone Pop-up
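Because zones are evaluated top to bottom like firewall rules, an asset should land in the first zone whose criteria it matches. The Python sketch below models that behavior with the CIDR criteria defined above. Two points are assumptions about how Dragos handles zones, not confirmed by this guide: list order is treated as priority, and the criteria-less “CRS” zone is treated as a parent only that never matches directly. With these non-overlapping CIDRs the order does not change the result; it matters only when criteria overlap.

```python
import ipaddress

# (name, parent, CIDR criterion or None) in assumed priority order (top wins).
ZONES = [
    ("DMZ", None, "10.100.1.0/24"),
    ("Testbed LAN", None, "10.100.0.0/24"),
    ("CRS", None, None),  # parent only; assumed never to match directly
    ("CRS - Level 0", "CRS", "192.168.1.0/24"),
    ("CRS - Level 1", "CRS", "192.168.0.0/24"),
]


def assign_zone(address, zones=ZONES):
    """Return (zone, parent) for the first zone whose CIDR contains address."""
    ip = ipaddress.ip_address(address)
    for name, parent, cidr in zones:
        if cidr is not None and ip in ipaddress.ip_network(cidr):
            return name, parent
    return None, None


for asset in ("192.168.0.30", "192.168.1.101", "10.100.1.7", "203.0.113.5"):
    print(asset, assign_zone(asset))
```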

2.3 Forescout Platform¶

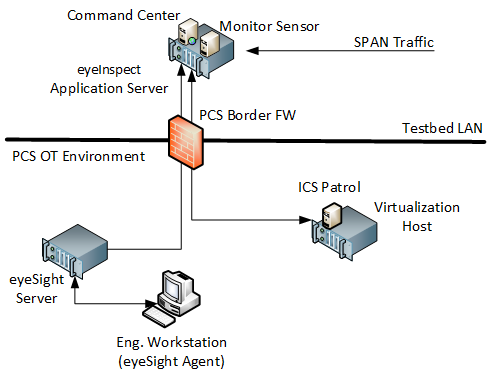

The Forescout products included in the practice guide are eyeInspect (formerly SilentDefense), eyeSight, ICS Patrol, and Forescout Console. These products are used in Build 2 to meet the BAD, hardware modification, firmware modification, and software modification capabilities. The Forescout implementation uses different components and modules installed on different devices to monitor critical networks for anomalies, along with active query capabilities to retrieve information about endpoints in the PCS environment. A high-level view of the key server and agent components is presented in Figure 2‑10.

Figure 2‑10 Forescout High-Level Components and Dataflows

eyeInspect (formerly SilentDefense)

The eyeInspect (Version 4.1.2) control server and monitoring sensor are installed on a single appliance with a management interface on the Testbed VLAN and network monitoring capabilities through a dedicated SPAN port. The SPAN port provides passive monitoring for network-based anomalies and retrieves information about endpoints within the network. The eyeInspect appliance also serves as the command center for supporting the ICS Patrol and eyeSight components.

eyeSight

Forescout eyeSight (Version 8.2.1) provides enhanced network monitoring and response using an agent installed on endpoints. In this build, eyeSight instances are configured through the Forescout Console to provide additional monitoring and reporting information to eyeInspect.

ICS Patrol

Forescout ICS Patrol (Version 1.1.2-4.a826b94) is a sensor that supports active queries for ICS devices to obtain status and other information such as hardware configuration and firmware version. ICS Patrol queries and reporting results are managed through eyeInspect.

Forescout Console

The Forescout Console (Version 8.2.1) is a Java-based application for configuring and managing eyeSight and eyeSight agents. The Forescout Console is installed on a computer with network access to the eyeSight server.

2.3.1 Host and Network Configuration¶

Forescout was installed and configured to support the PCS Environment as part of Build 2. The overall build architecture is provided in Figure B-2 with the Forescout specific components in Table 2‑5 and the eyeSight agents in Table 2‑6.

Table 2‑5 Forescout Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| eyeInspect control server | Dell Embedded Box PC 5000 | Ubuntu 16.04 | Intel i7-6820EQ | 32 GB | 250 GB | Testbed LAN 10.100.0.65 |
| Forescout Console | Hyper-V VM | Windows Server 2012 R2 | 2x vCPU | 6 GB | 65 GB | Testbed LAN 10.100.0.25 |
| eyeSight Server | Dell R640 | Ubuntu 16.04.06 | Intel Xeon Silver 4110 | 32 GB | 600 GB | PCS VLAN 2 172.16.2.61 |
| ICS Patrol | VirtualBox VM | Ubuntu 16.04.06 | 2x vCPU | 2 GB | 40 GB | PCS VLAN 2 172.16.2.62 |

For the lab environment, network connectivity between the components in the Testbed LAN and the components in the PCS environment required the following persistent route configured on Testbed LAN systems:

route -p ADD 172.16.0.0 MASK 255.255.252.0 10.100.0.40
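The Windows `route` command takes the destination and mask separately. The mask 255.255.252.0 corresponds to a /22, which spans 172.16.0.0 through 172.16.3.255. That range covers the PCS addresses listed in Tables 2‑5 and 2‑6. The following Python check, using only the standard `ipaddress` module, confirms this:

```python
import ipaddress

# Destination and mask exactly as passed to "route -p ADD" above.
pcs = ipaddress.ip_network("172.16.0.0/255.255.252.0")
print(pcs.with_prefixlen, pcs[0], pcs[-1])  # 172.16.0.0/22 172.16.0.0 172.16.3.255

# PCS hosts from Tables 2-5 and 2-6 that should be reachable via 10.100.0.40.
for host in ("172.16.1.4", "172.16.2.61", "172.16.2.62", "172.16.3.10"):
    assert ipaddress.ip_address(host) in pcs, host
```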

The following systems were configured to utilize the eyeSight Agents.

Table 2‑6 eyeSight Agent Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| Engineering Workstation | Dell T5610 | Windows 7 | Intel i5-4570 | 16 GB | 465 GB | PCS VLAN 3 172.16.3.10 |
| HMI Host | Generic | Windows 7 | Intel i5-4590 | 8 GB | 233 GB | PCS VLAN 1 172.16.1.4 |

Additional details for Build 2 are available in Section 4.5 of Volume B.

2.3.2 Installation¶

The Forescout products included in the practice guide are eyeInspect, Forescout Console, ICS Patrol, and eyeSight. These products are installed as indicated in the appropriate subsection below. To support these components, the PCS Gateway/Firewall rules were updated as follows (Table 2‑7).

Table 2‑7 Firewall Rules for Forescout

| Rule Type | Source | Destination | Port(s) | Purpose |
|---|---|---|---|---|
| Allow | 10.100.0.65 | 172.16.2.61 | 22 (SSH); 9999; 9092 | System Management (22); eyeInspect Data (9999, 9092) |
| Allow | 10.100.0.65 | 172.16.2.62 | 22 (SSH); 9001 | System Management (22); eyeInspect Data (9001) |

2.3.2.1 eyeInspect¶

eyeInspect is an appliance hosted on a Dell Embedded Box PC 5000. The unit was placed within a standard datacenter rack unit with the eyeSight appliance and connected to the network as described in Section 2.3.1. SPAN ports from the DMZ, Testbed LAN, and PCS VLAN 1, 2, and 3 switches were routed to the appliance for passive network monitoring. Installation also required uploading the license file after successfully logging onto the appliance.

2.3.2.2 Forescout Console¶

Forescout Console was installed following the standard installation procedures. Instructions can be found in the Forescout Installation Guide Version 8.2.1 available at https://docs.forescout.com. The software is available from https://forescout.force.com/support/s/downloads, where current and past versions are available. Login credentials were provided by Forescout.

2.3.2.3 eyeSight¶

Forescout eyeSight is an appliance hosted on a 1U Dell R640 that is installed within a standard datacenter rack and connected to the network as described in the previous section.

2.3.2.4 eyeSight SecureConnector Agent¶

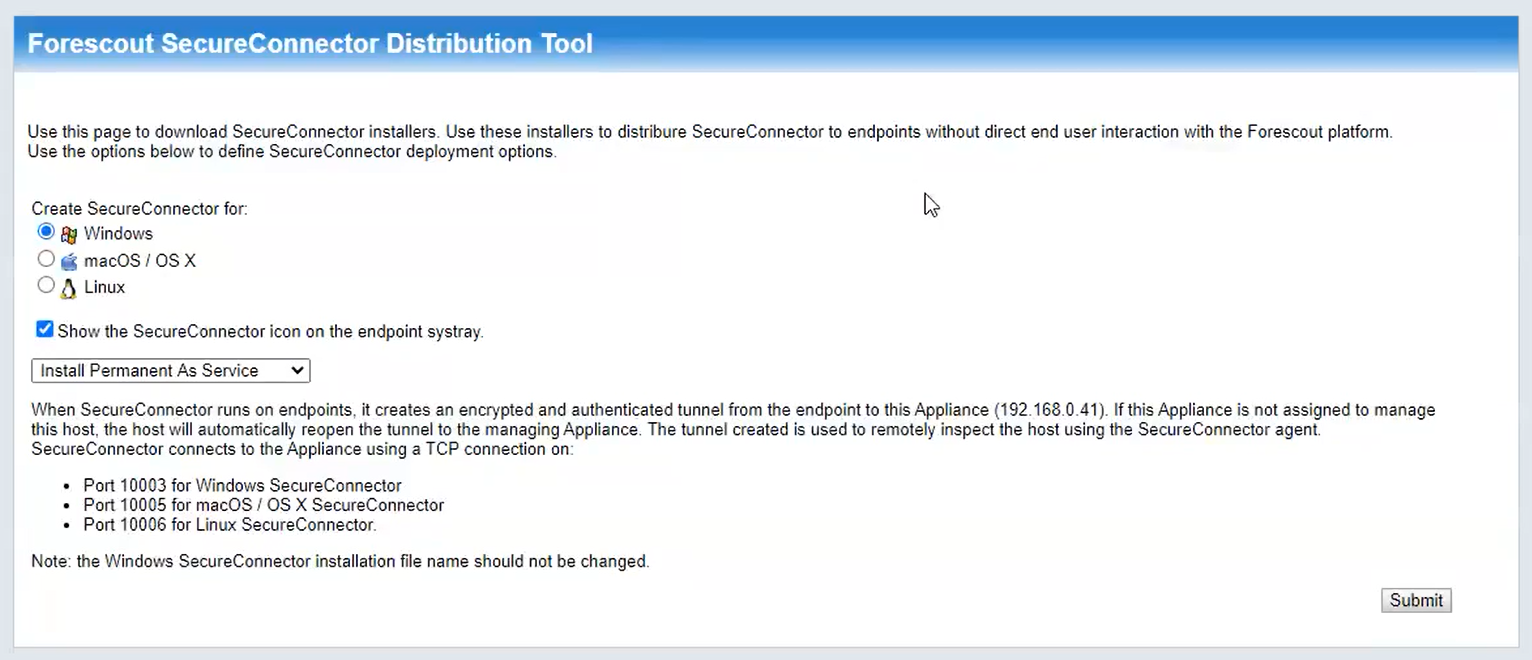

In a browser on a system with web connectivity to the eyeSight server, navigate to https://172.16.2.61/sc.jsp to access the SecureConnector download page (Figure 2‑11) and follow these steps:

Select Create SecureConnector for: Windows.

Enable Show the SecureConnector icon on the endpoint systray.

Select Install Permanent As Service.

Click Submit.

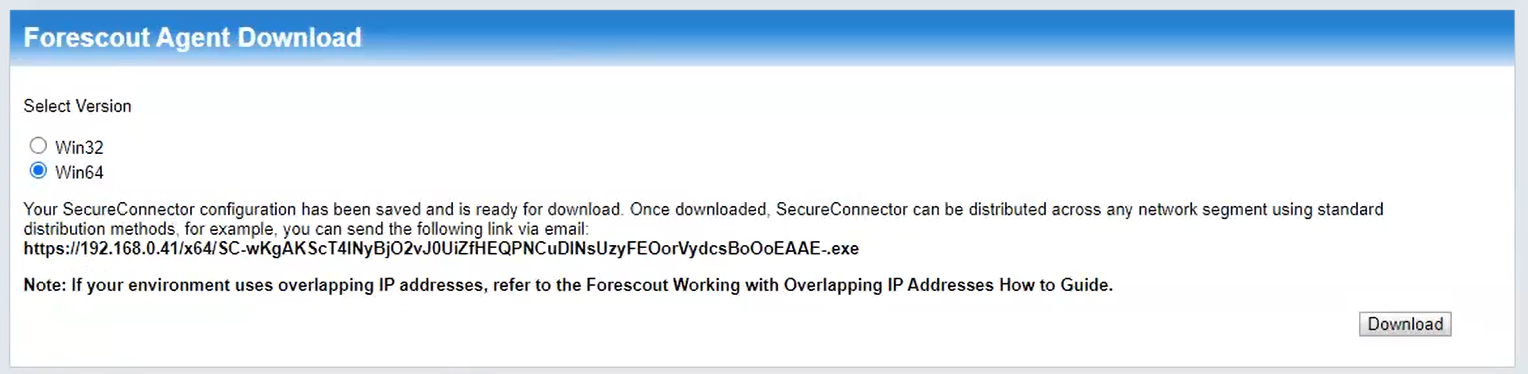

Download the Forescout Agent (Figure 2‑12):

Select Version Win64.

Click Download.

Install the downloaded agent on the target systems using an administrator account.

Figure 2‑11 Forescout SecureConnector Distribution Tool

Figure 2‑12 Forescout Agent Download

2.3.2.5 ICS Patrol¶

Forescout ICS Patrol (Version 1.1.2-4.a826b94) is a sensor that is deployed on an existing VirtualBox host in the PCS environment. Ubuntu 16.04.06 is required for proper installation and can be downloaded from http://old-releases.ubuntu.com/releases/xenial/ubuntu-16.04.6-server-amd64.iso. Install the operating system on a VM connected to PCS VLAN 2 following the procedures from the Silent Defense Installation and Configuration Guide 4.1.2 document Section 2.2.2, Installing the Linux Ubuntu OS.

Install the ICS Patrol Component from the Silent Defense Installation and Configuration Guide 4.1.2 document Sections 2.2.4 and 2.2.5 following these steps:

Establish an SSH session to the eyeInspect appliance.

Copy the components to the ICS Patrol VM:

$ scp os_provisioning_4.1.1_install.run \
      main_configuration_4.1.1_install.run \
      silentdefense@172.16.2.62:/home/silentdefense

SSH to the ICS Patrol VM and execute the installation components:

$ chmod a+x *.run
$ sudo ./os_provisioning_4.1.1_install.run
$ sudo ./main_configuration_4.1.1_install.run
$ sudo reboot

2.3.3 Configuration¶

The eyeSight agents and ICS Patrol do not require specific configurations.

2.3.3.1 eyeInspect¶

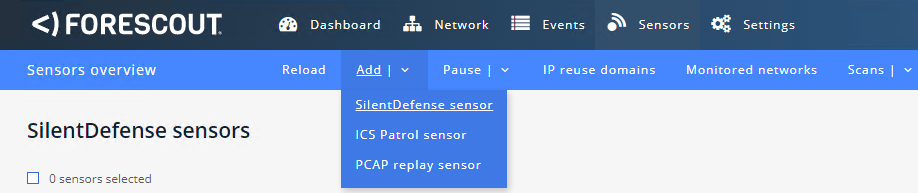

Access the eyeInspect web interface and log in with an administrator account.

Register the local sensor for SPAN traffic monitoring:

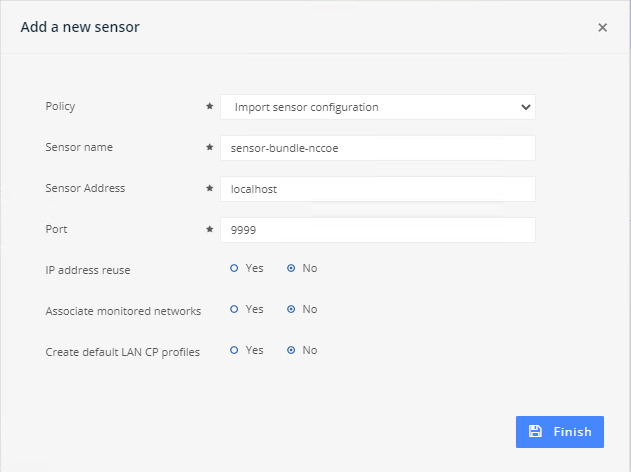

Click the Sensors tab to access the Sensor Admin/Overview Page (Figure 2‑13).

Click Add > SilentDefense sensor.

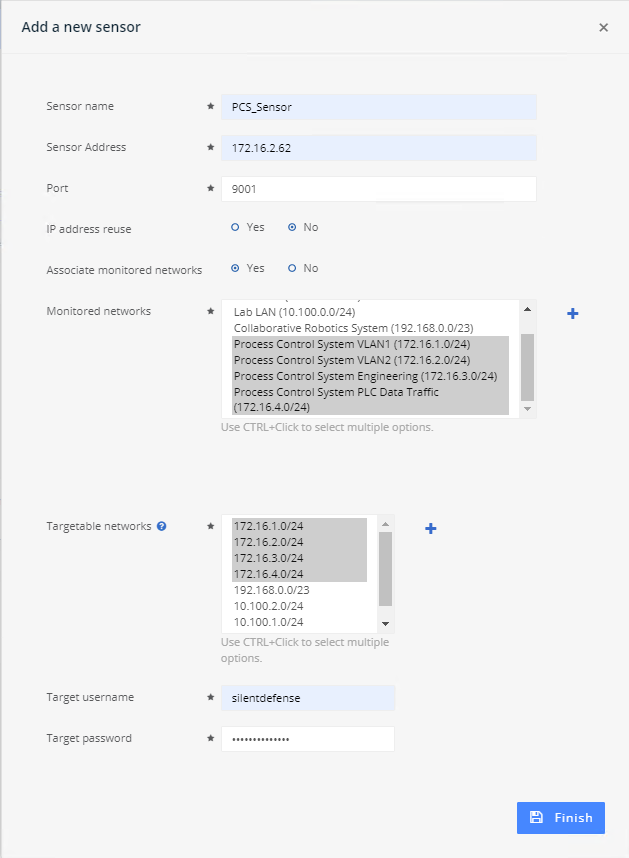

Specify the sensor parameters in the dialog box (Figure 2‑14).

Figure 2‑13 eyeInspect Sensor Admin/Overview Page – Add Sensor

Figure 2‑14 Adding a New SilentDefense Sensor Dialog

Adjust Passive Monitoring settings:

From the Dashboard, click Sensors.

Select the SilentDefense Sensor from the list of available sensors.

Click the Industrial Threat Library Overview option in the upper right corner.

Click the Security menu option on the left under Checks by Category.

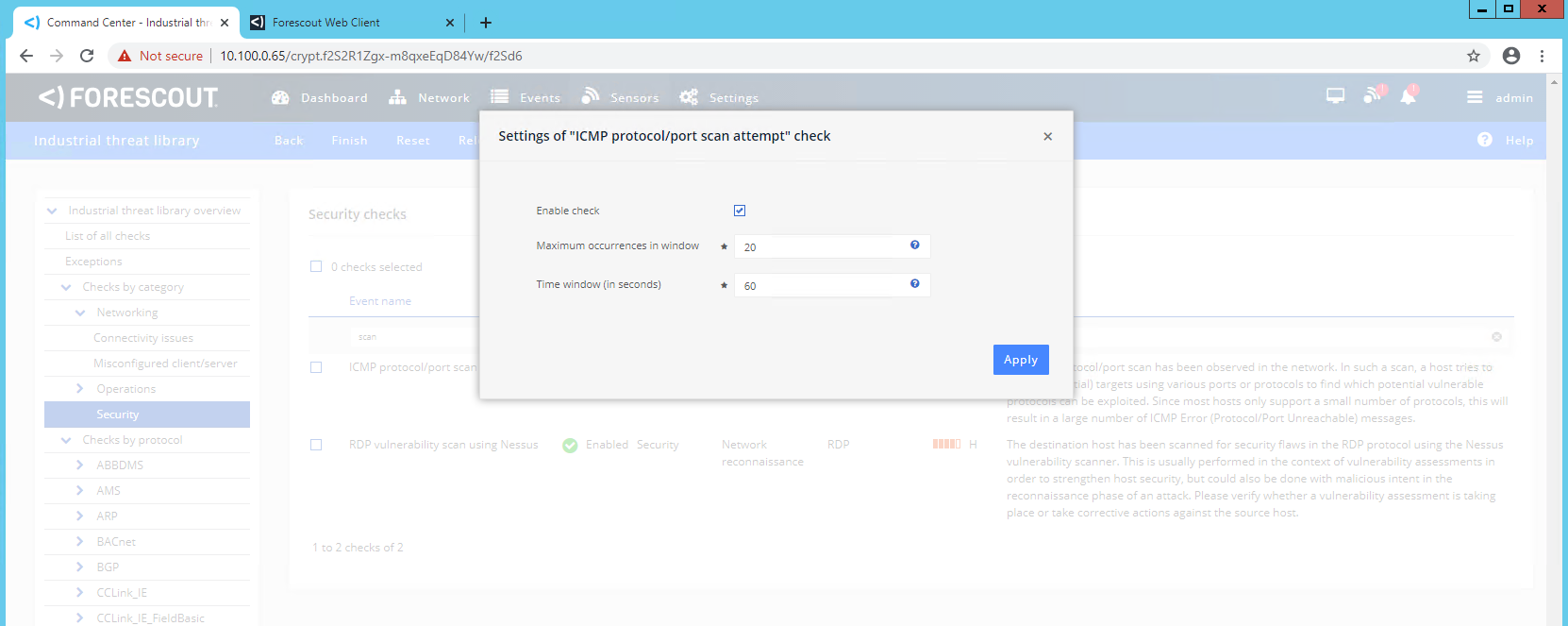

Enter “ICMP” in the Search field to reduce the list of available options.

Click the ICMP protocol/port scan attempt to open the settings dialog box (Figure 2‑15) and verify the following settings:

Verify Enable Check is selected.

Verify Maximum occurrences in window is set to 20.

Verify Time Window (in seconds) is set to 60.

Figure 2‑15 eyeInspect ICMP Protocol/Port Scan Attempt Settings
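One way to read the “Maximum occurrences in window” (20) and “Time Window” (60 seconds) settings is as a sliding-window threshold: an alert is raised when more than 20 matching ICMP events fall within any 60-second span. The Python sketch below implements that interpretation. Whether eyeInspect alerts at the 20th or the 21st occurrence, and how it resets afterward, are assumptions not confirmed by this guide.

```python
from collections import deque


class SlidingWindowThreshold:
    """Flag when more than max_events timestamps fall within window_s seconds.

    Interpretation assumed here: alert only when the count EXCEEDS max_events.
    """

    def __init__(self, max_events=20, window_s=60.0):
        self.max_events = max_events
        self.window_s = window_s
        self.events = deque()

    def observe(self, timestamp):
        """Record one event; return True if the threshold is now exceeded."""
        self.events.append(timestamp)
        # Drop events that have aged out of the window ending at this timestamp.
        while self.events and timestamp - self.events[0] >= self.window_s:
            self.events.popleft()
        return len(self.events) > self.max_events


detector = SlidingWindowThreshold()
alerts = [detector.observe(t) for t in range(21)]  # 21 ICMP probes, one per second
print(alerts[-2], alerts[-1])  # False True
```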

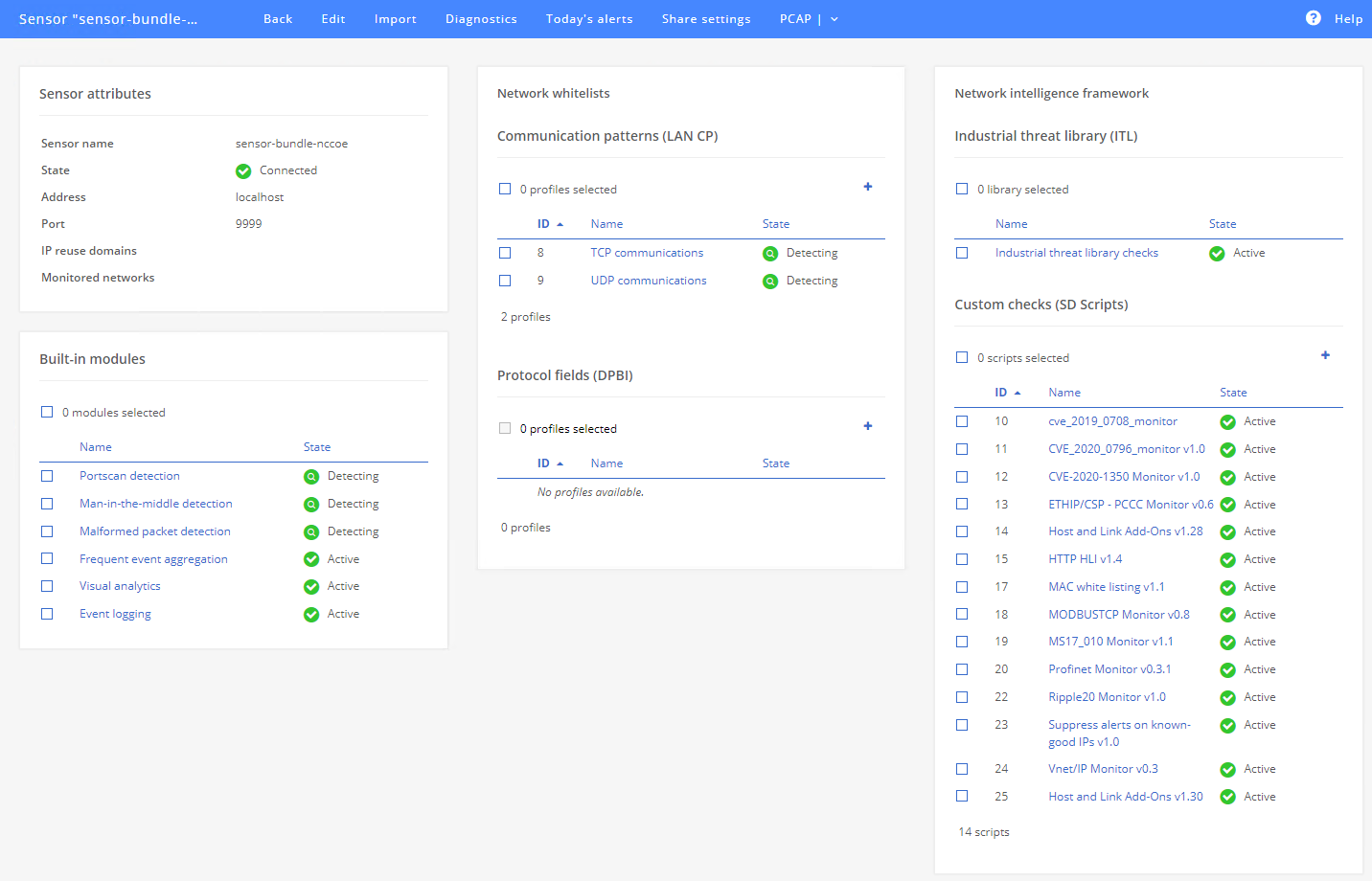

Select Portscan Detection under Built-in Modules (Figure 2‑16).

Figure 2‑16 eyeInspect Sensor Configuration Options

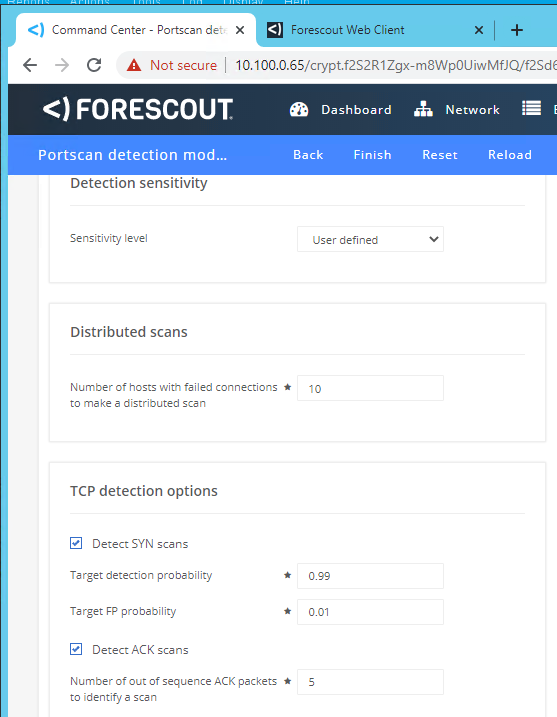

Click the Settings tab and set the following parameters (Figure 2‑17):

Sensitivity level: User defined

Number of Hosts with failed connections to make a distributed scan: 10

Detect SYN scans: Checked

Target detection probability: 0.99

Target FP probability: 0.01

Detect ACK scans: Checked

Number of out of sequence ACK packets: 5

Figure 2‑17 eyeInspect Portscan Detection Settings
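The Portscan Detection settings are expressed as a target detection probability and a target false-positive probability. Sequential hypothesis-testing scan detectors such as Threshold Random Walk (TRW) are parameterized the same way. The Python sketch below is a generic TRW classifier, included only to illustrate what those two probabilities control. It is not Forescout's implementation. The per-connection success probabilities for benign hosts (0.8) and scanners (0.2) are illustrative assumptions.

```python
def trw_classify(outcomes, p_detect=0.99, p_false=0.01,
                 theta_benign=0.8, theta_scanner=0.2):
    """Classify a remote host from a sequence of connection outcomes.

    Generic TRW sketch (not Forescout's algorithm); theta values are assumed.
    outcomes: iterable of booleans, True = connection attempt succeeded.
    Returns "scanner", "benign", or "pending" (not enough evidence yet).
    """
    upper = p_detect / p_false              # decide "scanner" at or above this
    lower = (1 - p_detect) / (1 - p_false)  # decide "benign" at or below this
    ratio = 1.0                             # P(observations | scanner) / P(observations | benign)
    for ok in outcomes:
        if ok:
            ratio *= theta_scanner / theta_benign
        else:
            ratio *= (1 - theta_scanner) / (1 - theta_benign)
        if ratio >= upper:
            return "scanner"
        if ratio <= lower:
            return "benign"
    return "pending"


print(trw_classify([False] * 4))  # scanner
print(trw_classify([True] * 4))   # benign
```

Raising the target detection probability or lowering the false-positive probability widens the gap between the two thresholds, so more observations are needed before the detector commits to a decision.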

Register the ICS Patrol Sensor:

From the Sensor admin page, click Add > ICS Patrol sensor.

Specify the sensor parameters in the dialog box (Figure 2‑18).

Figure 2‑18 Add ICS Patrol Sensor Dialog

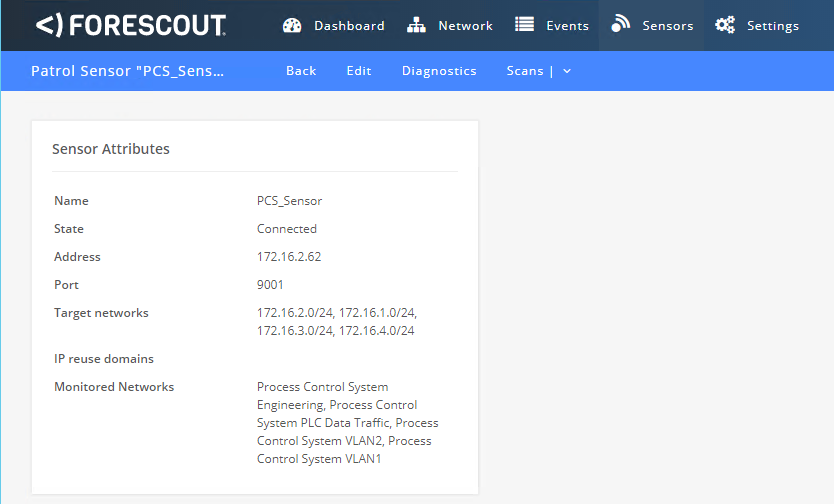

Define a scan policy to periodically check the PCS PLC to monitor for changes.

Click the PCS Sensor created in the previous step to open the sensor admin page (Figure 2‑19).

Figure 2‑19 ICS Patrol Sensor Admin Page

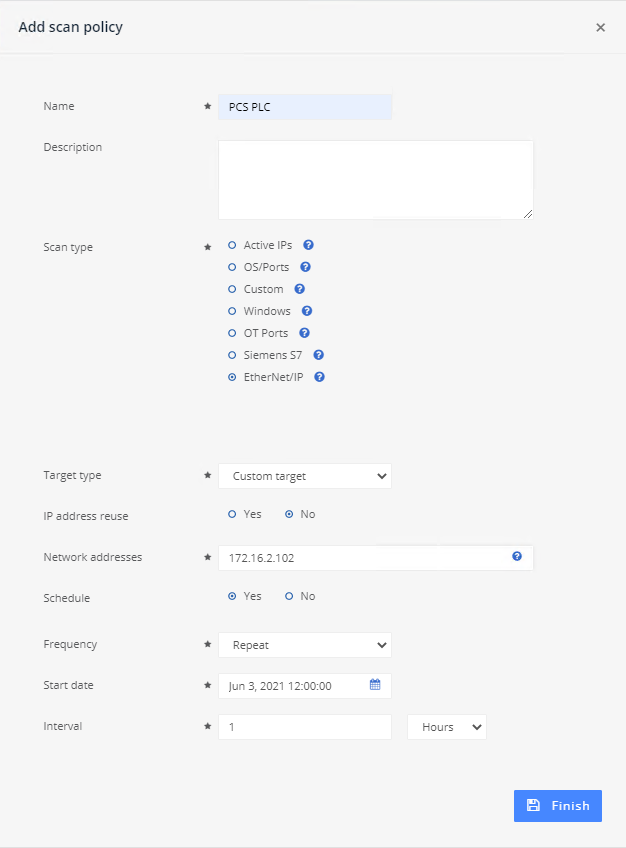

Click Scans > Scan Policies.

In the dialog option (Figure 2‑20) enter the scanning parameters:

1) Name: PCS PLC
2) Scan Type: EtherNet/IP
3) Target Type: Custom target
4) IP address reuse: No
5) Network Address: 172.16.2.102
6) Schedule: Yes
7) Frequency: Repeat
8) Interval: 1 (select “Hours” from the drop-down menu)
9) Click Finish.

Figure 2‑20 Add an ICS Patrol Scan Policy
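Each scheduled scan produces a snapshot of the properties the PLC reports. Detecting a change therefore comes down to comparing the latest snapshot with an approved baseline. The following is a minimal Python sketch of that comparison. The field names and values are hypothetical placeholders, not ICS Patrol's output format.

```python
def changed_fields(baseline, snapshot):
    """Return {field: (expected, observed)} for every field that differs."""
    fields = baseline.keys() | snapshot.keys()
    return {f: (baseline.get(f), snapshot.get(f))
            for f in sorted(fields) if baseline.get(f) != snapshot.get(f)}


# Hypothetical field names and values for illustration only.
baseline = {"ip": "172.16.2.102", "firmware": "A.1", "serial": "0001"}
latest = {"ip": "172.16.2.102", "firmware": "A.2", "serial": "0001"}
print(changed_fields(baseline, latest))  # {'firmware': ('A.1', 'A.2')}
```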

2.3.3.2 eyeSight¶

Using the Forescout Console application, users may configure, monitor, and manage the eyeSight appliance and agents. The Forescout Console is also used to test and verify connectivity to the eyeInspect server.

Log in to the Forescout Console.

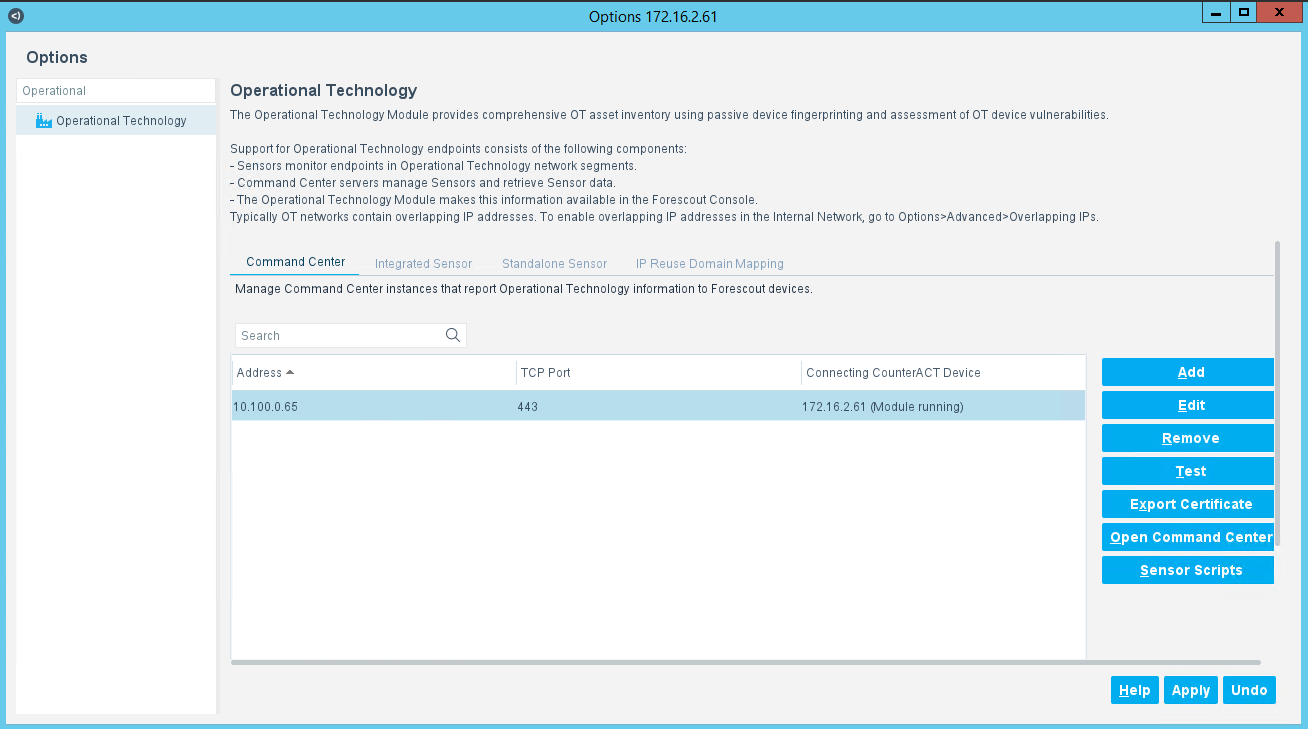

Select the Gear Icon in the upper right corner or the Tools > Option menu item to bring up the Options display.

Enter “Operational” in the search bar.

Select the Operational Technology tab on the left side of the screen to display the current settings.

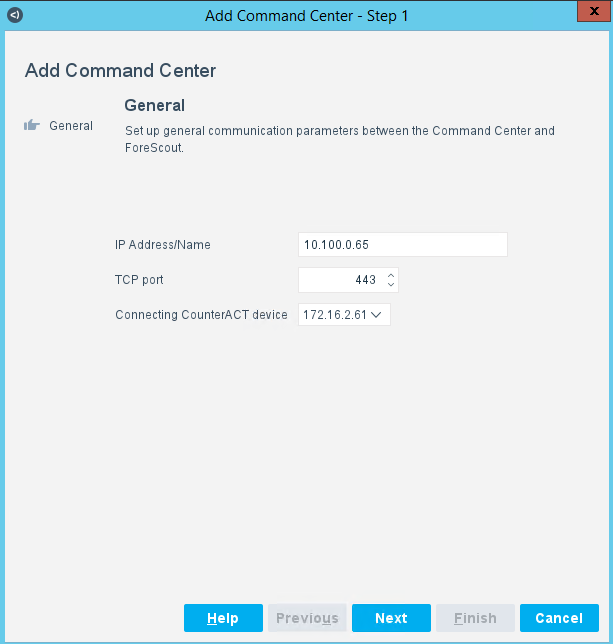

Select the IP entry for the Command Center and select Add to start the workflow process.

Specify General Information (Figure 2‑21):

Enter the Command Center IP Address “10.100.0.65” for IP Address/Name.

Select “172.16.2.61” from the Connecting CounterAct device drop-down menu.

Select “443” from the TCP Port drop-down menu.

Figure 2‑21 eyeSight Add Dialog – General Information

Click Next.

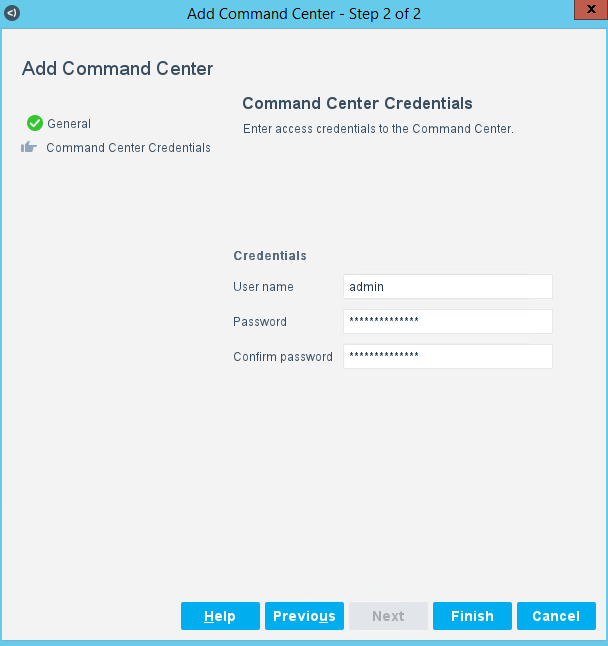

Enter the command center credentials (Figure 2‑22).

Click Finish.

Figure 2‑22 eyeSight Add – Command Center Credentials

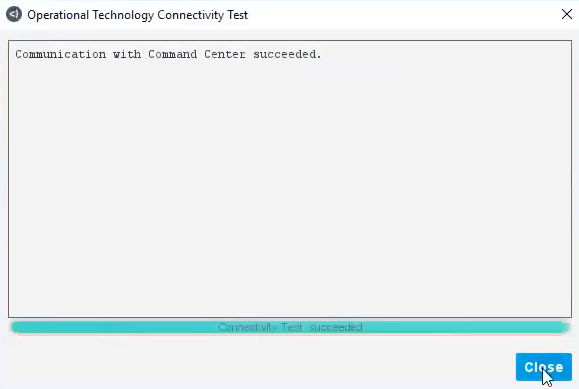

Select the IP address for the Command Center and click Test (Figure 2‑23). If the connection is successful, a message like the one shown in Figure 2‑24 is displayed.

Click Apply to save the changes.

Click Close to close the message.

Figure 2‑23 eyeSight OT Settings

Figure 2‑24 eyeSight Test Connection Successful Message

2.4 GreenTec-USA¶

The GreenTec-USA products included in this practice guide are the ForceField and WORMdisk zero trust storage devices. These products were utilized in Builds 1, 2, 3, and 4 to meet the File Integrity Checking capability by storing and protecting critical PCS and CRS data from modification and deletion.

ForceField

A ForceField hard disk drive (HDD) provides a protected write-once-read-many data storage location for historian data backups and database backups. Data is protected immediately as it is written to the HDD in real time, permanently preventing its modification or deletion.

WORMdisk

A WORMdisk HDD provides a protected data storage location for PLC logic, device firmware, and approved software applications for use in the manufacturing environment. Data is protected by “locking” individual partitions of the HDD using a software utility, permanently preventing its modification or deletion.

2.4.1 Host and Network Configuration¶

The WORMdisk and ForceField HDDs were installed in a rack-mount server appliance provided by GreenTec-USA and described in Table 2‑8. The overall build architectures utilizing this appliance and devices are described in Section 4.5 in Volume B.

Table 2‑8 GreenTec-USA WORMdrive and ForceField Deployment

Name |

System |

OS |

CPU |

Memory |

Storage |

Network |

|---|---|---|---|---|---|---|

GreenTec-USA Server |

Supermicro x8 Series Server |

Ubuntu 18.04 |

2x Intel Xeon E5620 |

16 GB |

750 GB OS

1.0 TB

WORMdisk

1.0 TB

ForceField

|

DMZ

10.100.1.7

|

2.4.2 Installation¶

The ForceField and WORMdisk HDDs were hosted on a hardware appliance provided by GreenTec-USA. The unit was placed within a standard datacenter rack unit and connected to the network as shown in Figure B-1, Figure B-2, Figure B-3, and Figure B-4.

Full documentation and installation guides are provided to customers by GreenTec-USA.

NIST chose Samba, which implements the SMB network file sharing protocol, because of the prevalence of Windows and Linux workstations within the testbed. The GreenTec-USA appliance did not come with Samba preinstalled, so Samba was installed with the Ubuntu Advanced Packaging Tool (APT) from the Ubuntu package repository.

NOTE: GreenTec-USA typically provides turnkey server storage solutions. Installation and configuration of file sharing packages and other software will likely not be required.

NOTE: Many of the commands used to manage the ForceField and WORMdisk HDDs must be executed by a user with superuser privileges or as the root user.

Add the default gateway so the appliance can communicate with other devices on the network using the following command:

$ sudo route add default gw 10.100.1.1

In a terminal window on the GreenTec-USA appliance, execute these commands:

$ sudo apt update
$ sudo apt -y install samba
$ sudo ufw allow samba

2.4.3 Configuration¶

The appliance provided by GreenTec-USA for this project was preconfigured with the ForceField HDD as device /dev/sdc and the WORMdisk HDD as device /dev/sdb.

2.4.3.1 ForceField HDD¶

The ForceField HDD is configured as a mounted volume, allowing the drive to be used as a typical HDD by using native operating system commands.

Create a mount point (empty directory) for the ForceField HDD using the following command:

$ sudo mkdir /mnt/forcefield

Start the ForceField WFS volume manager to mount the drive using the following command:

$ sudo /opt/greentec/forcefield/bin/wfs /dev/sdc /mnt/forcefield/

2.4.3.2 WORMdisk HDD¶

The WORMdisk is divided into 120 partitions to enable periodic updates and revisions to the protected data (i.e., data in the “golden” directory). Once a partition is locked, it cannot be modified, so the next sequential partition on the drive is used as the new “golden” directory.

Format the WORMdisk with 120 partitions using the following command (NOTE: this operation must be performed from the command line as administrator on a computer running the Microsoft Windows OS):

> gt_format.exe 1 /parts:120

In the Ubuntu OS, create the mount point for the WORMdisk HDD partition using the following command:

$ sudo mkdir /mnt/golden

Add a persistent mount to the /etc/fstab file (appending through sudo tee ensures the write to /etc/fstab runs with root privileges):

$ echo "/dev/sdb2 /mnt/golden fuseblk rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,blksize=4096 0 0" | sudo tee -a /etc/fstab

Create a directory structure within the “golden” directory and copy approved files into those directories (e.g., PLC logic, device firmware, approved software).

Once all files have been copied and verified, lock the partition to protect the data:

$ sudo /greentec/Ubuntu/wvenf /dev/sdb2

When it is time to create a new “golden” partition, the partition names in the /etc/fstab file must be updated to point to the correct partition. The following instructions provide an example process to update the files and increment the golden partition from /dev/sdb2 to /dev/sdb3.

On the GreenTec-USA appliance, create a temporary directory, mount the next unlocked WORMdisk partition on it, and copy the contents of the existing “golden” directory to the temporary directory:

$ sudo mkdir /mnt/tmp
$ sudo mount /dev/sdb3 /mnt/tmp
$ sudo cp -R /mnt/golden/. /mnt/tmp/

Update the files and folders in the temporary directory, /mnt/tmp, as desired.

Unmount the temporary directory and lock the partition:

$ sudo umount /mnt/tmp
$ sudo /greentec/Ubuntu/wvenf /dev/sdb3

Stop the Samba service:

$ sudo systemctl stop smbd.service

Unmount the golden partition:

$ sudo umount /mnt/golden

Modify the /etc/fstab file with the new partition name and save the file:

/dev/sdb3 /mnt/golden fuseblk rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,blksize=4096 0 0

Re-mount all partitions, start the Samba service, and remove the temporary directory:

$ sudo mount -a
$ sudo systemctl start smbd.service
$ sudo rmdir /mnt/tmp
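The manual /etc/fstab edit in this rotation can be scripted. The Python sketch below rewrites only the uncommented entry whose mount point is /mnt/golden: it swaps the device for the next partition number (for example, /dev/sdb2 to /dev/sdb3) and leaves all other lines untouched. It works on text, so the result can be reviewed before /etc/fstab is replaced. The function name is hypothetical. The sketch assumes the WORMdisk partitions appear on Linux as /dev/sdbN with N no greater than 120.

```python
import re

# Assumption: WORMdisk partitions appear as /dev/sdbN with N from 1 to max_partition.
DEVICE = re.compile(r"(/dev/[a-z]+)(\d+)")


def rotate_golden(fstab_text, mount_point="/mnt/golden", max_partition=120):
    """Return fstab text with the golden entry moved to the next partition number.

    Only the uncommented line whose second field is mount_point is changed.
    """
    out = []
    for line in fstab_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and not fields[0].startswith("#") and fields[1] == mount_point:
            match = DEVICE.fullmatch(fields[0])
            if match is None:
                raise ValueError(f"unexpected device name: {fields[0]}")
            number = int(match.group(2)) + 1
            if number > max_partition:
                raise ValueError("no further WORMdisk partitions are available")
            line = line.replace(fields[0], f"{match.group(1)}{number}", 1)
        out.append(line)
    return "\n".join(out) + "\n"


OPTIONS = "rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other,blksize=4096"
current = f"/dev/sdb2 /mnt/golden fuseblk {OPTIONS} 0 0\n"
print(rotate_golden(current), end="")
```

Write the result to /etc/fstab only after the new partition has been populated and locked as described above.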

2.4.3.3 Samba¶

Add local user accounts to the appliance for accessing the network file shares and create a password:

$ sudo adduser nccoeuser
$ sudo smbpasswd -a nccoeuser

Open the file /etc/samba/smb.conf and add the following content to the end of the file to create the individual shares:

# GreenTec-USA ForceField Share
# OSIsoft PI historian and database backups
[ForceField]
strict sync = no
browsable = yes
guest ok = no
path = /mnt/forcefield
read only = no
writeable = yes
case sensitive = yes

# GreenTec-USA Golden WORMDisk Share
[golden]
browsable = yes
guest ok = no
path = /mnt/golden
read only = no
writeable = yes
case sensitive = yes
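Before restarting Samba, it is worth confirming that both shares disable guest access and point at the intended mount points. Simple share definitions like these are INI-like, so Python's standard `configparser` can read them. This is a convenience check only: Samba's own `testparm` utility remains the authoritative validator. The sample string restates the two shares above, and the helper name is hypothetical.

```python
import configparser

# Restatement of the two share definitions above (subset of parameters).
SHARES = """
[ForceField]
browsable = yes
guest ok = no
path = /mnt/forcefield
read only = no

[golden]
browsable = yes
guest ok = no
path = /mnt/golden
read only = no
"""

EXPECTED_PATHS = {"ForceField": "/mnt/forcefield", "golden": "/mnt/golden"}


def share_problems(text, expected=EXPECTED_PATHS):
    """List problems found in the share definitions (convenience check, not testparm)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    for name, path in expected.items():
        if not parser.has_section(name):
            problems.append(f"missing share [{name}]")
            continue
        share = parser[name]
        if share.get("path") != path:
            problems.append(f"[{name}] path is {share.get('path')!r}, expected {path!r}")
        # A missing "guest ok" setting is flagged too (fallback=True).
        if share.getboolean("guest ok", fallback=True):
            problems.append(f"[{name}] allows guest access")
    return problems


print(share_problems(SHARES))  # []
```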

Restart Samba:

$ sudo systemctl restart smbd.service

2.4.3.4 OSIsoft PI Server and Database Backups¶

Create the scheduled backup task to back up PI Data Archive files. The copy task automatically inserts the current date and time stamp into the filename of each file copied to the ForceField drive. Follow these steps:

On the server containing the PI Data Archive, open a command prompt with Administrator privileges.

Change to the PIadm directory:

> cd /d "%piserver%adm"

Create the backup directory, and start the Windows scheduled task to perform the backup:

> pibackup h:\PIBackup -install

Create a scheduled task to copy the backup files to the ForceField HDD. Follow these steps:

Open the Task Scheduler and create a new scheduled task to rename, timestamp, and copy the backup files to the ForceField HDD:

Trigger: At 3:30 AM every day

Action: Start a Program

Program/script:

C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe

Add arguments (optional):

-Command "Get-ChildItem -Path 'h:\PIBackup\arc\' | ForEach-Object { Copy-Item -Path $_.FullName -Destination ('\\10.100.1.7\ForceField\' + (Get-Date -Format 'yyyy-MM-dd_HHmmss') + '_' + $_.Name) }"
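The intended destination naming convention is the date, then hour, minute, and second, then the original file name. Reproducing it in Python makes it easy to predict or verify what should appear on the ForceField share. Note that in .NET/PowerShell format strings `MM` means month and `mm` means minutes, whereas Python's `strftime` uses `%m` for month and `%M` for minutes. The share path is the lab value from this guide. The helper name and the sample file name are hypothetical.

```python
from datetime import datetime

FORCEFIELD_SHARE = r"\\10.100.1.7\ForceField"  # lab UNC path from this guide


def forcefield_destination(source_name, when):
    """Build <share>\\<yyyy-MM-dd_HHmmss>_<original name> (hypothetical helper)."""
    stamp = when.strftime("%Y-%m-%d_%H%M%S")  # %m = month, %M = minutes
    return FORCEFIELD_SHARE + "\\" + f"{stamp}_{source_name}"


print(forcefield_destination("piarch.001", datetime(2021, 3, 4, 3, 30, 15)))
# \\10.100.1.7\ForceField\2021-03-04_033015_piarch.001
```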

2.5 Microsoft Azure Defender for IoT¶

Microsoft Azure Defender for IoT, based on technology acquired via CyberX, consists of a single appliance containing the sensor and application interface integrated into Build 4 to meet BAD, hardware modification, firmware modification, and software modification capabilities. The Microsoft Azure Defender for IoT implementation utilizes passive monitoring and protocol analysis to support cybersecurity monitoring and threat detection.

2.5.1 Host and Network Configuration¶

Microsoft Azure Defender for IoT was installed and configured to support the CRS environment as part of Build 4. The overall build architecture is provided in Figure B-4. The Microsoft Azure Defender for IoT specific components are in Table 2‑9.

Table 2‑9 Microsoft Azure Defender IoT Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| Azure Defender for IoT | Dell OEMR XL R340 | Ubuntu 18.04 | Intel Xeon E-2144G | 32 GB | 3x 2 TB drives, RAID 5 | Testbed LAN 10.100.0.61 |

2.5.2 Installation

The Microsoft Azure Defender for IoT (version 10.0.3) appliance was delivered with the operating system and application preinstalled. The appliance is rack-mounted, with power connected, the Eth0 port connected to the Testbed LAN, and the SPAN connection attached to the expansion network interface board.

2.5.3 Configuration

To configure the Microsoft Azure Defender for IoT platform, follow these steps:

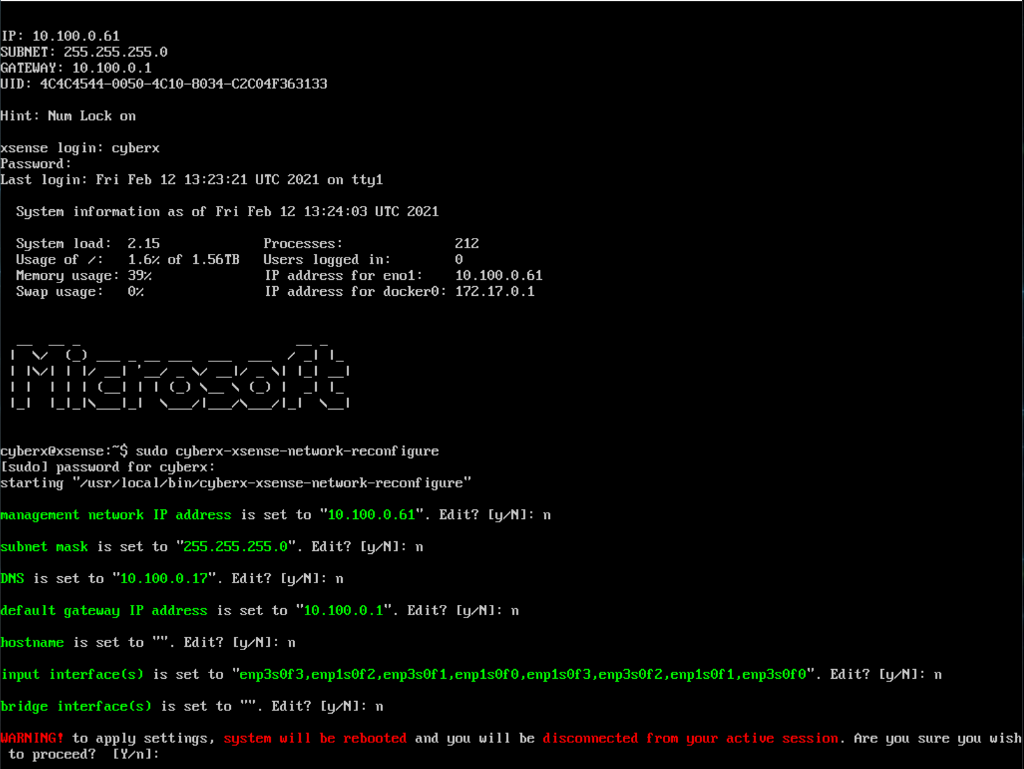

Set the Network Configuration:

Establish shell access to the appliance using an SSH, iDRAC, or KVM console connection.

From the console, enter the following command:

$ sudo cyberx-xsense-network-reconfigure

The system prompts for a series of network options (Figure 2‑25), which are set as follows:

IP Address: “10.100.0.61”

Subnet Mask: “255.255.255.0”

DNS: “10.100.0.17”

Default Gateway: “10.100.0.1”

Hostname: Not set

Input Interface(s): “enp3s0f3, enp1s0f2, enp3s0f1, enp1s0f0, enp1s0f3, enp3s0f2, enp1s0f1, enp3s0f0”

Bridge Interface(s): Not set

Figure 2‑25 Azure Defender for IoT SSH Session for Network Configuration
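The values entered at the prompts can be sanity-checked before they are applied. The following minimal Python sketch, which is not a feature of the appliance, uses the standard ipaddress module to confirm that the default gateway and DNS server fall within the subnet defined by the appliance address and subnet mask. The function name check_network_settings is hypothetical.

```python
import ipaddress


def check_network_settings(ip, netmask, gateway, dns):
    """Summarize whether the gateway and DNS server sit on the appliance subnet."""
    # IPv4Interface accepts the dotted-decimal netmask form used in the setup prompts.
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
    subnet = iface.network
    return {
        "network": str(subnet),
        # A default gateway must be directly reachable, i.e., on the local subnet.
        "gateway_on_subnet": ipaddress.IPv4Address(gateway) in subnet,
        # A DNS server may legitimately be routed elsewhere; this only reports it.
        "dns_on_subnet": ipaddress.IPv4Address(dns) in subnet,
    }


print(check_network_settings("10.100.0.61", "255.255.255.0", "10.100.0.1", "10.100.0.17"))
```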

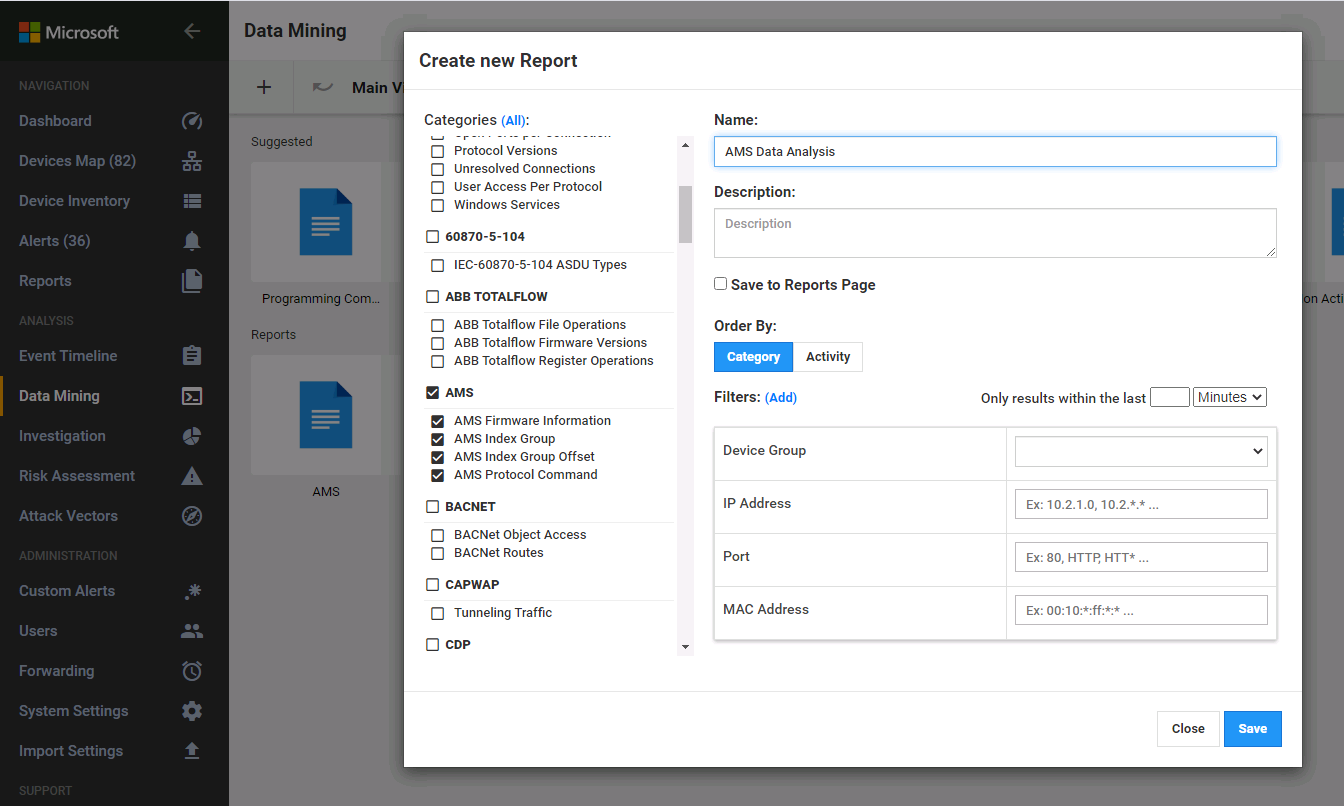

Create an AMS protocol data mining report:

Log in to the application web interface and click Data Mining in the left navigation menu.

Click the + sign and click New Report. In the Create New Report panel, configure the following settings (Figure 2‑26):

Under Categories, select AMS; this automatically selects its sub-elements:

1) AMS Firmware Information
2) AMS Index Group
3) AMS Index Group Offset
4) AMS Protocol Command

Enter “AMS Data Analysis” as the name for the report.

Click Save.

Figure 2‑26 Azure Defender for IoT Create New Data Mining Report for AMS Protocol Information

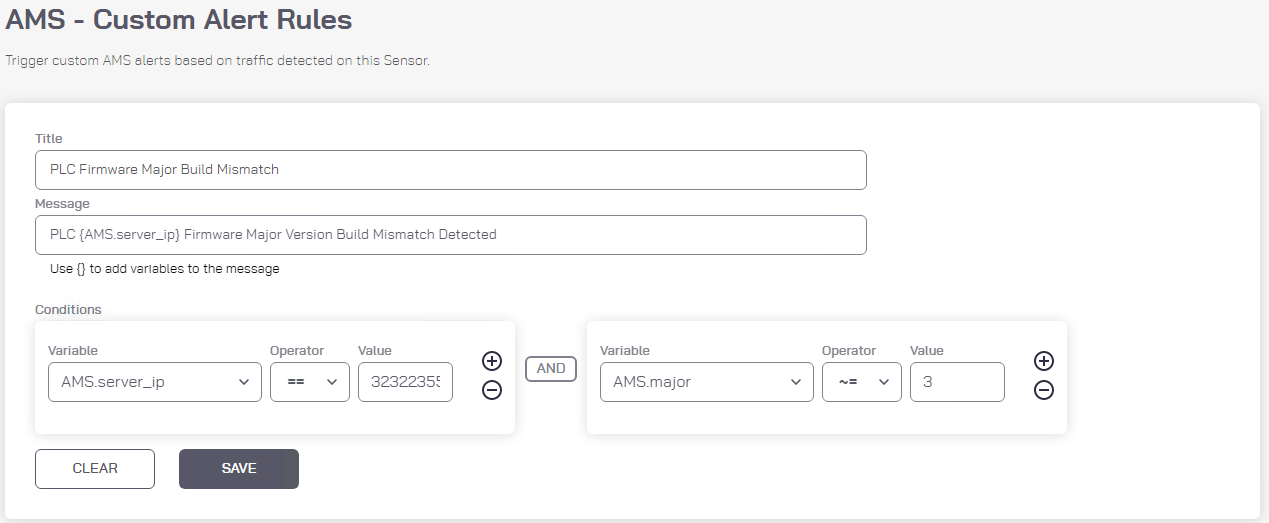

Create AMS custom alert rules:

For this effort, the CRS PLC runs firmware version 3.1.4022, the approved production firmware version. To detect changes to it, custom alert rules monitor AMS protocol messages on the network for major, minor, or build version numbers that deviate from the approved values.

Click Horizon in the left navigation menu.

Select AMS > Horizon Custom Alert under Plugin Options in the left menu.

Create the custom alert to detect a change in the PLC firmware major build number (Figure 2‑27):

Enter “PLC Firmware Major Build Mismatch” as the title for the custom alert.

Enter “PLC {AMS_server_ip} Firmware Major Version Build Mismatch Detected” as the message to display with the alert.

Set the following conditions:

1) AMS_server_ip == 3232235550 (Note: this is the PLC IP address 192.168.0.30 in integer format.)
2) AND AMS_major ~= 3

Figure 2‑27 Azure Defender for IoT Custom Alert for Firmware Major Version Number Change
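The integer in the AMS_server_ip condition is the PLC's IPv4 address read as a 32-bit big-endian number, that is, 192 × 256^3 + 168 × 256^2 + 0 × 256 + 30. If the rules are adapted for a PLC at a different address, a minimal Python sketch using the standard ipaddress module can compute or double-check the value; the helper names ip_to_int and int_to_ip are hypothetical.

```python
import ipaddress


def ip_to_int(address):
    """Return the 32-bit integer form of a dotted-decimal IPv4 address."""
    return int(ipaddress.IPv4Address(address))


def int_to_ip(value):
    """Return the dotted-decimal form of a 32-bit integer, for cross-checking."""
    return str(ipaddress.IPv4Address(value))


# Equivalent manual calculation: 192*256**3 + 168*256**2 + 0*256 + 30
print(ip_to_int("192.168.0.30"))  # 3232235550
print(int_to_ip(3232235550))  # 192.168.0.30
```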

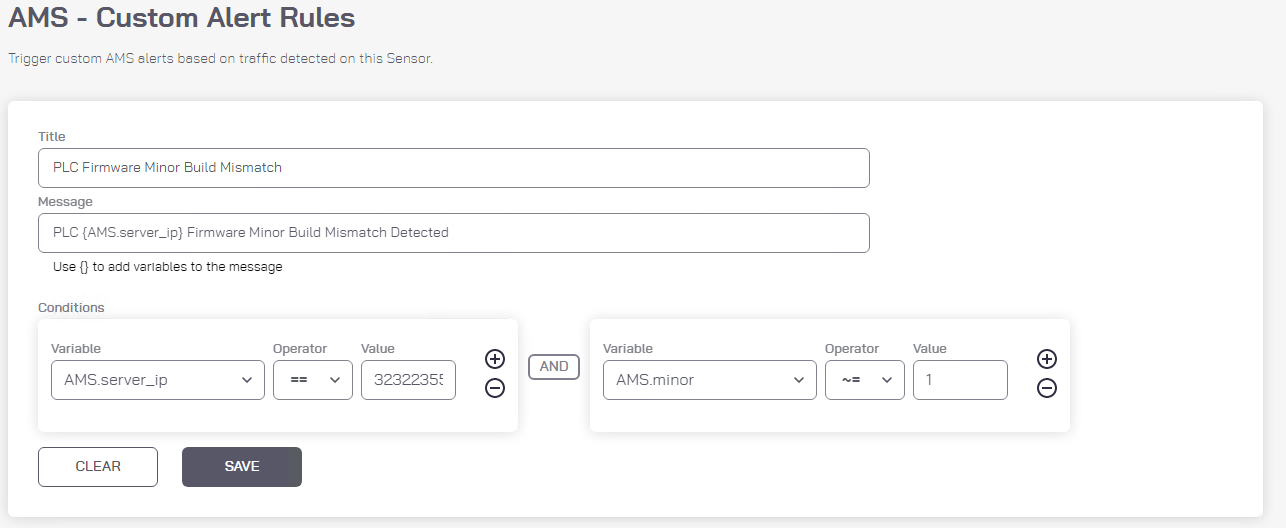

Create the custom alert to detect a change in the PLC firmware minor build number (Figure 2‑28):

Enter “PLC Firmware Minor Build Mismatch” as the title for the custom alert.

Enter “PLC {AMS_server_ip} Firmware Minor Version Build Mismatch Detected” as the message to display with the alert.

Set the following conditions:

1) AMS_server_ip == 3232235550 (Note: this is the PLC IP address 192.168.0.30 in integer format.)
2) AND AMS_minor ~= 1

Figure 2‑28 Azure Defender for IoT Custom Alert for Firmware Minor Version Number Change

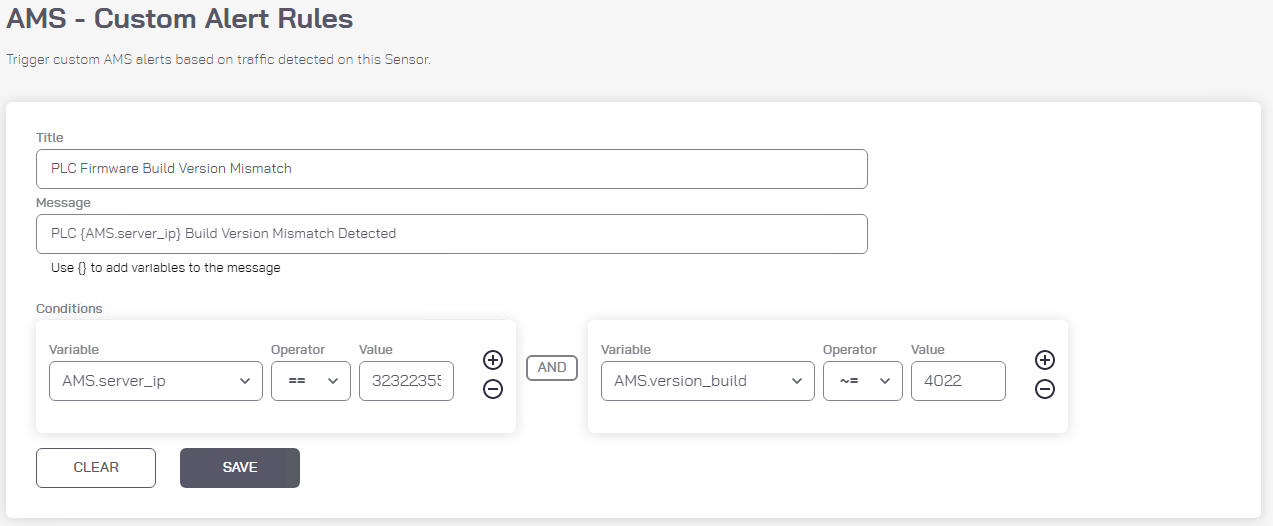

Create the custom alert to detect a change in the PLC firmware build version (Figure 2‑29):

Enter “PLC Firmware Build Version Mismatch” as the title for the custom alert.

Enter “PLC {AMS_server_ip} Build Version Mismatch Detected” as the message to display with the alert.

Set the following conditions:

1) AMS_server_ip == 3232235550 (Note: this is the PLC IP address 192.168.0.30 in integer format.)
2) AND AMS_version_build ~= 4022

Figure 2‑29 Azure Defender for IoT Custom Alert for Firmware Build Version Number Change
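Taken together, the three rules compare each firmware version field seen in AMS traffic from the PLC against the approved version 3.1.4022 and raise one alert per field that differs. The following Python sketch models that logic for offline reasoning only; it reads the ~= operator in the conditions as "not equal", and the AmsObservation record and function names are hypothetical, not part of the Defender for IoT Horizon interface.

```python
from dataclasses import dataclass

PLC_IP_INT = 3232235550  # 192.168.0.30, as in the rule conditions
APPROVED_VERSION = "3.1.4022"  # approved production firmware version


@dataclass
class AmsObservation:
    """Hypothetical record of the fields the rules inspect in one AMS message."""
    server_ip: int
    major: int
    minor: int
    build: int


def parse_version(version):
    """Split 'major.minor.build' into three integers."""
    major, minor, build = (int(part) for part in version.split("."))
    return major, minor, build


def fired_alerts(obs, approved=APPROVED_VERSION, plc_ip=PLC_IP_INT):
    """Return the titles of the custom alerts whose conditions all hold."""
    if obs.server_ip != plc_ip:
        return []  # every rule first requires AMS_server_ip == PLC address
    major, minor, build = parse_version(approved)
    alerts = []
    if obs.major != major:  # AMS_major ~= 3
        alerts.append("PLC Firmware Major Build Mismatch")
    if obs.minor != minor:  # AMS_minor ~= 1
        alerts.append("PLC Firmware Minor Build Mismatch")
    if obs.build != build:  # AMS_version_build ~= 4022
        alerts.append("PLC Firmware Build Version Mismatch")
    return alerts
```

For example, a message from the PLC reporting firmware 3.1.4024 would trigger only the build version alert, while traffic from any other AMS server address triggers none of these rules.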

2.6 OSIsoft PI Data Archive

The OSIsoft product included in this practice guide is Process Information (PI), which is used to collect, store, analyze, and visualize testbed data. It was used in Builds 1, 2, 3, and 4 to provide the historian capability, by collecting and storing testbed data, and the BAD capability, by alerting when activity deviates from a baseline.

OSIsoft PI is a suite of software applications for capturing, analyzing, and storing real-time data for industrial processes. Although the PI System is typically used as a process historian, it also collects, stores, and manages data in real time. Interface nodes retrieve data from disparate sources and forward it to the PI Server, where the PI Data Archive resides. Data is stored in the Data Archive and is accessible through the assets defined in the Asset Framework (AF). Users access the data either directly from the Data Archive or through the AF Server by using tools in the PI visualization suite.

2.6.1 Host and Network Configuration

PI was installed on virtual machines hosted on hypervisors located in the DMZ and CRS networks. Details and resources for each virtual machine are provided in Table 2‑10, Table 2‑11, and Table 2‑12. The overall build architectures that use PI are described in Section 4.5 of Volume B.

Table 2‑10 OSIsoft PI Domain Hosts Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| DMZ Historian | Virtual Machine | Microsoft Windows Server 2016 | 4x Intel Xeon E3-1240 | 8 GB | Boot: 80 GB; PI Data: 170 GB | DMZ 10.100.1.4 |

Table 2‑11 OSIsoft PI CRS Hosts Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| CRS Local Historian | Virtual Machine | Microsoft Windows Server 2016 | 4x Intel Xeon E5-2407 | 16 GB | Boot: 80 GB; PI Data: 170 GB | CRS Supervisory LAN 192.168.0.21 |

Table 2‑12 OSIsoft PI PCS Hosts Deployment

| Name | System | OS | CPU | Memory | Storage | Network |
|---|---|---|---|---|---|---|
| PCS Local Historian | Virtual Machine | Microsoft Windows Server 2008 R2 | 1x Intel i5-4590 | 2 GB | 50 GB | PCS VLAN 2 172.16.2.14 |

2.6.2 Installation

PI was previously installed in the testbed as part of NISTIR 8219, Securing Manufacturing Industrial Control Systems: Behavioral Anomaly Detection (https://www.nccoe.nist.gov/sites/default/files/library/mf-ics-nistir-8219.pdf). For this project, the existing CRS Local Historian and DMZ Historian VMs were upgraded to Microsoft Windows Server 2016, and all PI software components were subsequently upgraded. Step-by-step installation instructions for each PI component are omitted for brevity; detailed vendor instructions are available in the OSIsoft Live Library: https://livelibrary.osisoft.com/.

DMZ Historian Server

The following software is installed on the DMZ Historian server:

Microsoft SQL Server 2019 Express 15.0.2080.9

PI Server 2018 (Data Archive Server, Asset Framework Server)

PI Server 2018 SP3 Patch 1

PI Interface Configuration Utility version 1.5.1.10

PI to PI Interface version 3.10.1.10

PI Interface for Ramp Soak Simulator Data version 3.5.1.12

PI Interface for Random Simulator Data version 3.5.1.10

PI Connector Relay version 2.6.0.0

PI Data Collection Manager version 2.6.0.0

PI Web API 2019 SP1 version 1.13.0.6518

CRS Local Historian Server (Collaborative Robotics System)

The following software is installed on the CRS Local Historian server:

Microsoft SQL Server 2019 Express 15.0.2080.9

PI Asset Framework Service 2017 R2 Update 1

PI Data Archive 2017 R2A

PI Server 2018 SP3 Patch 1

PI Interface Configuration Utility version 1.5.1.10

PI to PI Interface version 3.10.1.10

PI Interface for Ramp Soak Simulator Data version 3.5.1.12

PI Interface for Random Simulator Data version 3.5.1.10

PI Interface for Performance Monitor version 2.2.0.38

PI Ping Interface version 2.1.2.49

PI Interface for Modbus ReadWrite version 4.3.1.24

PI Interface for SNMP ReadOnly version 1.7.0.37

PI TCP Response Interface version 1.3.0.47

PI ProcessBook 2015 R3 Patch 1 version 3.7.1.249

PI Vision 2019 Patch 1 version 3.4.1.10

PI System Connector version 2.2.0.1

PCS Local Historian (Process Control System Historian)

The following software is installed on the PCS Local Historian server:

Rockwell FactoryTalk Historian SE version 1.00

2.6.3 Configuration

The following sections describe how to configure select PI components to enable the capabilities described in this guide. Configurations for the other PI components are not included for brevity.

2.6.3.1 PI to PI Interface (PCS)

The PCS uses the Rockwell FactoryTalk Historian to collect, store, and analyze historical process data. The PI to PI Interface replicates this process data to the DMZ Historian server. The following steps describe how to configure the PI to PI Interface to collect data from the Rockwell FactoryTalk Historian.

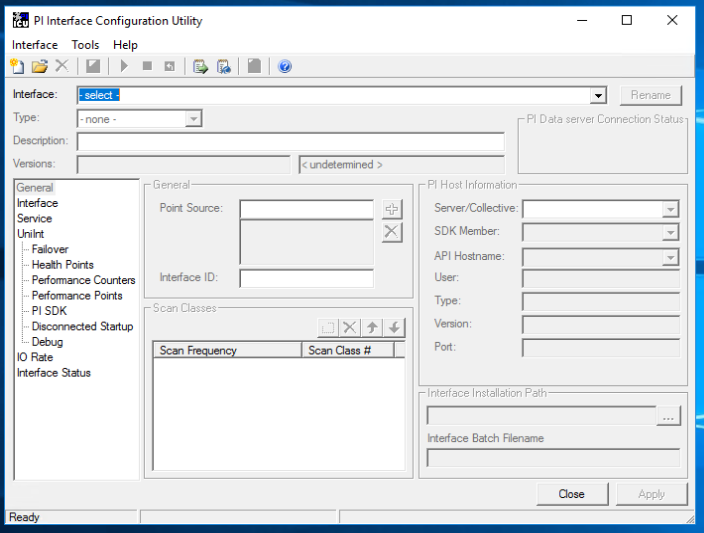

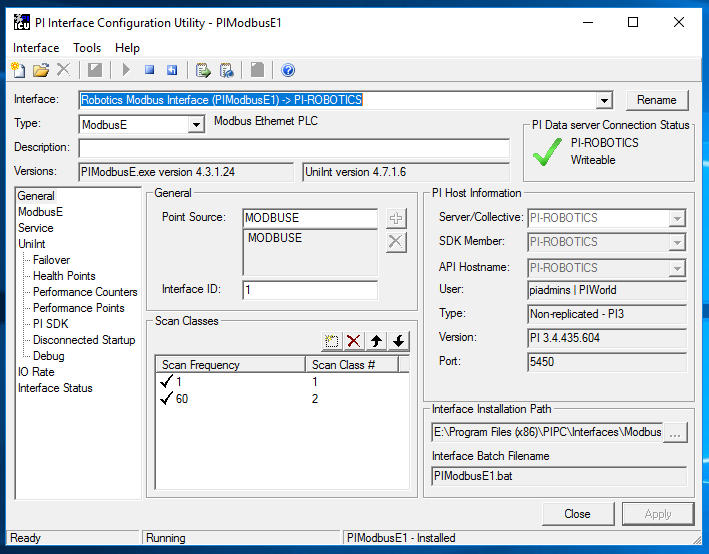

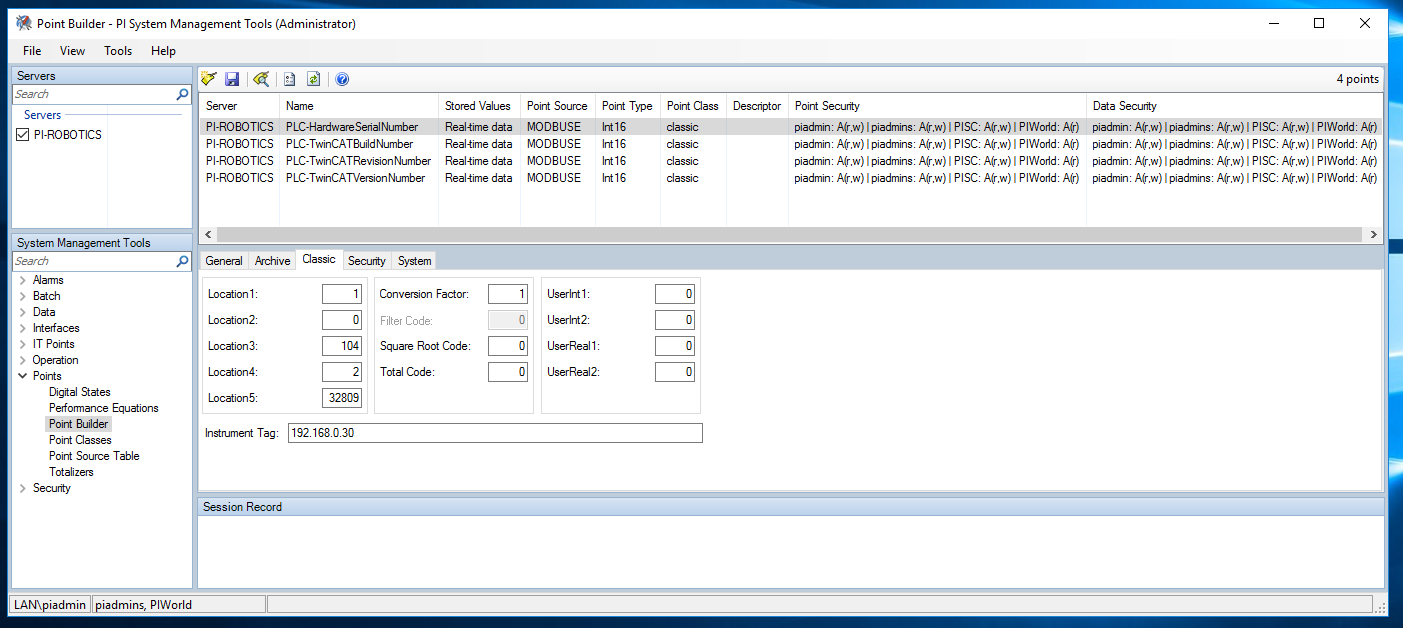

On the DMZ Historian server, launch the PI Interface Configuration Utility from the Start menu (Figure 2‑30) and sign in with the local administrator account.

Figure 2‑30 Screenshot of the PI Interface Configuration Utility before the Interface is configured.

On the top menu, click Interface > New Windows Interface Instance from BAT File…

Navigate to E:\Program Files (x86)\PIPC\Interfaces\PItoPI and select the file PItoPI.bat_new.

In the “Select Host PI Data server/collective” dialog box, select PI-DMZ from the drop-down menu and click OK.

In the left navigation panel, select PItoPI. In the Source host text box, enter “172.16.2.4”.

In the left navigation panel, select Service. In the “Create / Remove” section, click the Create button, and then click Yes in the dialog box.

Enter the command net start PItoPI in the pisrvsitestart.bat file and the command net stop PItoPI in the pisrvsitestop.bat file. Save and close the files.

At the bottom of the PI Interface Configuration Utility, click the Apply button. On the top menu bar, click the green play button to start the service.

Close the PI Interface Configuration Utility. The interface is now configured to pull tags from the Rockwell Historian.

2.6.3.2 PI System Connector (CRS)

The PI System Connector replicates process data from the CRS Local Historian server to the DMZ Historian. The following steps describe how to configure the PI System Connector to collect data from the OSIsoft PI Server on the CRS Local Historian.

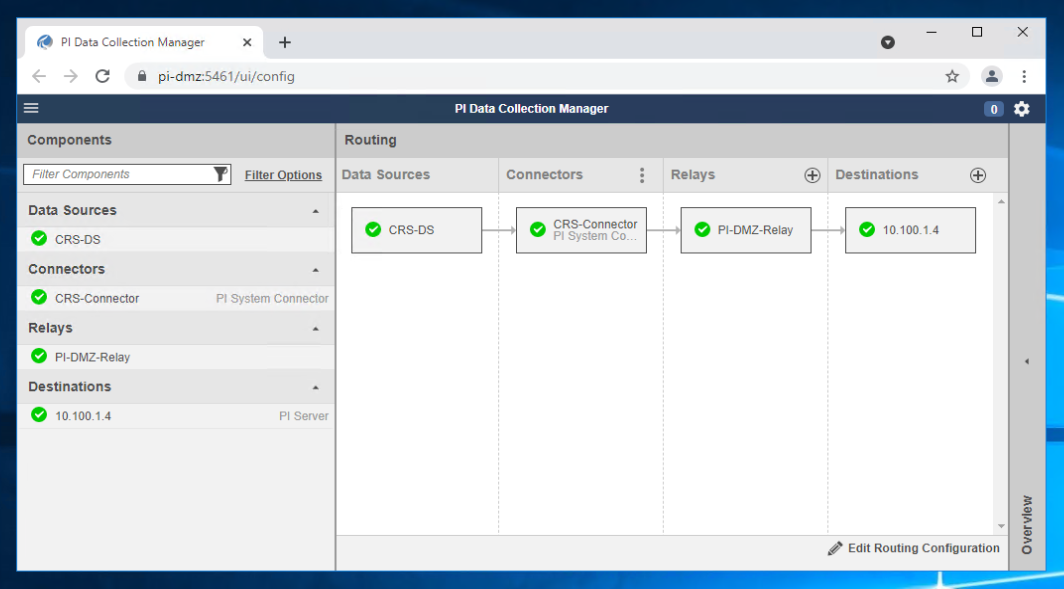

Figure 2‑31 Screenshot of the PI Data Collection Manager Displaying Green Checkmarks After the PI System Connector is Properly Configured

On the DMZ Historian server, launch the PI Data Collection Manager from the Start menu (Figure 2‑31) and sign in with the local administrator account.

Click + in the Relays column to add a new connector relay. Use the following settings:

Name: PI-DMZ-Relay

Address: 10.100.1.4

Port: 5460

Username: .\piconnrelay_svc

Click Save Settings to add the connector relay.

Click + Add Destination to add the target PI Data Archive and PI AF Server. Use the following settings:

Name: 10.100.1.4

PI Data Archive Address: 10.100.1.4

AF Server: 10.100.1.4

Click Save Settings to add the destination.

On the CRS Local Historian server, open the PI System Connector Administration from the Start menu and sign in with the local administrator account.

Click Set up Connector to create a new connector.

Use the following information to request registration:

Registration Server Address: https://PI-DMZ:5460

Registration Server Username: piconnrelay_svc

Registration Server Password:

Description: Registration to PI-DMZ
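Before clicking Request Registration, it can help to confirm that the CRS Local Historian can reach the connector relay at PI-DMZ on port 5460. The following is a minimal Python sketch using only the standard socket module; it is an assumption-level connectivity check, not an OSIsoft tool, and the function name relay_reachable is hypothetical.

```python
import socket


def relay_reachable(host, port=5460, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    This only shows that something is listening on the port; it does not
    validate the HTTPS certificate or the relay credentials.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False  # refused, unreachable, timed out, or name not resolved
```

Run on the CRS Local Historian, relay_reachable("PI-DMZ") returning False would point to a name resolution, firewall, or relay service problem to resolve before the registration request can succeed.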

Click Request Registration to send the request to the DMZ Historian server.

On the DMZ Historian server, open the PI Data Collection Manager from the Start menu and sign in with the local administrator account.

Click Untitled Connector 1 and click Approve This Registration and Configure to approve the PI System Connector registration.

In the Untitled Connector 1 details panel, click Edit.

Use the following settings to create the CRS-Connector:

Name: CRS-Connector

Description: Registration to PI-DMZ

Click Save Settings to create the CRS-Connector.

Click CRS-Connector in the Connectors column. In the Overview panel, click the CRS-Connector: No Data Sources option to create a data source.

In the CRS-Connector Connector Details section of the Overview panel, click + Add Data Source.

In the Data Source Settings window, use the following settings:

Name: CRS-DS

Source AF Server: PI-Robotics

Source AF Database: TestbedDatabase

Select Collect All Data from this Entire Database.

Click Save to save the data source.

Click 10.100.1.4 in the Destination column of the Routing panel and then click Data in the 10.100.1.4 Destination Details panel to configure the destination database for the CRS-Connector.

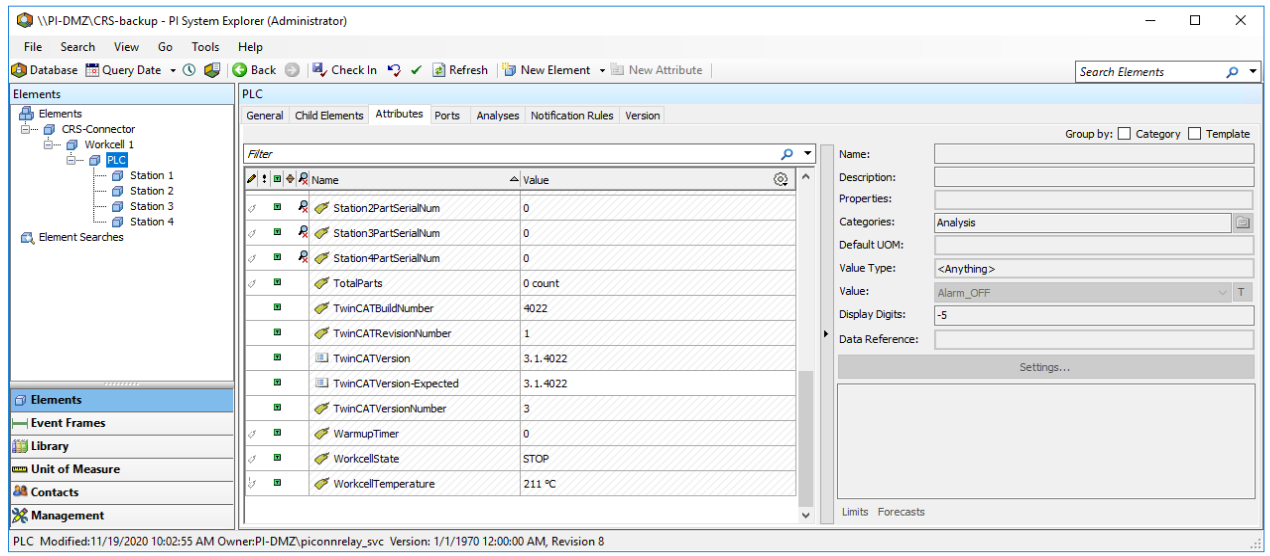

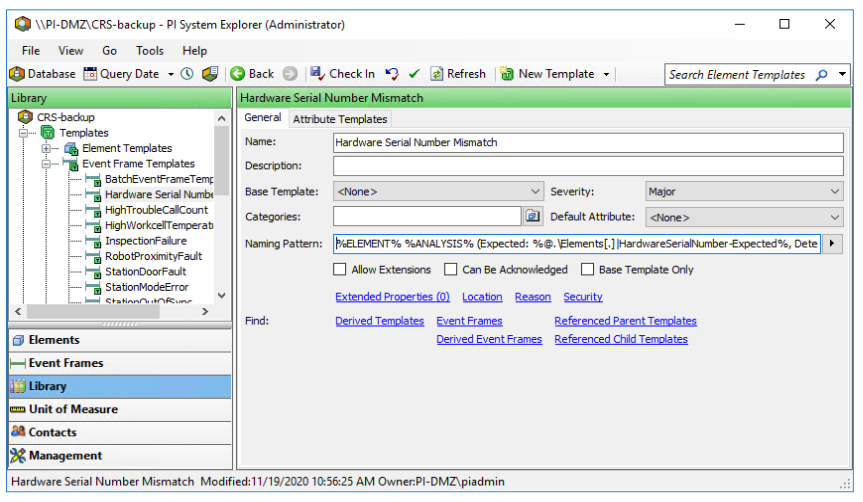

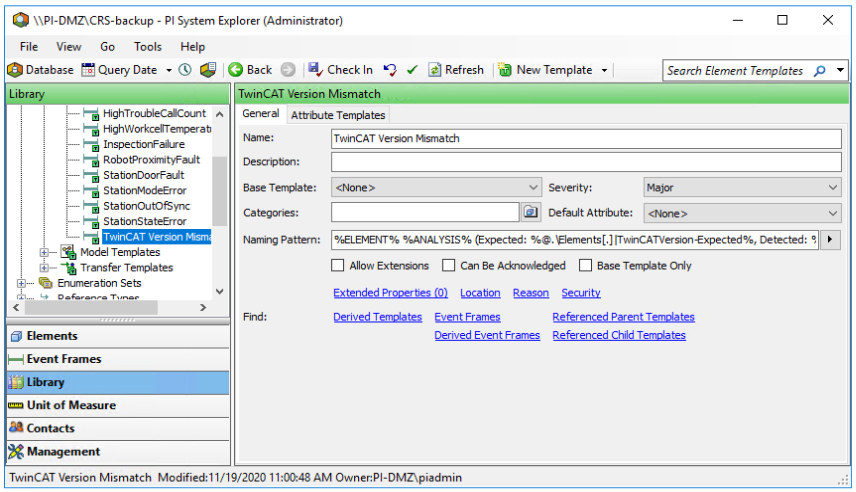

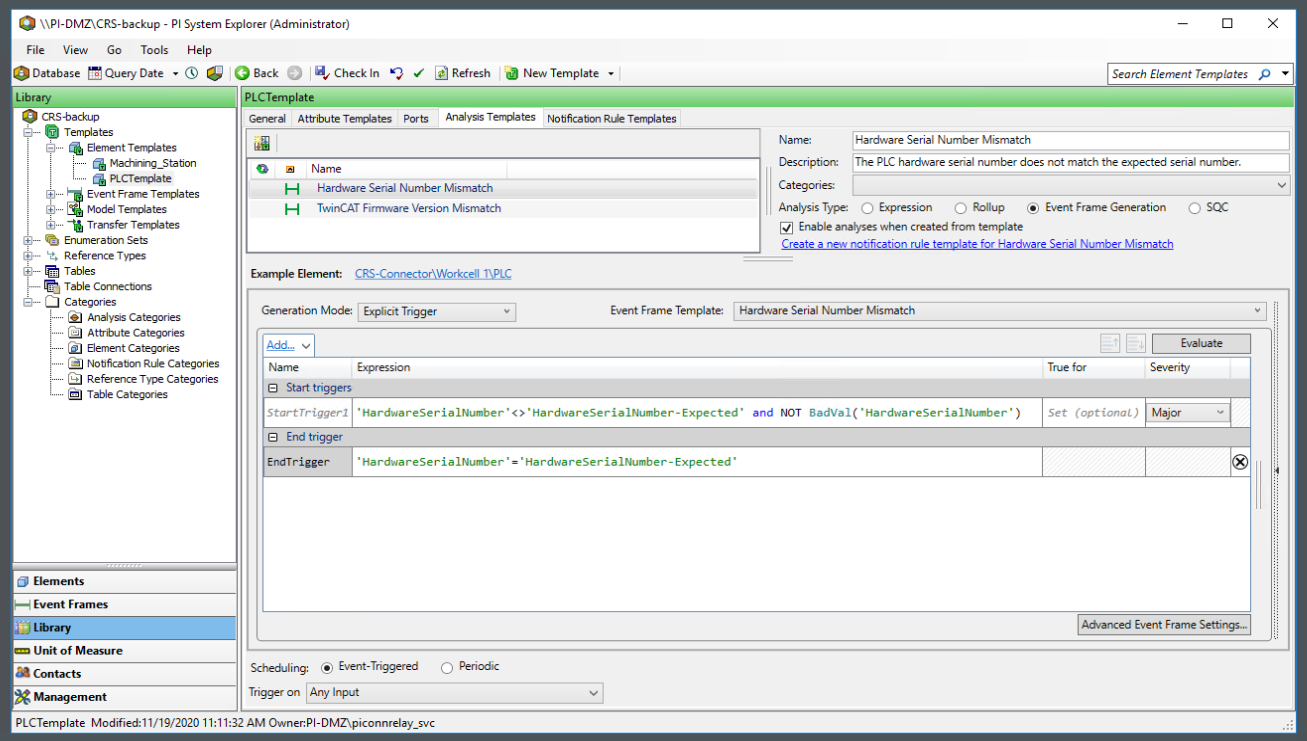

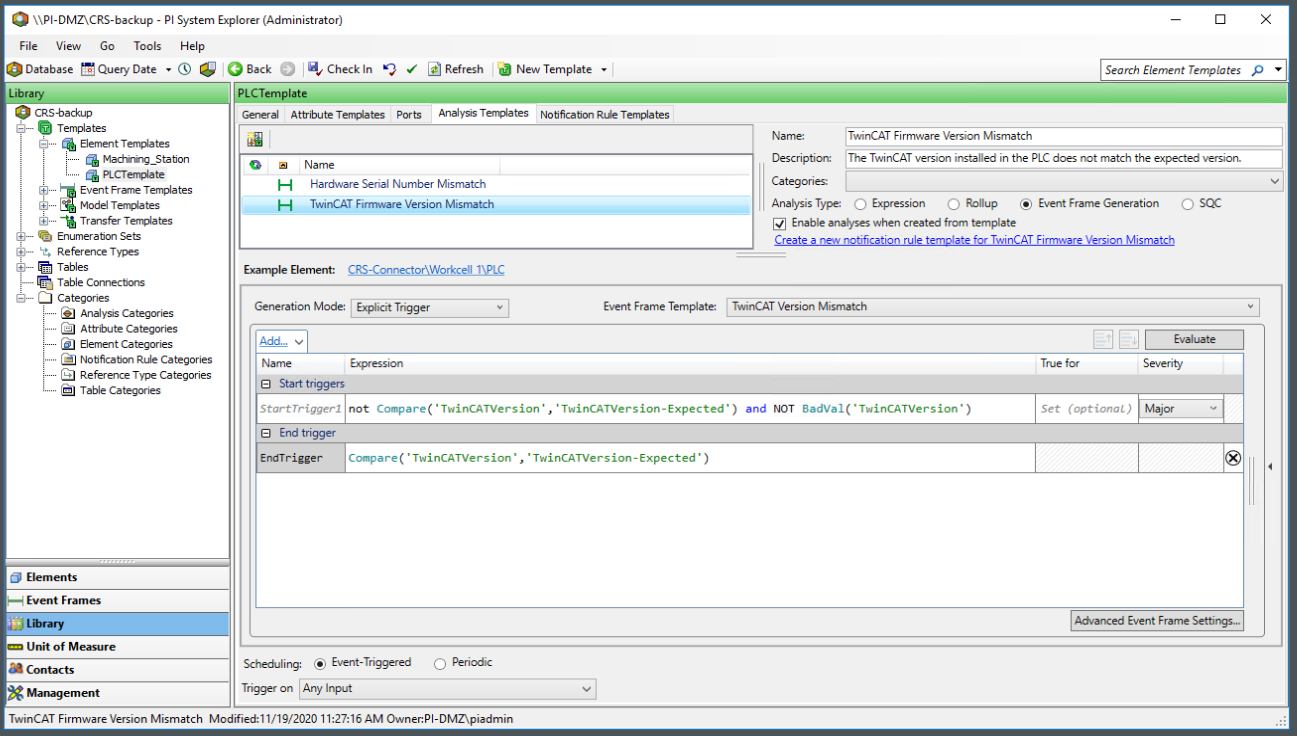

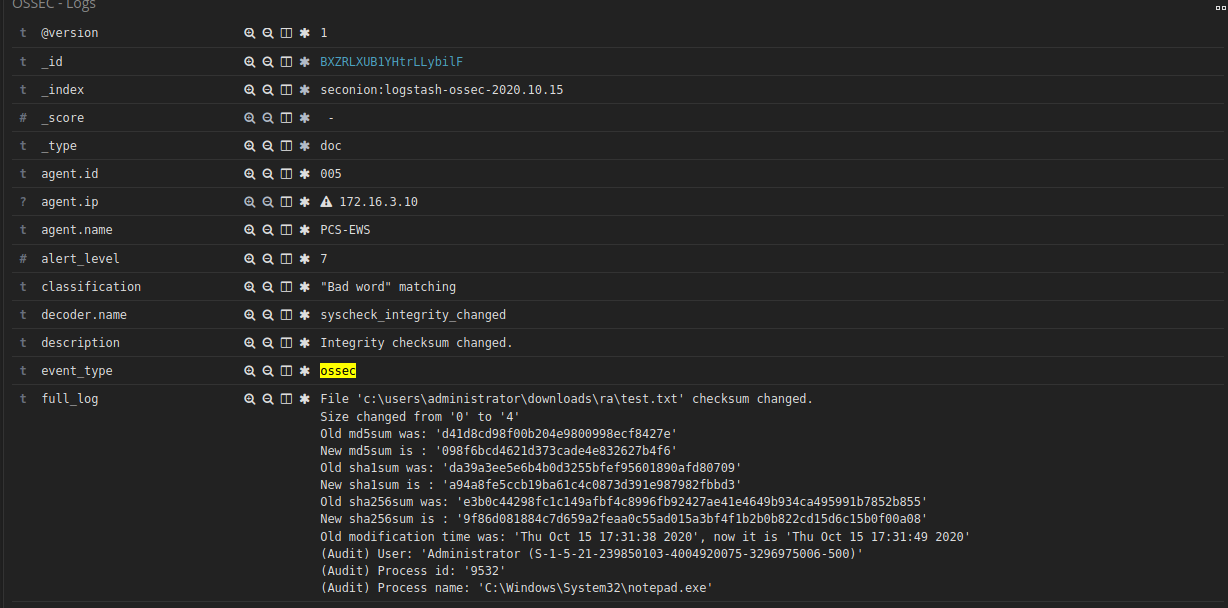

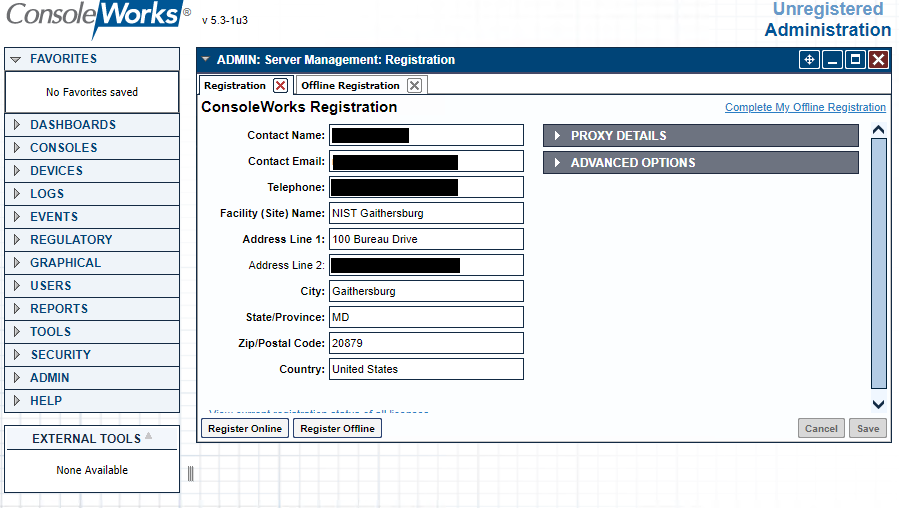

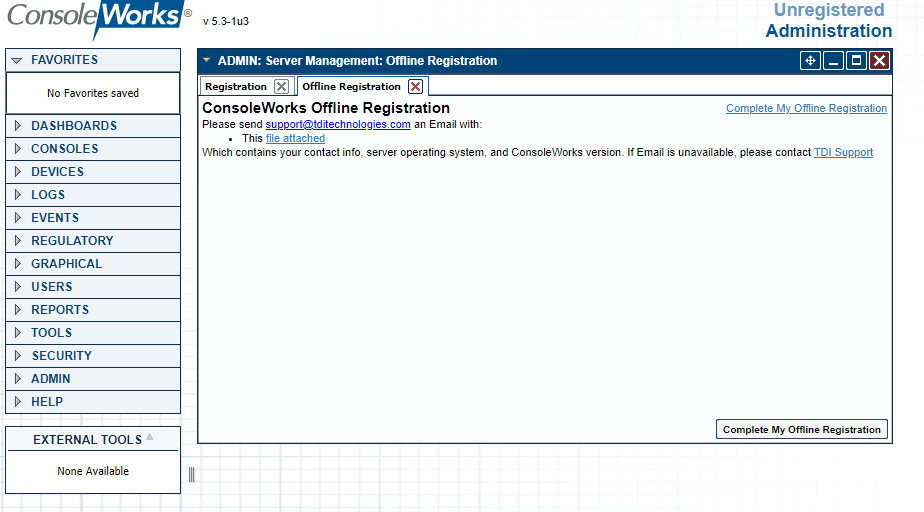

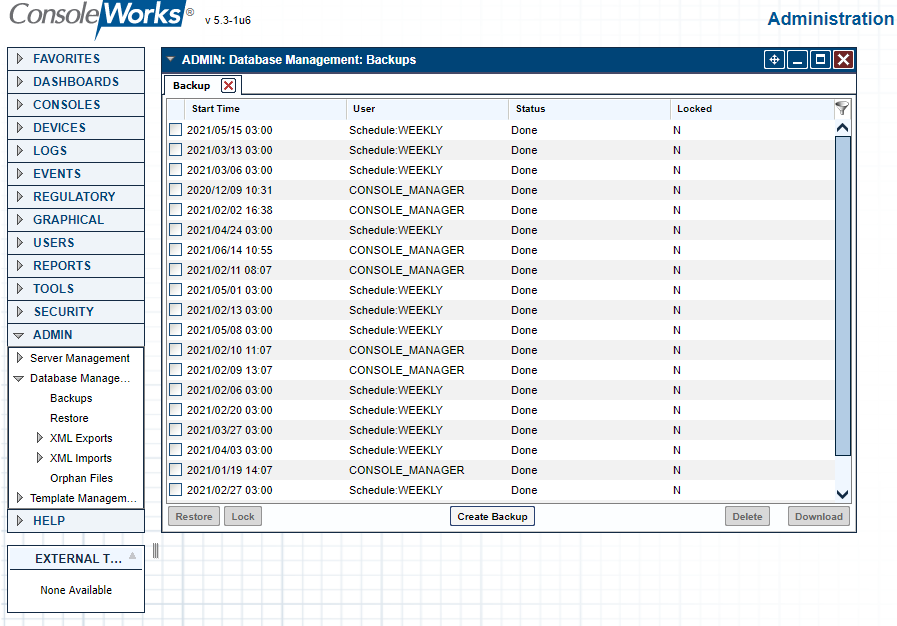

In the 10.100.1.4 Destination Details panel, change the selection from Change Default Settings for new connectors to “CRS-Connector”, and then click Edit Destination Data Settings.